Research Projects

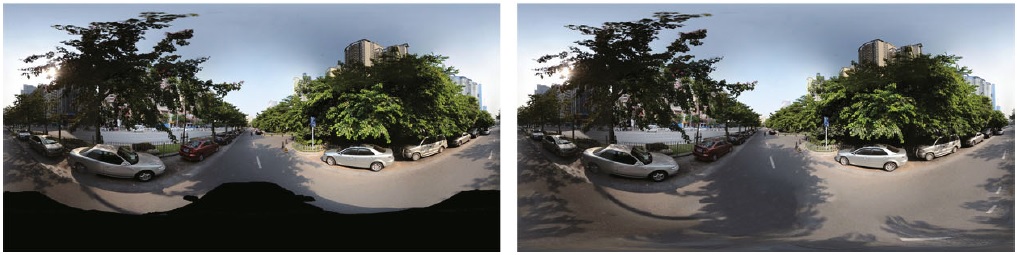

Pamoramic vision data analysis, processing and VR interaction, Key Program,

National Natural Science Foundation,

PI: Song-Hai Zhang, Project Number: 6213000127, 2022-2026.

Deep learning algorithm and framework for computational visual media,

Key International Joint Research Program, National Natural Science Foundation,

PI: Shi-Min Hu, Project number: 62220106003, 2023-2027.

Narrative Visual Content Creation and Immersive Interaction of Panoramic Video,

International Cooperation and Exchange Programs (NSFC-ISF), National Natural Science Foundation,

PI: Song-Hai Zhang, Project number: 62361146854, 2024-2026.

Deep Learning Framework and Large Model Application Verification for Complex

Heterogeneous Computing Systems, Majar Program, National Natural Science Foundation,

PI: Shi-Min Hu, Project number: 62495060, 2025-2029.

2025

Fast Galerkin Multigrid Method for Unstructured Meshes

ACM Transactions on Graphics, 2025, Vol.44, No.6, Article No.179, 1 - 16.

Jia-Ming Lu, Tailing Yuan, Zhe-Han Mo, Shi-Min Hu

This research presents an efficient multigrid solver for deformable body simulations

on unstructured tetrahedral meshes. The method combines the Full Approximation Scheme

with Galerkin formulation and introduces a matrix-free vertex block Jacobi smoother

that eliminates the computational burden of dense coarse matrices. The approach

supports both piecewise constant and linear Galerkin formulations and achieves up to

6.9x speedup over traditional methods. Comprehensive GPU optimization techniques address

parallel architecture challenges through Morton sorting, grid reduction, and spatial hashing.

Extensive experiments demonstrate robust convergence across varying mesh resolutions,

material stiffness values, extreme deformations, and complex collision scenarios, enabling

practical simulation of million-vertex meshes at interactive frame rates.

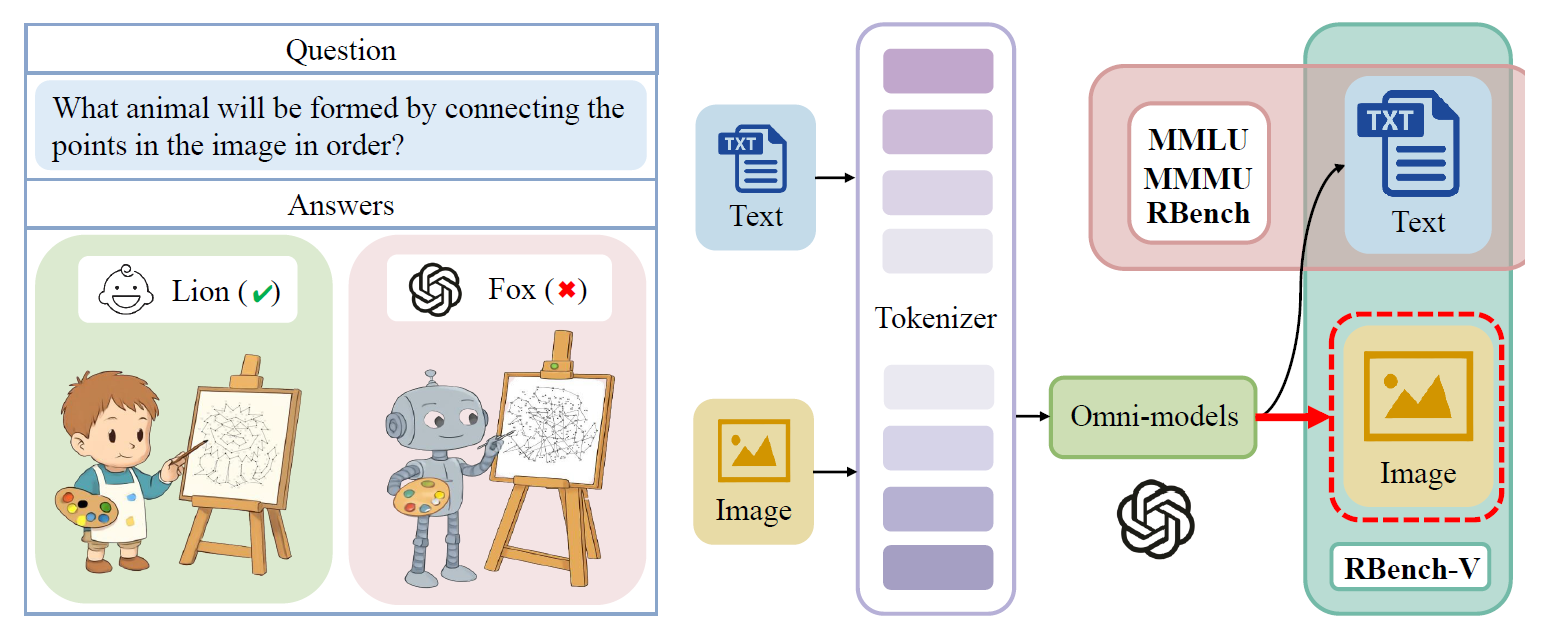

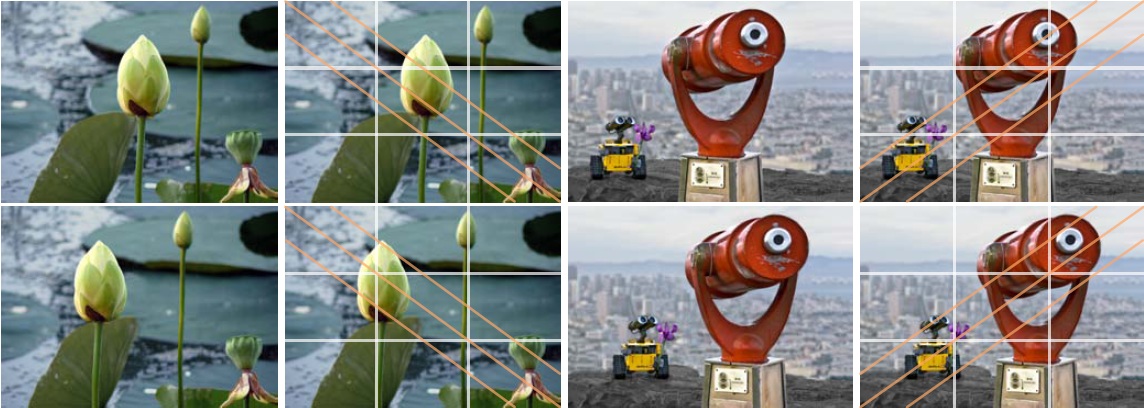

RBench-V: A Primary Assessment for Visual Reasoning Models with Multimodal Outputs

NeurIPS 2025 Datasets and Benchmarks Track, 2025.

Meng-Hao Guo, Xuanyu Chu, Qianrui Yang, Zhe-Han Mo, Yiqing Shen, Pei-lin Li, Xinjie Lin, Jinnian Zhang, Xin-Sheng Chen, Yi Zhang, Kiyohiro Nakayama, Zhengyang Geng, Houwen Peng, Han Hu, Shi-Min Hu

The rapid advancement of native multi-modal models and omni-models, exemplified by GPT-4o,

Gemini and o3 with their capability to process and generate content across modalities such

as text and images, marks a significant milestone in the evolution of intelligence.

Systematic evaluation of their multi-modal output capabilities in visual thinking process

(a.k.a., multi-modal chain of thought, M-CoT) becomes critically important. However,

existing benchmarks for evaluating multi-modal models primarily focus on assessing

multi-modal inputs and text-only reasoning process while neglecting the importance of

reasoning through multi-modal outputs. In this paper, we present a benchmark, dubbed as

RBench-V, designed to assess models¡¯ vision-indispensable reasoning. To conduct RBench-V,

we carefully hand-pick 803 questions covering math, physics, counting and games. Unlike

problems in previous benchmarks, which typically specify certain input modalities,

RBench-V presents problems centered on multi-modal outputs, which require image

manipulation, such as generating novel images and constructing auxiliary lines to

support reasoning process. We evaluate numerous open- and closed-source models on RBench-V,

including o3, Gemini 2.5 pro, Qwen2.5-VL, etc. Even the best-performing model, o3, achieves

only 25.8% accuracy on RBench-V, far below the human score of 82.3%, which shows

current models struggle to leverage multi-modal reasoning. Data and code are available

at https://evalmodels.github.io/rbenchv.

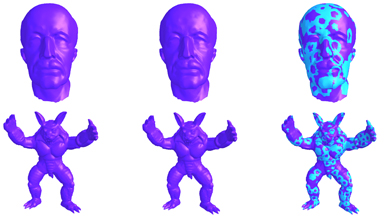

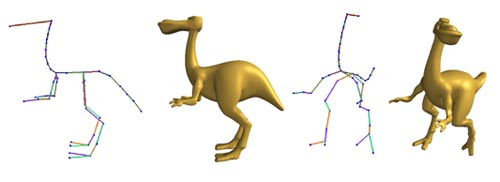

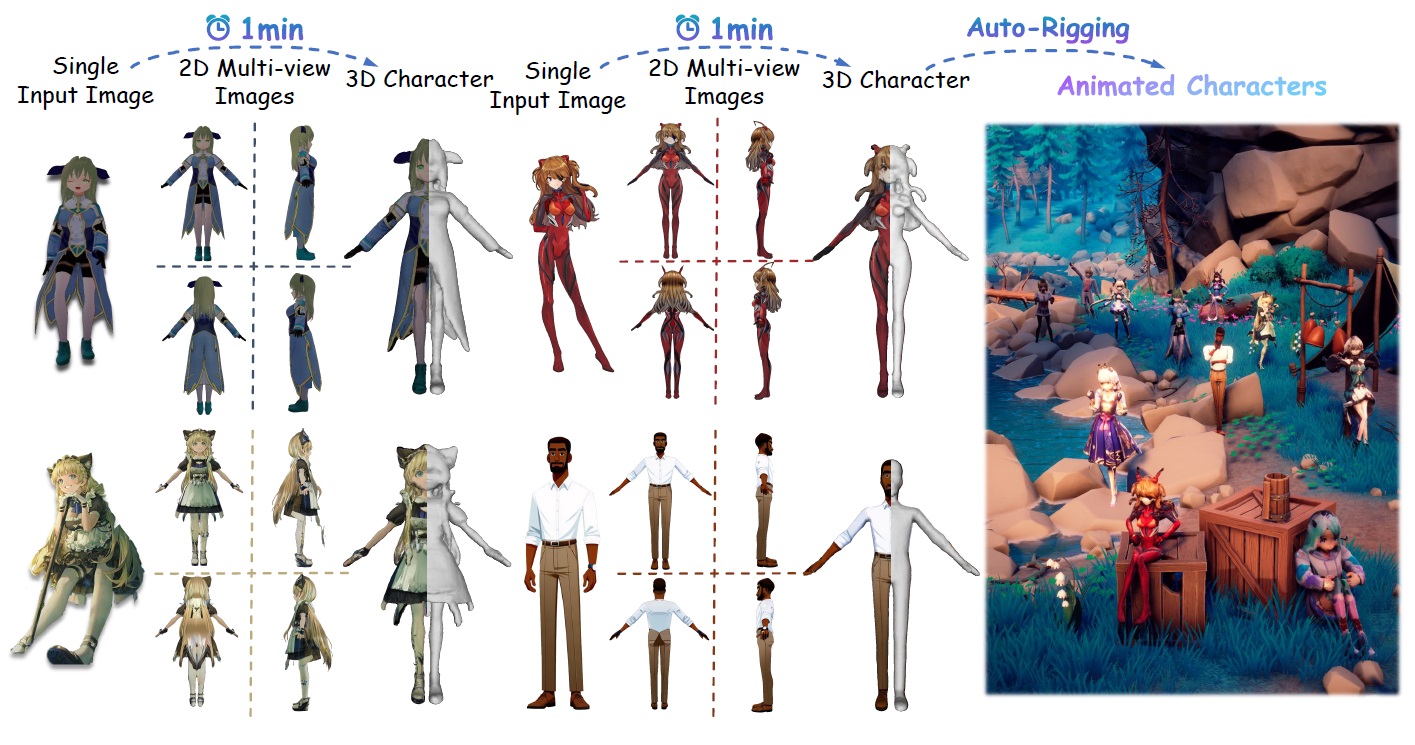

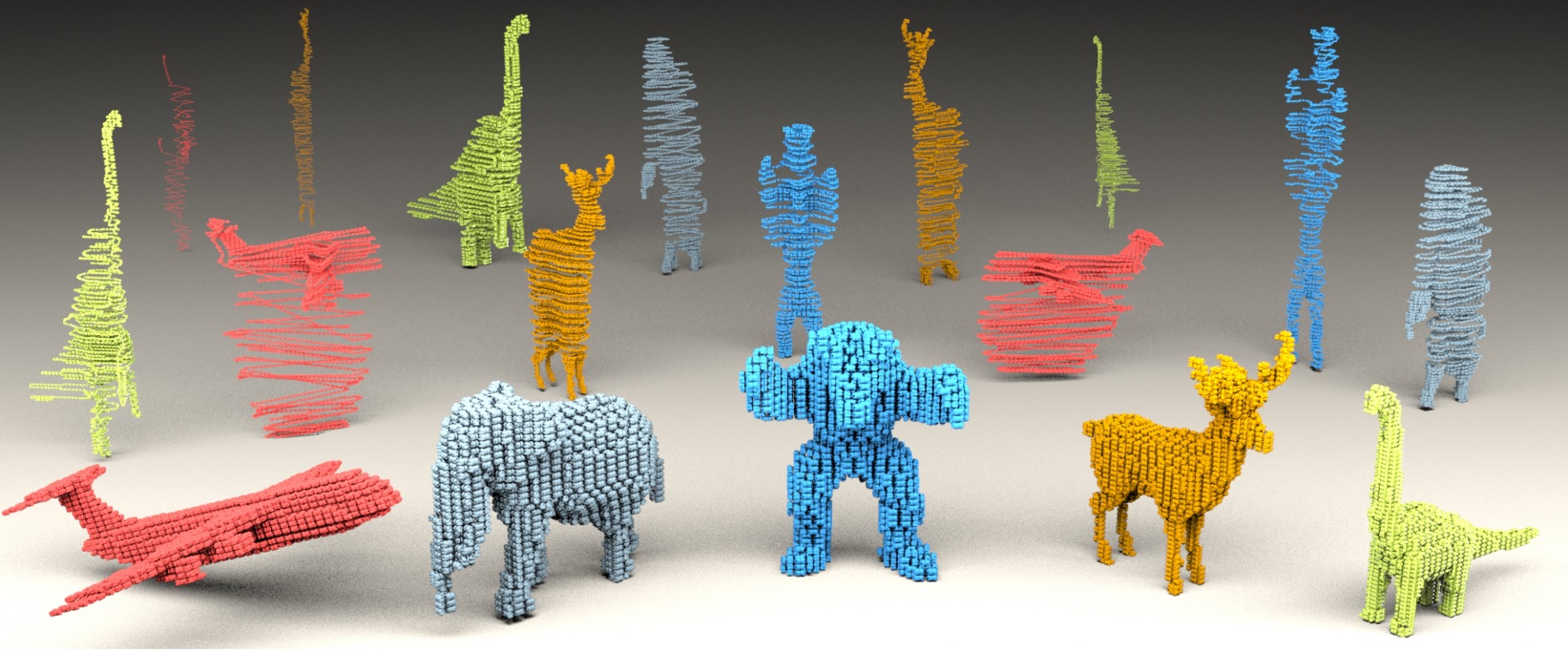

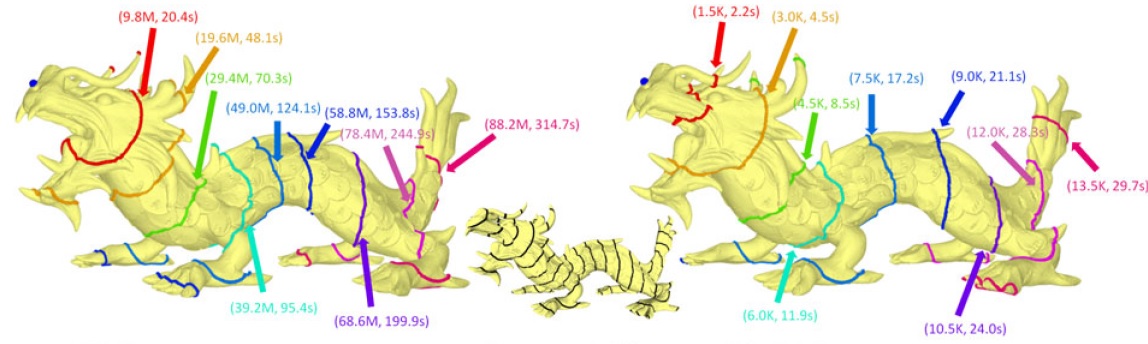

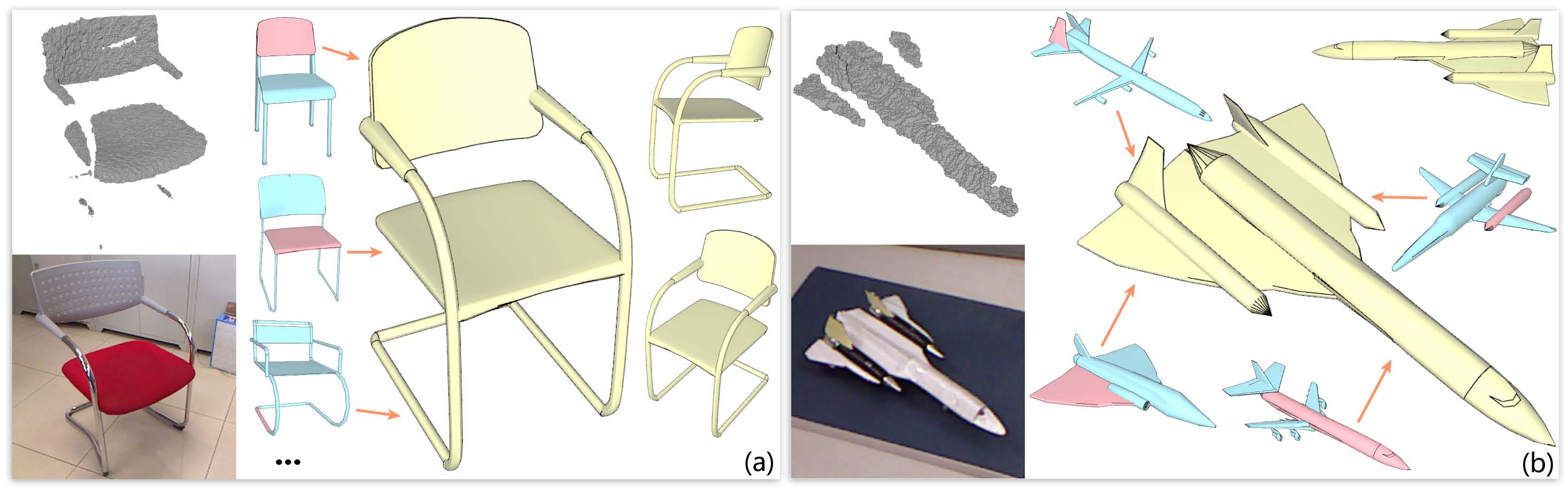

One Model to Rig Them All: Diverse Skeleton Rigging with UniRig

Simulation

ACM Transactions on Graphics, 2025, Vol.44, No.4, Article No.123, 1 - 19.

Jia-Peng Zhang, Cheng-Feng Pu, Meng-Hao Guo, Yan-Pei Cao, Shi-Min Hu

The rapid evolution of 3D content creation, encompassing both AI-powered

methods and traditional workflows, is driving an unprecedented demand

for automated rigging solutions that can keep pace with the increasing

complexity and diversity of 3D models. We introduce UniRig, a novel, unified

framework for automatic skeletal rigging that leverages the power of large

autoregressive models and a bone-point cross-attention mechanism to generate

both high-quality skeletons and skinning weights. Unlike previous

methods that struggle with complex or non-standard topologies, UniRig

accurately predicts topologically valid skeleton structures thanks to a new

Skeleton Tree Tokenization method that efficiently encodes hierarchical

relationships within the skeleton. To train and evaluate UniRig, we present

Rig-XL, a new large-scale dataset of over 14,000 rigged 3D models spanning

a wide range of categories. UniRig significantly outperforms state-of-the-art

academic and commercial methods, achieving a 215% improvement in rigging

accuracy and a 194% improvement in motion accuracy on challenging

datasets. Our method works seamlessly across diverse object categories,

from detailed anime characters to complex organic and inorganic structures,

demonstrating its versatility and robustness. By automating the tedious

and time-consuming rigging process, UniRig has the potential to speed up

animation pipelines with unprecedented ease and efficiency. Project Page:

https://zjp-shadow.github.io/works/UniRig/

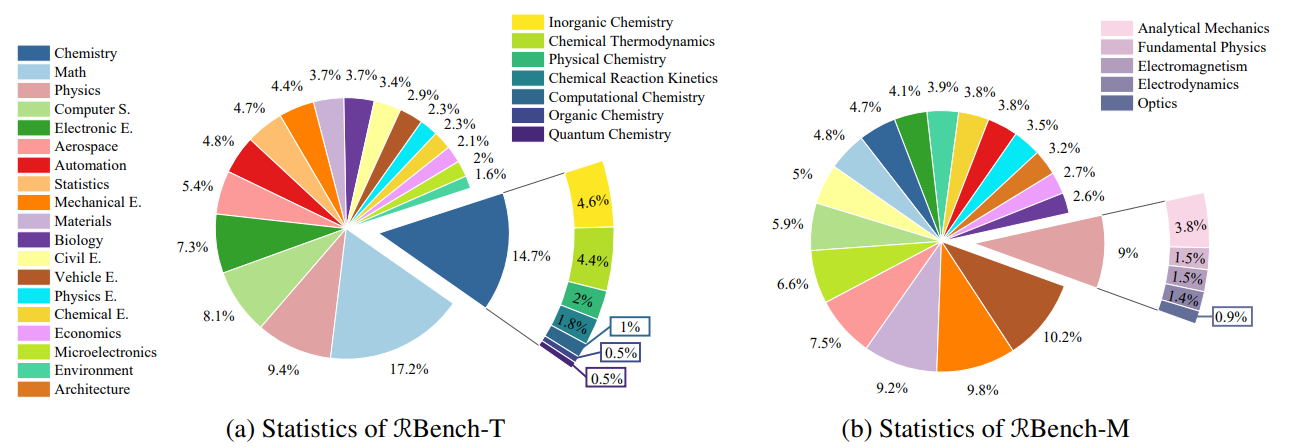

RBench: Graduate-level Multi-disciplinary Benchmarks for LLM & MLLM Complex Reasoning Evaluation

Proceedings of the 42nd International Conference on Machine Learning, Vancouver, Canada. PMLR 267, 2025.

Meng-Hao Guo, Jiajun Xu, Yi Zhang, Jiaxi Song, Haoyang Peng, Yi-Xuan Deng,

Xinzhi Dong, Kiyohiro Nakayama, Zhengyang Geng, Chen Wang, Bolin Ni, Guo-Wei Yang,

Yongming Rao, Houwen Peng, Han Hu, Gordon Wetzstein, Shi-min Hu

Reasoning stands as a cornerstone of intelligence, enabling the synthesis of

existing knowledge to solve complex problems. Despite remarkable progress,

existing reasoning benchmarks often fail to rigorously evaluate the nuanced

reasoning capabilities required for complex, real-world problemsolving,

particularly in multi-disciplinary and multimodal contexts. In this paper,

we introduce a graduate-level, multi-disciplinary, EnglishChinese benchmark,

dubbed as Reasoning Bench (RBench), for assessing the reasoning capability

of both language and multimodal models. RBench spans 1,094 questions across

108 subjects for language model evaluation and 665 questions across 83 subjects

for multimodal model testing. These questions are meticulously curated to

ensure rigorous difficulty calibration, subject balance, and cross-linguistic

alignment, enabling the assessment to be an Olympiad-level multidisciplinary

benchmark. We evaluate many models such as o1, GPT-4o, DeepSeek-R1, etc.

Experimental results indicate that advanced models perform poorly on complex

reasoning, especially multimodal reasoning. Even the top-performing model

OpenAI o1 achieves only 53.2% accuracy on our multimodal evaluation. Data and

code are made publicly available athttps://evalmodels.github.io/rbench/

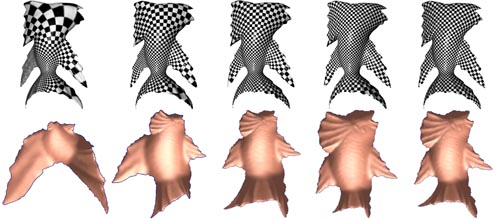

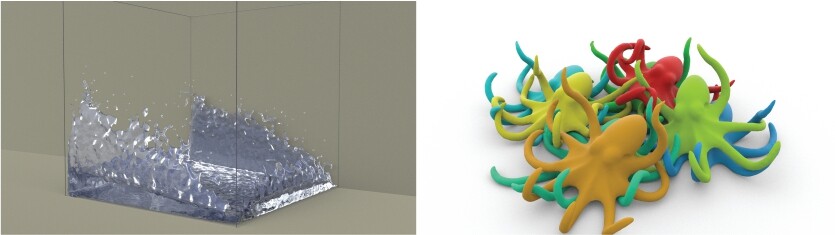

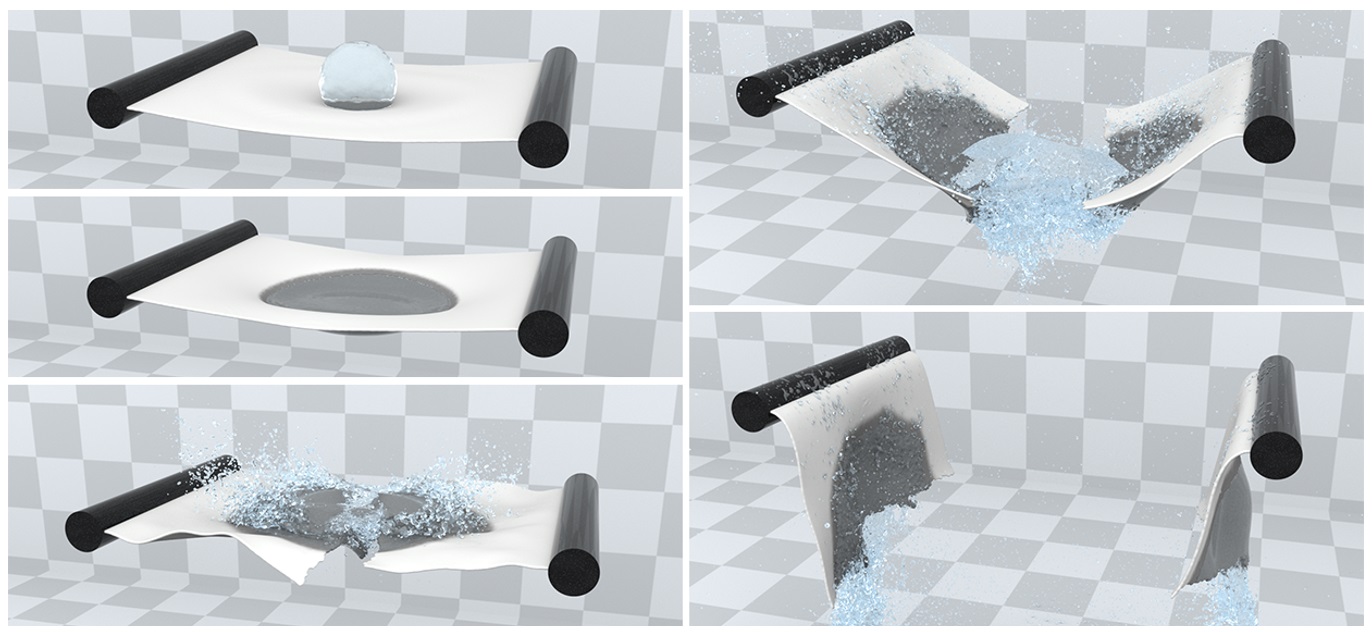

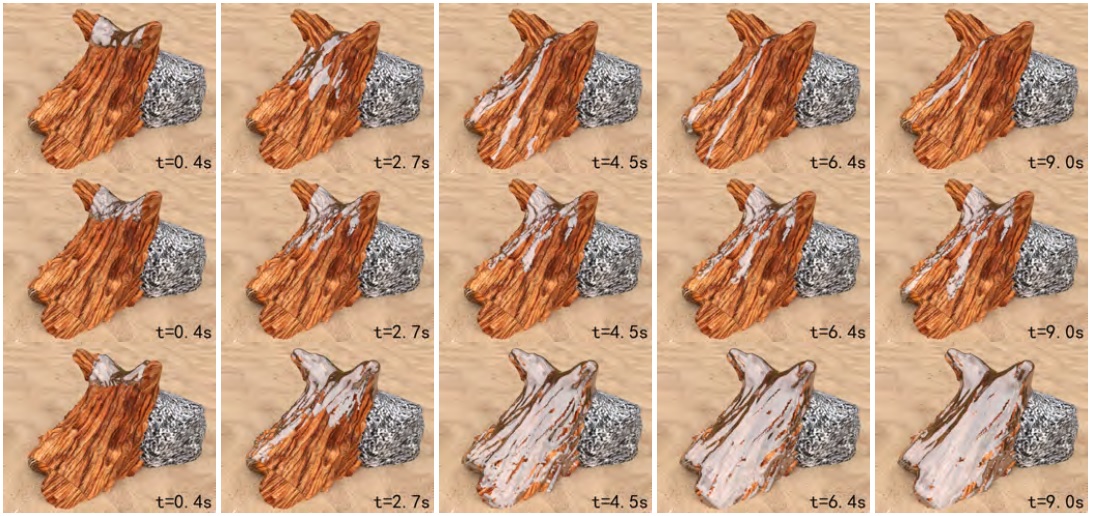

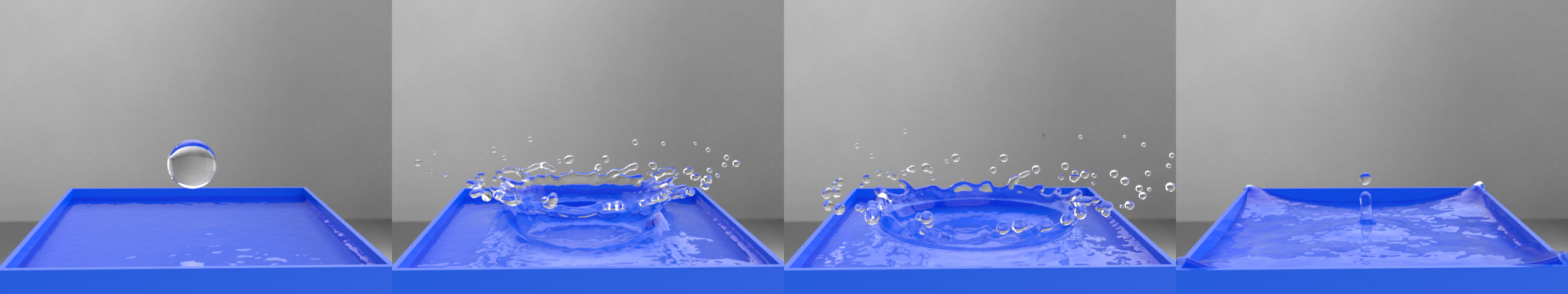

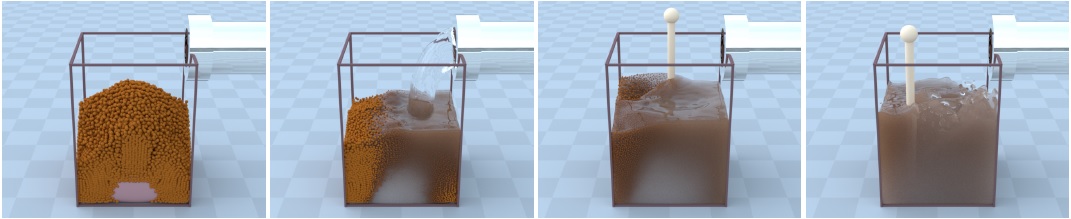

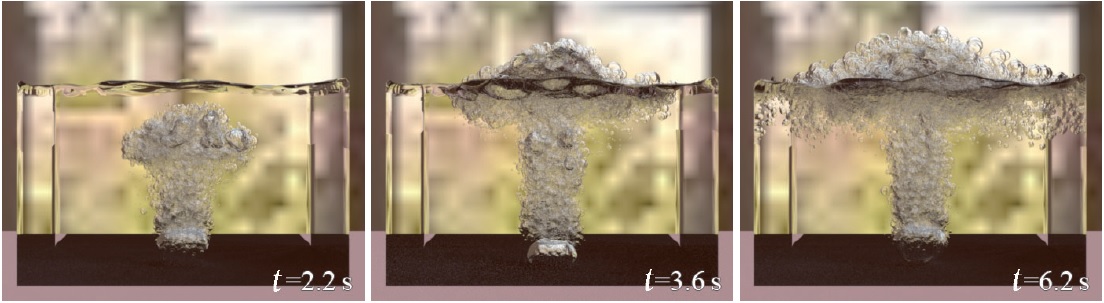

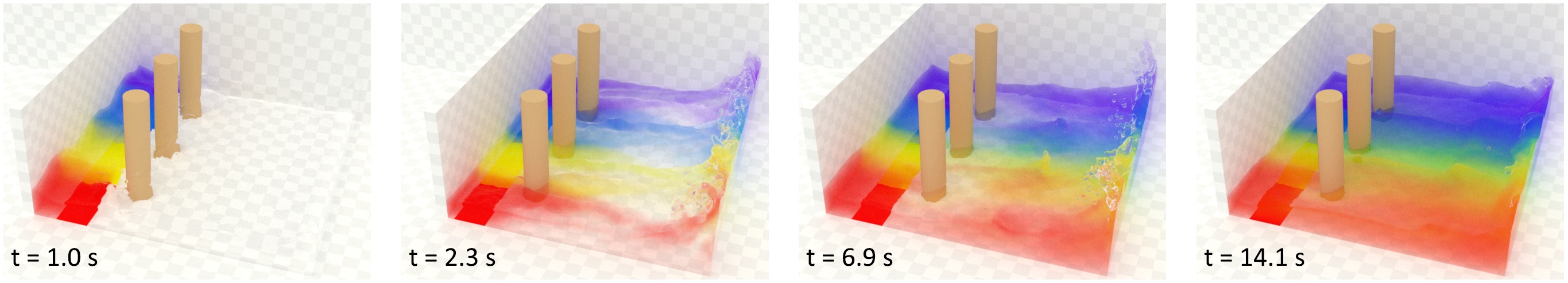

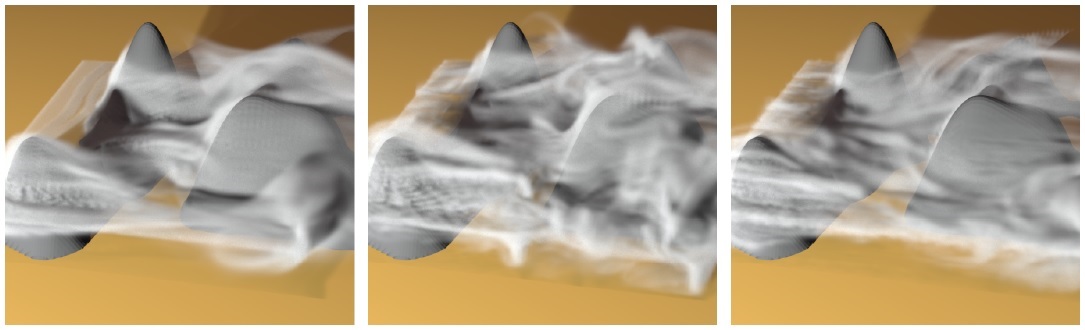

Reliable Iterative Dynamics: A Versatile Method for Fast and Robust

Simulation

ACM Transactions on Graphics, 2025, Vol.44, No.3, Article No.29, 1 - 18.

Jia-Ming Lu, Shi-Min Hu

Simulating stiff materials has long posed formidable challenges for traditional

physics-based solvers. Explicit time integration schemes demand

prohibitively small time steps, while implicit methods necessitate an excessive

number of iterations to converge, often yielding visually objectionable

transient configurations in the early iterations, severely limiting their

real-time applicability. Position-based dynamics techniques can efficiently

simulate stiff constraints but are inherently restricted to constraint-based

formulations, curtailing their versatility.We present "Reliable Iterative Dynamics"

(RID), a novel iterative solver that introduces a dual descent framework

with theoretical guarantees for visual reliability at each iteration,

while maintaining fast and stable convergence even for extremely stiff systems.

Our core innovation is an iterativemethod that circumvents the need

for numerous iterations or small time steps to handle stiff materials robustly.

Experimental evaluations demonstrate our method¡¯s ability to handle

a wide range of materials, from soft to infinitely rigid, while producing

visually reliable results even with large time steps and minimal iterations.

The versatile formulation allows seamless integration with diverse simulation

paradigms like the finite element method, material point method,

smoothed particle hydrodynamics, and incremental potential contact for

applications ranging from elastic body simulations to fluids and collision

handling.

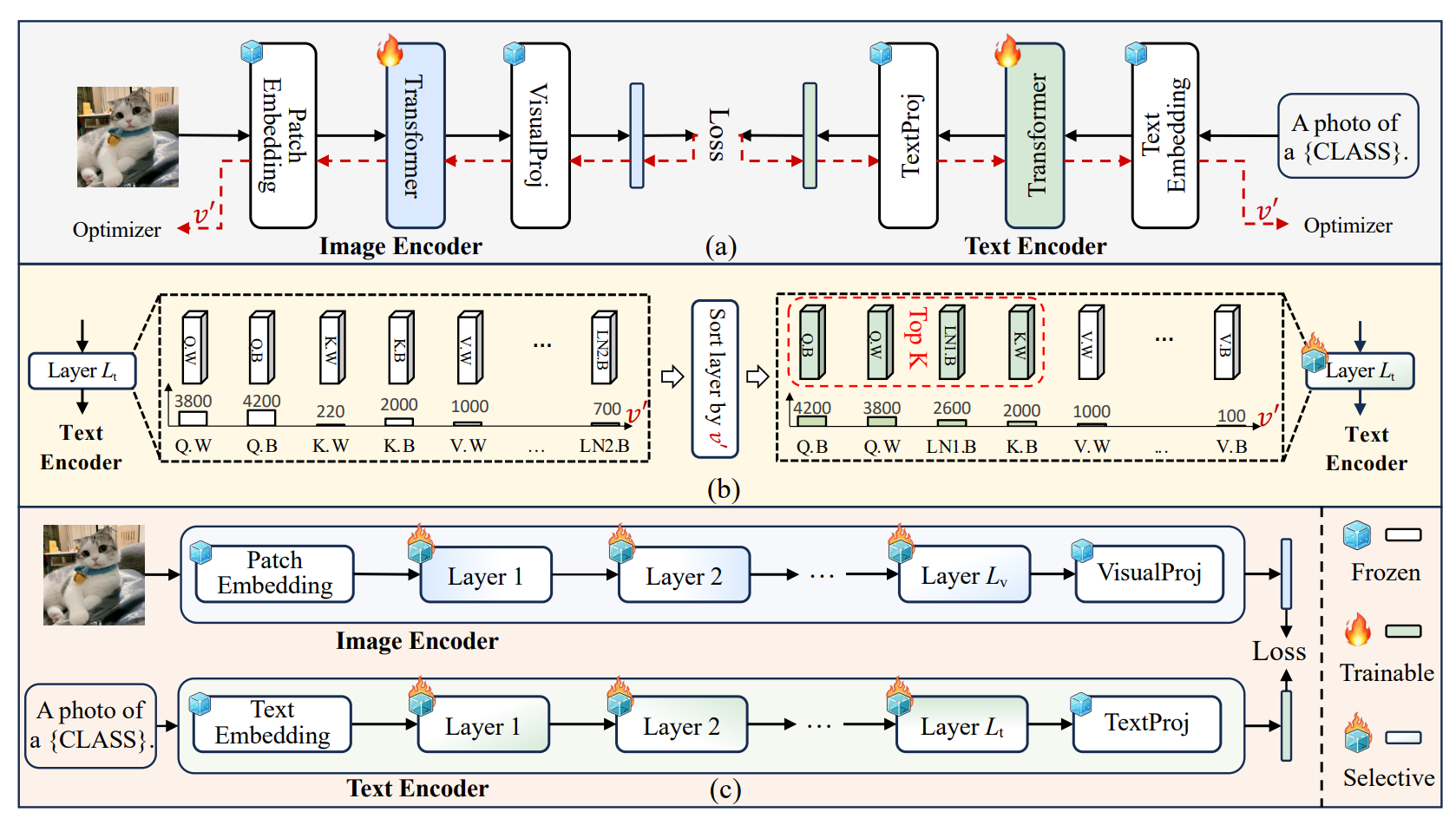

Adaptive Parameter Selection for Tuning Vision-Language Models

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025, 4280-4290.

Yi Zhang, Yi-Xuan Deng, Meng-Hao Guo, Shi-Min Hu

Vision-language models (VLMs) like CLIP have been widely used in various specific tasks.

Parameter-efficient fine-tuning (PEFT) methods, such as prompt and adapter tuning,

have become key techniques for adapting these models to specific domains.

However, existing approaches rely on prior knowledgeto manually identify the locations

requiring fine-tuning.Adaptively selecting which parameters in VLMs should be tuned remains

unexplored. In this paper, we propose CLIP with Adaptive Selective Tuning (CLIP-AST),

which can be used to automatically select critical parameters in VLMs for fine-tuning

for specific tasks.It opportunely leveragesthe adaptive learning rate in the optimizer

and improves model performance without extra parameter overhead. We conduct extensive

experiments on 13 benchmarks, such as ImageNet, Food101, Flowers102, etc,with different

settings, including few-shot learning, base-to-novel class generalization, and

out-of-distribution. The results show that CLIP-AST consistently outperforms the original

CLIP model as well as its variantsand achieves state-of-the-art (SOTA) performance in all

cases. For example, with the 16-shot learning, CLIP-AST surpasses GraphAdapter and PromptSRC

by 3.56% and 2.20% in average accuracy on 11 datasets, respectively. Code will be

publicly available.

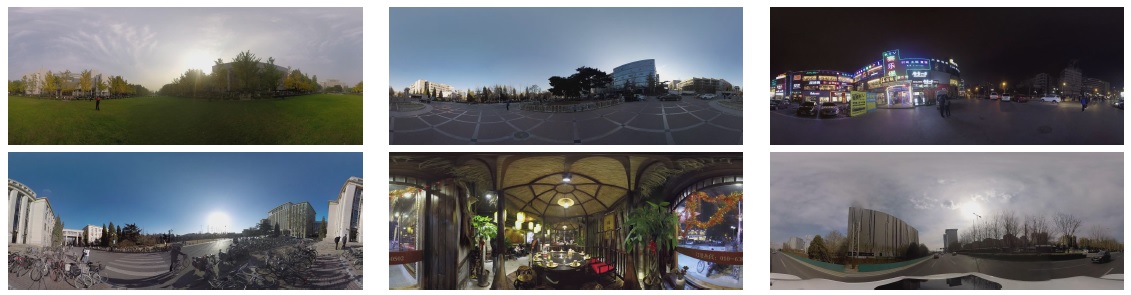

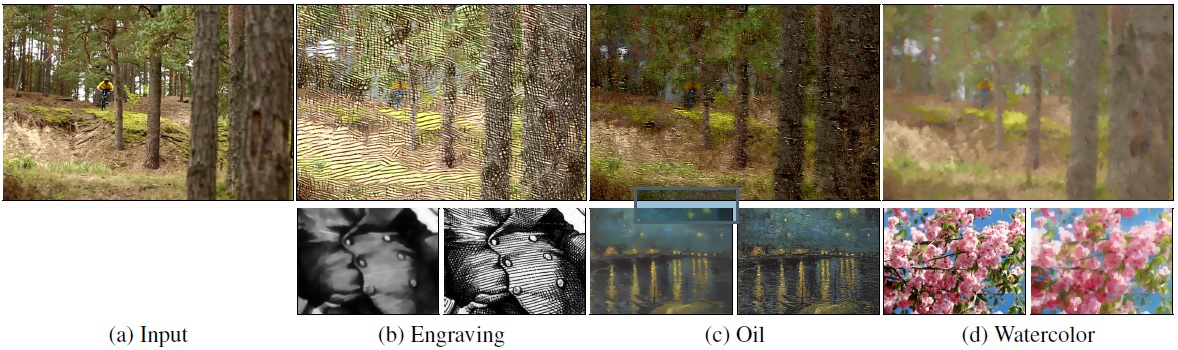

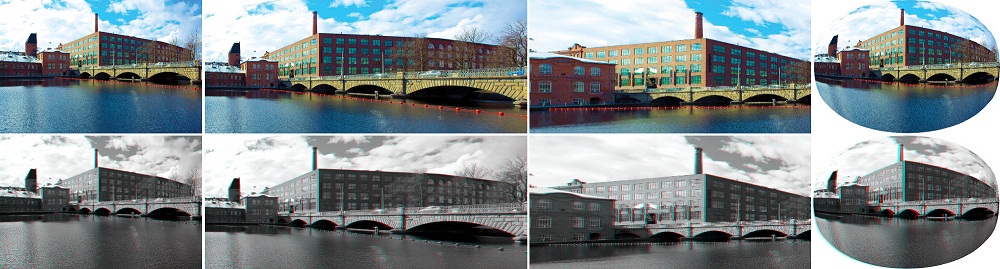

Splatter-360: Generalizable 360? Gaussian Splatting for Wide-baseline

Panoramic Images

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025, 21590-21598.

Zheng Chen, Chenming Wu, Zhelun Shen, Chen Zhao, Weicai Ye, Haocheng Feng, Errui Ding, Song-Hai Zhang

Vision-language models (VLMs) like CLIP have been widely used in various specific tasks.

Parameter-efficient fine-tuning (PEFT) methods, such as prompt and adapter tuning,

have become key techniques for adapting these models to specific domains.

However, existing approaches rely on prior knowledgeto manually identify the locations

requiring fine-tuning.Adaptively selecting which parameters in VLMs should be tuned remains

unexplored. In this paper, we propose CLIP with Adaptive Selective Tuning (CLIP-AST),

which can be used to automatically select critical parameters in VLMs for fine-tuning

for specific tasks.It opportunely leveragesthe adaptive learning rate in the optimizer

and improves model performance without extra parameter overhead. We conduct extensive

experiments on 13 benchmarks, such as ImageNet, Food101, Flowers102, etc,with different

settings, including few-shot learning, base-to-novel class generalization, and

out-of-distribution. The results show that CLIP-AST consistently outperforms the original

CLIP model as well as its variantsand achieves state-of-the-art (SOTA) performance in all

cases. For example, with the 16-shot learning, CLIP-AST surpasses GraphAdapter and PromptSRC

by 3.56% and 2.20% in average accuracy on 11 datasets, respectively. Code will be

publicly available.

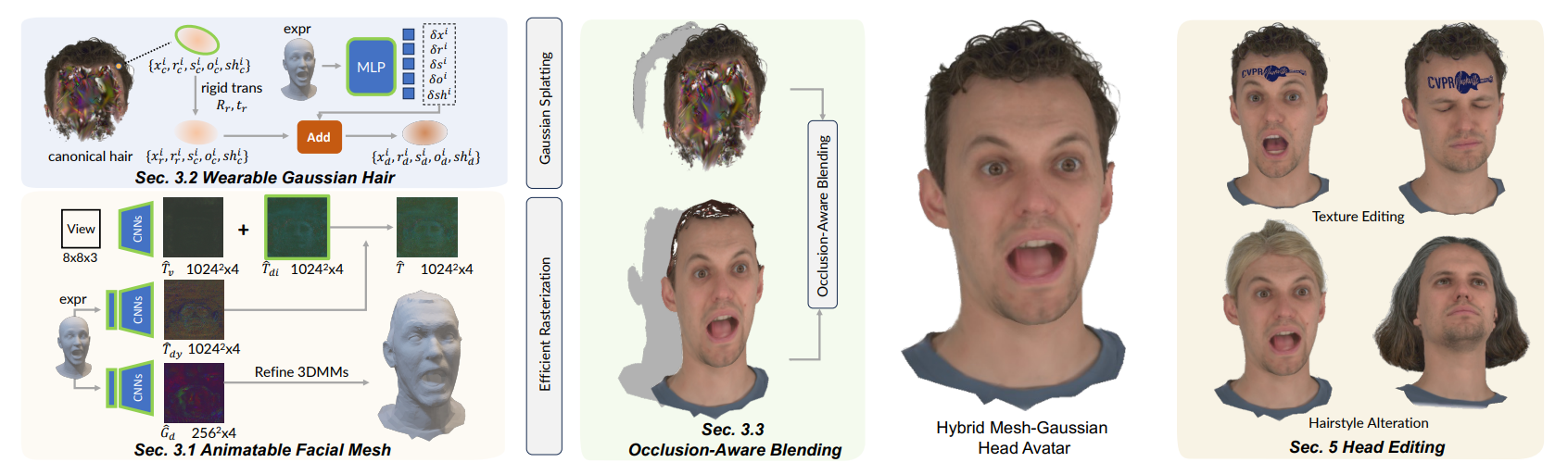

MeGA: Hybrid Mesh-Gaussian Head Avatar for High-Fidelity Rendering and Head Editing

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025, 26274-26284.

Cong Wang, Di Kang, Heyi Sun, Shenhan Qian, Zixuan Wang, Linchao Bao, Song-Hai Zhang

Creating high-fidelity head avatars from multi-view videos is essential for many AR/VR applications.

However, current methods often struggle to achieve high-quality renderings across all head components

(e.g., skin vs. hair) due to the limitations of using one single representation for elements with

varying characteristics. In this paper, we introduce a Hybrid Mesh-Gaussian Head Avatar (MeGA)

that models different head components with more suitable representations. Specifically,

we employ an enhanced FLAME mesh for the facial representation and predict a UV displacement

map to provide per-vertex offsets for improved personalized geometric details.

To achieve photorealistic rendering, we use deferred neural rendering to obtain facial

colors and decompose neural textures into three meaningful parts. For hair modeling,

we first build a static canonical hair using 3D Gaussian Splatting. A rigid transformation

and an MLP-based deformation field are further applied to handle complex dynamic expressions.

Combined with our occlusion-aware blending, MeGA generates higher-fidelity renderings for

the whole head and naturally supports diverse downstream tasks. Experiments on the NeRSemble

dataset validate the effectiveness of our designs, outperforming previous state-of-the-art

methods and enabling versatile editing capabilities, including hairstyle alteration and texture editing.

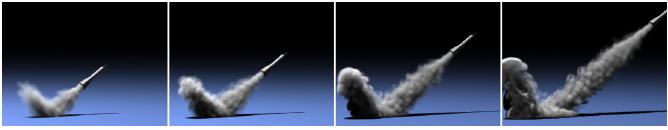

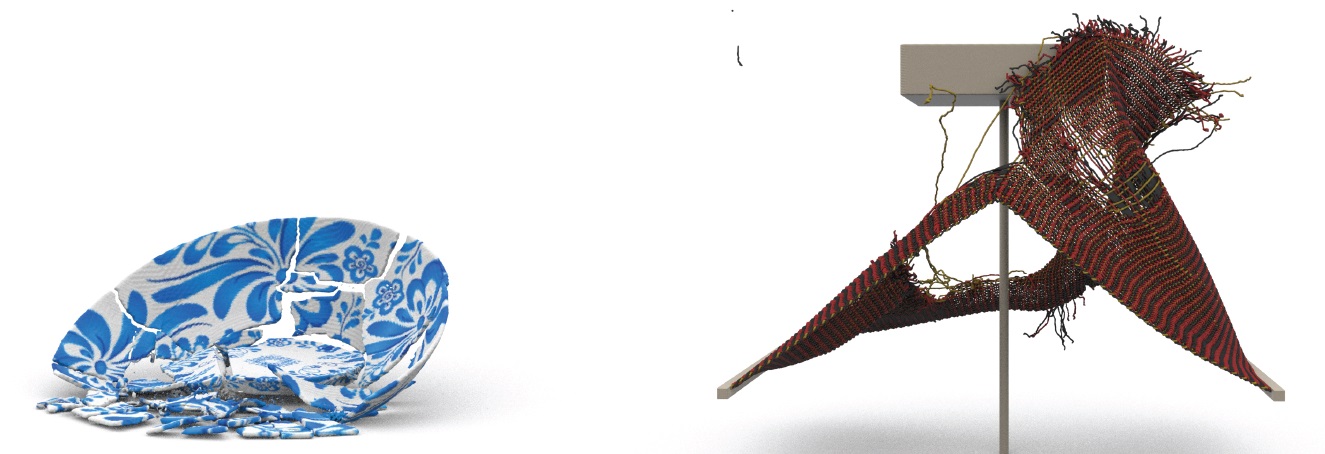

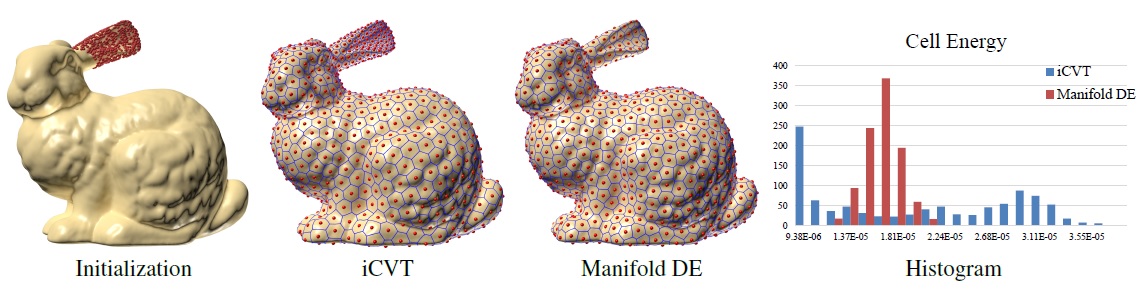

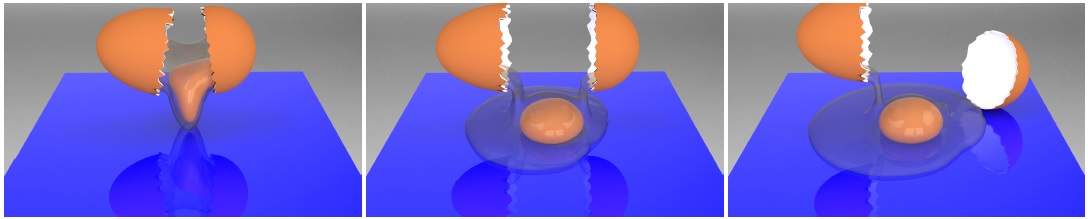

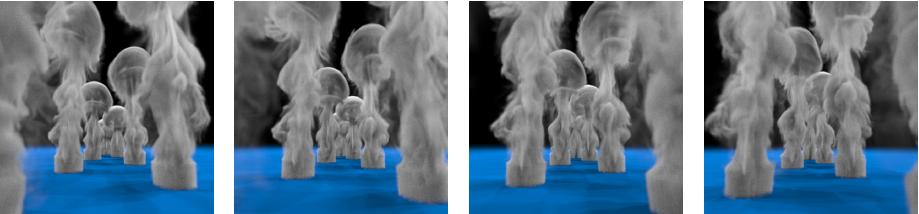

Implicit Bonded Discrete Element Method with Manifold Optimization

ACM Transactions on Graphics, 2025, Vol.44, No.1, Article No.9, 1 - 17.

Jia-Ming Lu, Geng-Chen Cao, Chenfeng Li, Shi-Min Hu

This paper proposes a novel simulation approach that combines implicit integration with the

Bonded Discrete Element Method (BDEM) to achieve faster, more stable and more accurate

fracture simulation. The new method leverages the eiciency of implicit schemes in dynamic

simulation and the versatility of BDEM in fracture modelling. Speciically, an

optimization-based integrator for BDEM is introduced and combined with a manifold optimization

approach to accelerate the solution process of the quaternion-constrained system. Our

comparative experiments indicate that our method ofers better scale consistency and more

realistic collision efects than FEM and MPM fragmentation approaches. Additionally, our method

achieves a computational speedup of 2.1 ~ 9.8 times over explicit BDEM methods.

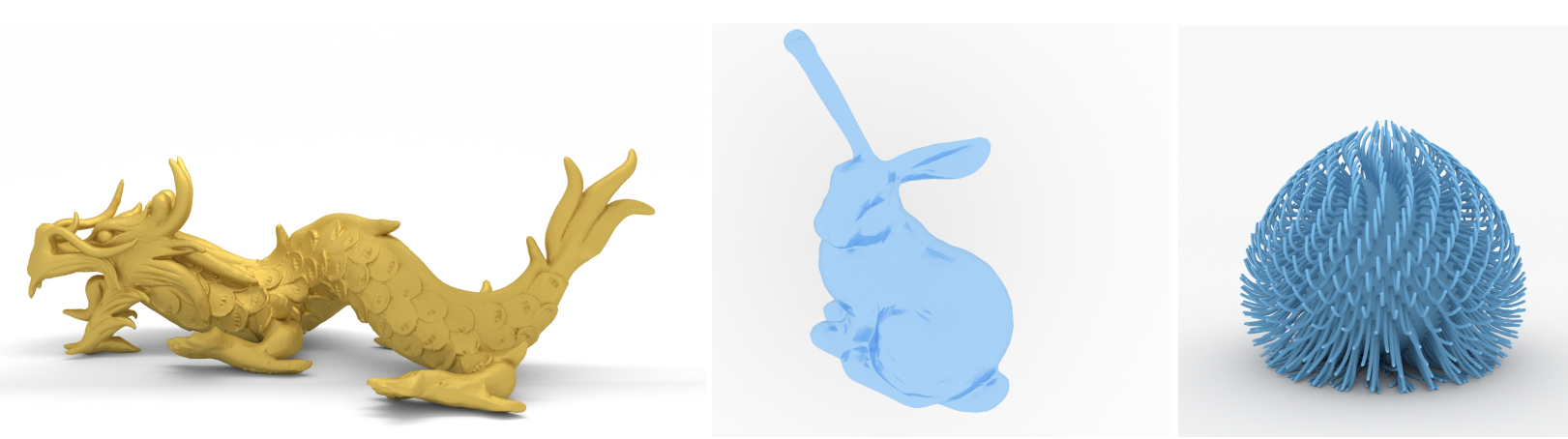

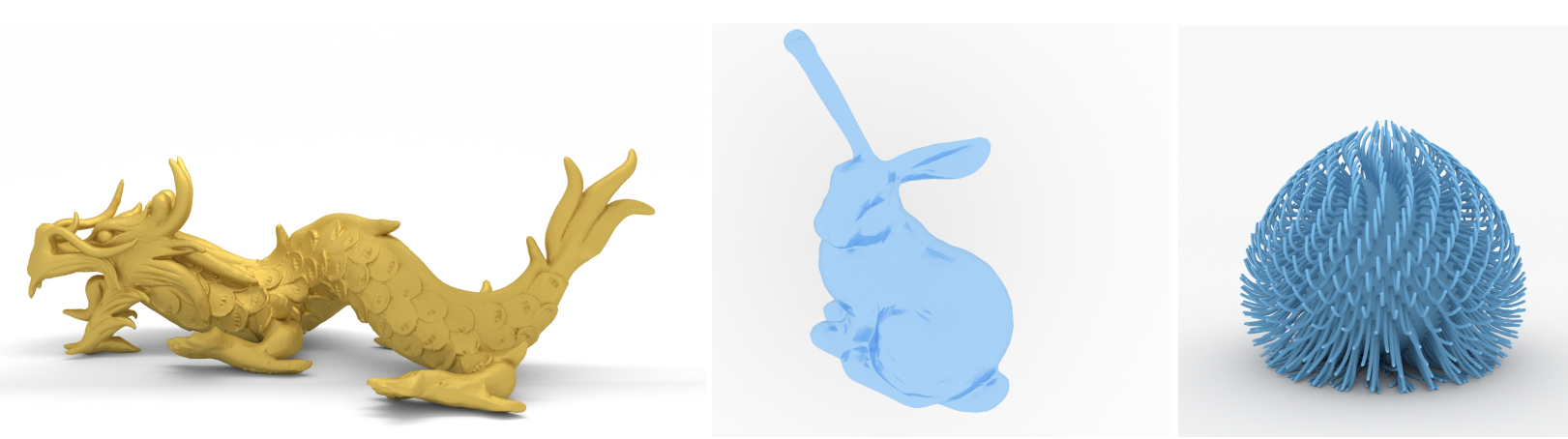

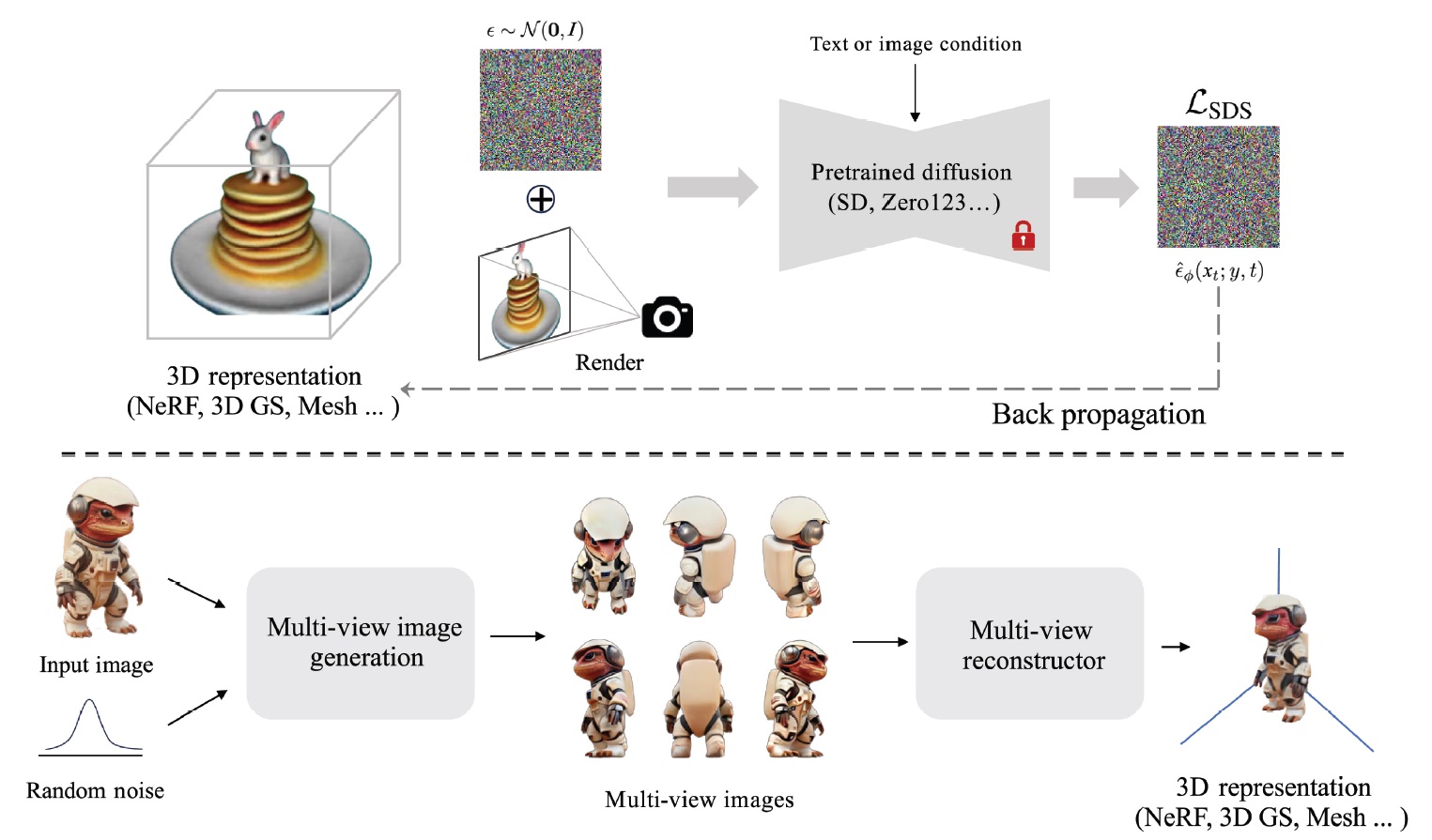

Diffusion models for 3D generation: A survey

Computational Visual Media, 2025, Vol. 11, No.1, 1-28.

Chen Wang, Hao-Yang Peng, Ying-Tian Liu, Jiatao Gu, Shi-Min Hu

Denoising diffusion models have demonstrated tremendous success in modeling data

distributions and synthesizing high-quality samples. In the 2D image domain,

they have become the state-of-the-art and are capable of generating

photo-realistic images with high controllability. More recently, researchers

have begun to explore how to utilize diffusion models to generate 3D data,

as doing so has more potential in real-world applications. This requires careful

design choices in two key ways: identifying a suitable 3D representation and

determining how to apply the diffusion process. In this survey, we provide the

first comprehensive review of diffusion models for manipulating 3D content,

including 3D generation, reconstruction, and 3D-aware image synthesis.

We classify existing methods into three major categories: 2D space diffusion with

pretrained models, 2D space diffusion without pretrained models, and 3D space

diffusion. We also summarize popular datasets used for 3D generation with diffusion

models. Along with this survey, we maintain a repository

https://github.com/cwchenwang/awesome-3d-diffusion

to track the latest relevant papers and codebases. Finally, we pose current

challenges for diffusion models for 3D generation, and suggest future research directions.

2024

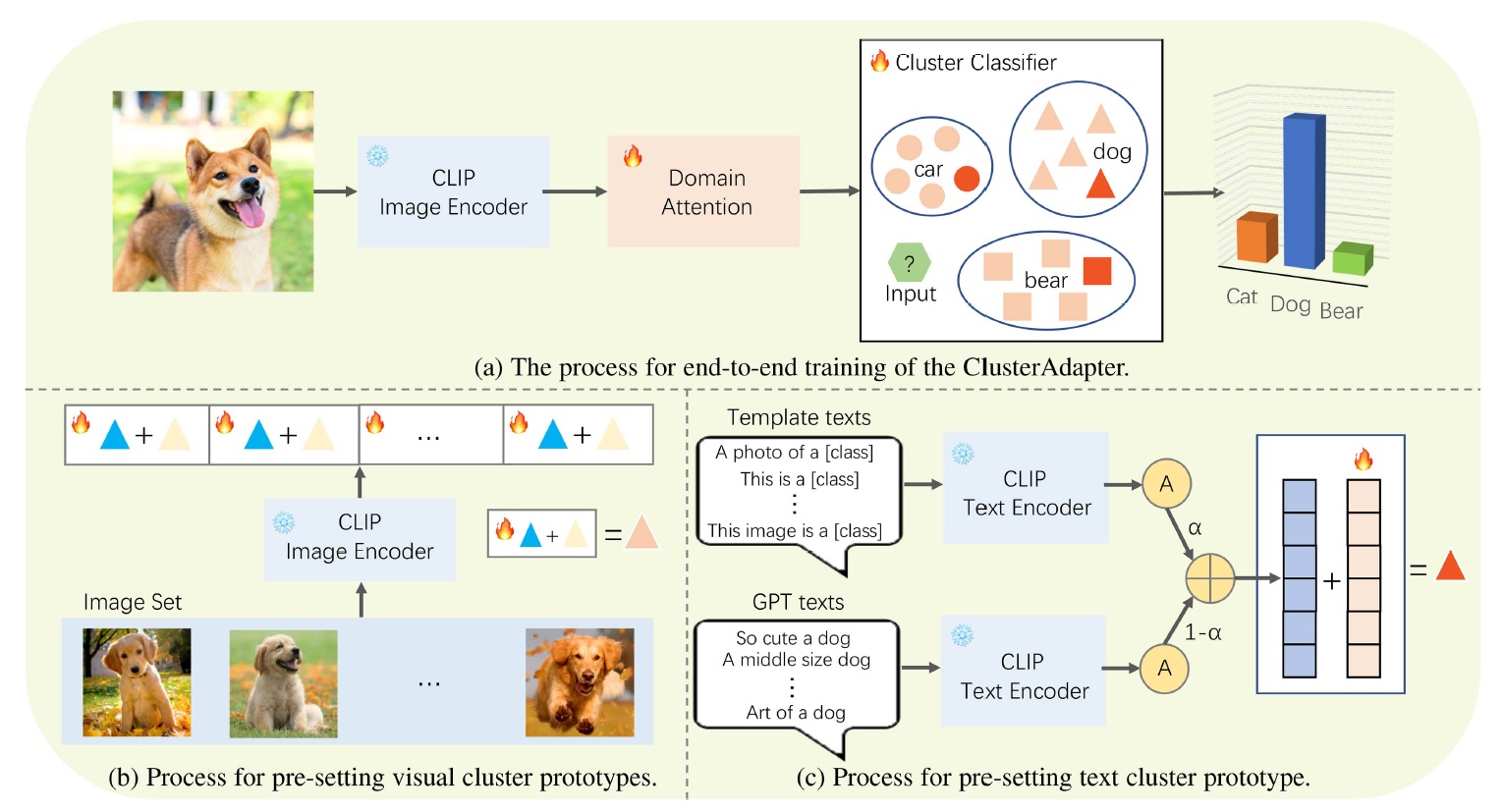

Tuning Vision-Language Models With Multiple Prototypes Clustering

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024, Vol. 46, No. 12, 11186-11199.

Meng-Hao Guo, Yi Zhang, Tai-Jiang Mu, Sharon X. Huang, Shi-Min Hu

Attention mechanisms, especially self-attention, have played an increasingly important role

in deep feature representation for visual tasks. Self-attention updates the feature at each

position by computing a weighted sum of features using pair-wise affinities across all positions

to capture the long-range dependency within a single sample. However, self-attention has

quadratic complexity and ignores potential correlation between different samples.

This article proposes a novel attention mechanism which we call external attention ,

based on two external, small, learnable, shared memories, which can be implemented easily

by simply using two cascaded linear layers and two normalization layers; it conveniently

replaces self-attention in existing popular architectures. External attention has linear

complexity and implicitly considers the correlations between all data samples. We further

incorporate the multi-head mechanism into external attention to provide an all-MLP architecture,

external attention MLP (EAMLP), for image classification. Extensive experiments on

image classification, object detection, semantic segmentation, instance segmentation, image

generation, and point cloud analysis reveal that our method provides results comparable or

superior to the self-attention mechanism and some of its variants, with much lower

computational and memory costs.

DIScene: Object Decoupling and Interaction Modeling for Complex Scene Generation

ACM SIGGRAPH Asia 2024 Conference Papers, 2024, Article No.101, 1-12.

Xiao-Lei Li, Haodong Li, Hao-Xiang Chen, Tai-Jiang Mu, and Shi-Min Hu

This paper reconsiders how to distill knowledge from pretrained 2D diffusion

models to guide 3D asset generation, in particular to generate complex 3D scenes:

it should accept varied inputs, i.e., texts or images, to allow for flexible

expression of requirement; objects in the scene should be style-consistent

and decoupled with clearly modeled interactions, benefiting downstream tasks.

We propose DIScene, a novel method for this task. It represents the entire 3D

scene with a learnable structured scene graph: each node explicitly models an

object with its appearance, textual description, transformation, geometry as

a mesh attached with surface-aligned Gaussians; the graph's edges model object

interactions. With this new representation, objects are optimized in the

canonical space and interactions between objects are optimized by object-aware

rendering to avoid wrong back-propagation. Extensive experiments demonstrate the

significant utility and superiority of our approach and that DIScene can greatly

facilitate 3D content creation tasks.

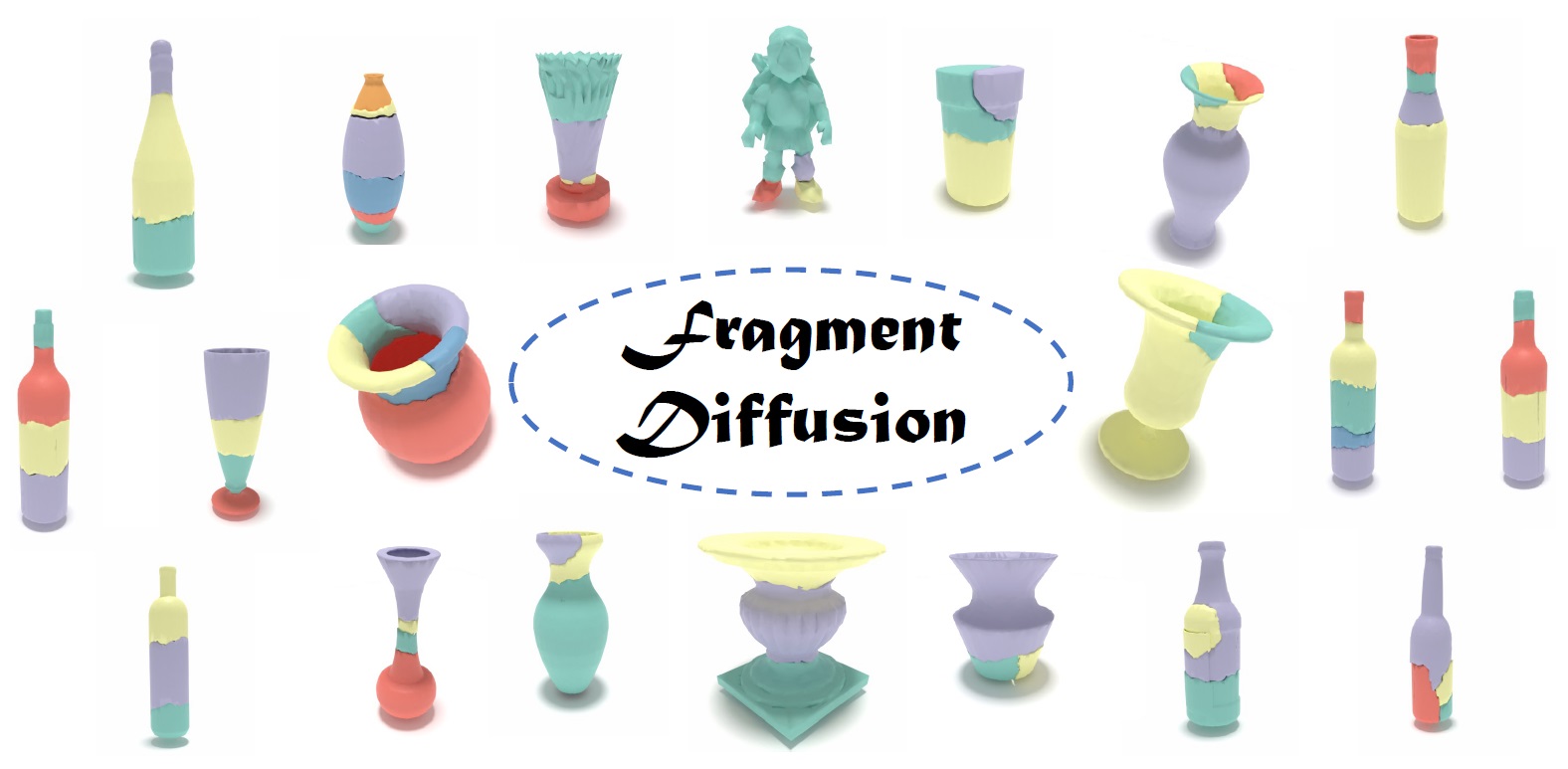

FragmentDiff: A Diffusion Model for Fractured Object Assembly

ACM SIGGRAPH Asia 2024 Conference Papers, 2024, Article No. 58, Pages 1 - 12.

Qun-Ce Xu, Hao-Xiang Chen, Jiacheng Hua, Xiaohua Zhan, Yong-Liang Yang, Tai-Jiang Mu

Fractured object reassembly is a challenging problem in computer vision and graphics

with applications in industrial manufacturing and archaeology. Traditional methods

based on shape descriptors and geometric registration often struggle with ambiguous

features, resulting in lower accuracy. Recent data-driven methods are inherently affected

by the representation and learning ability of the trained models. To address this,

we propose a novel approach inspired by diffusion models and transformers. Our method

applies diffusion denoising via a transformer to predict the pose parameter of each fragment,

taking advantage of their global feature correlation and pose prior learning abilities.

We evaluate our approach on a fractured object dataset and demonstrate superior performance

compared to state-of-the-art methods. Our method offers a promising solution for accurate

and robust fractured object reassembly, advancing the field in complex shape analysis and

assembly tasks.

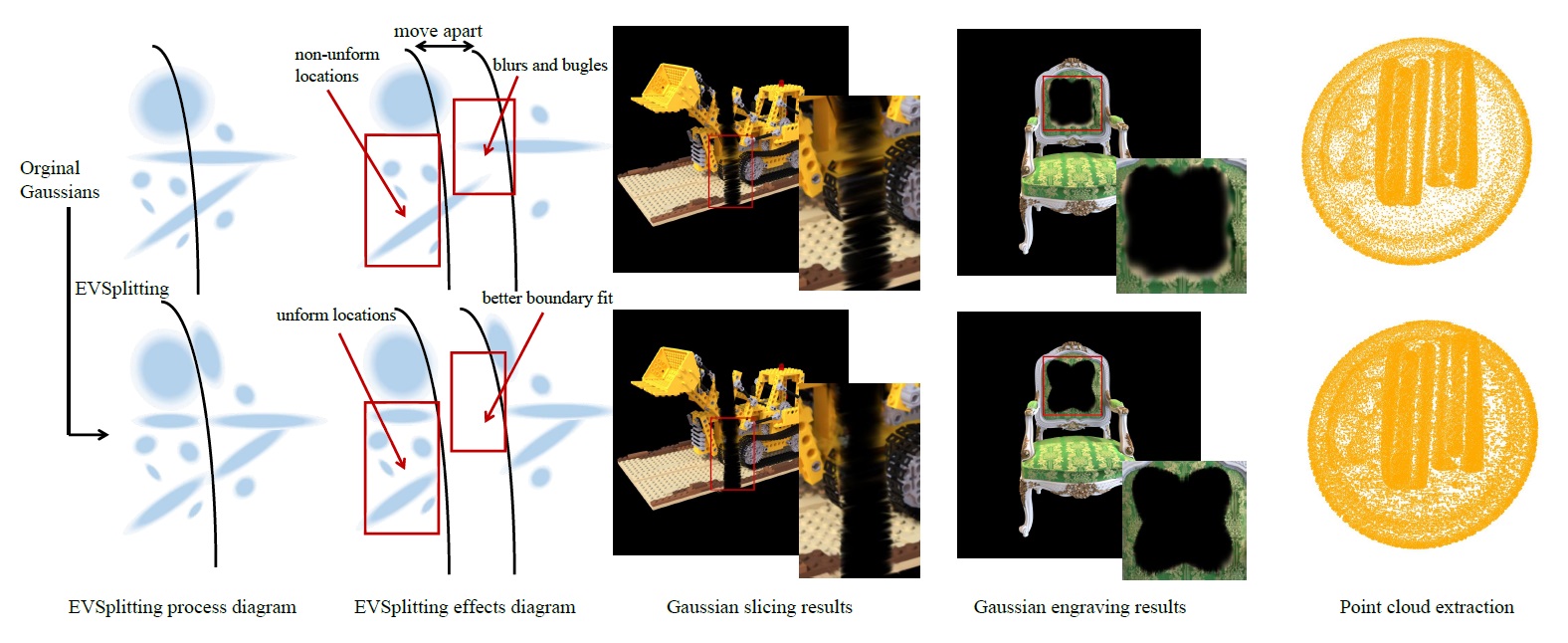

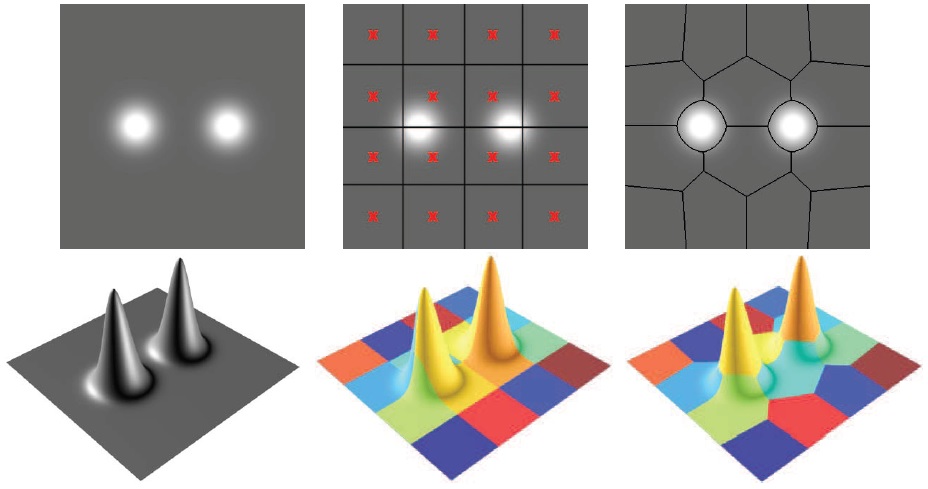

EVSplitting: An Efficient and Visually Consistent Splitting Algorithm for 3D Gaussian Splatting

ACM SIGGRAPH Asia 2024 Conference Papers, 2024, Article No. 35, Pages 1 - 11.

Qi-Yuan Feng, Geng-Chen Cao, Hao-Xiang Chen, Qun-Ce Xu, Tai-Jiang Mu, Ralph Martin, Shi-Min Hu

This paper presents EVSplitting, an efficient and visually consistent splitting algorithm for

3D Gaussian Splatting (3DGS). It is designed to make operating 3DGS as easy and effective as

other 3D explicit representations, readily for industrial productions. The challenges of above

target are: 1) The huge number and complex attributes of 3DGS make it tough to explicitly

operate on 3DGS in a real-time and learning-free manner; 2) The visual effect of 3DGS is

very difficult to maintain during explicit operations and 3) The anisotropism of Gaussian

always leads to blurs and artifacts. As far as we know, no prior work can address these

challenges well. In this work, we introduce a direct and efficient 3DGS splitting algorithm

to solve them. Specifically, we formulate the 3DGS splitting as two minimization problems

that aim to ensure visual consistency and reduce Gaussian overflow across boundary (splitting

plane), respectively. Firstly, we impose conservations on the zero-, first- and second-order

moments of the weighted Gaussian distribution to guarantee visual consistency. Secondly, we

reduce the boundary overflow with a special constraint on the aforementioned conservations.

With these conservations and constraints, we derive a closed-form solution for the 3DGS splitting

problem. This yields an easy-to-implement, plug-and-play, efficient and fundamental tool,

benefiting various downstream applications of 3DGS.

CharacterGen: Efficient 3D Character Generation from Single Images with Multi-View Pose Canonicalization

ACM Transactions on Graphics, 2024, Vol. 43, No. 4, article number: 84, 1-13, ACM SIGGRAPH.

Hao-Yang Peng, Jia-Peng Zhang, Meng-Hao Guo, Yan-Pei Cao, Shi-Min Hu

In the field of digital content creation, generating high-quality 3D characters

from single images is challenging, especially given the complexities of various

body poses and the issues of self-occlusion and pose ambiguity. In this paper,

we present CharacterGen, a framework developed to efficiently generate 3D characters.

CharacterGen introduces a streamlined generation pipeline along with an

image-conditioned multi-view diffusion model. This model effectively

calibrates input poses to a canonical form while retaining key attributes of

the input image, thereby addressing the challenges posed by diverse poses.

A transformer-based, generalizable sparse-view reconstruction model is the

other core component of our approach, facilitating the creation of detailed 3D

models from multi-view images. We also adopt a texture-back-projection strategy

to produce high-quality texture maps. Additionally, we have curated a dataset of

anime characters, rendered in multiple poses and views, to train and evaluate our

model. Our approach has been thoroughly evaluated through quantitative and

qualitative experiments, showing its proficiency in generating 3D characters

with high-quality shapes and textures, ready for downstream applications such

as rigging and animation.

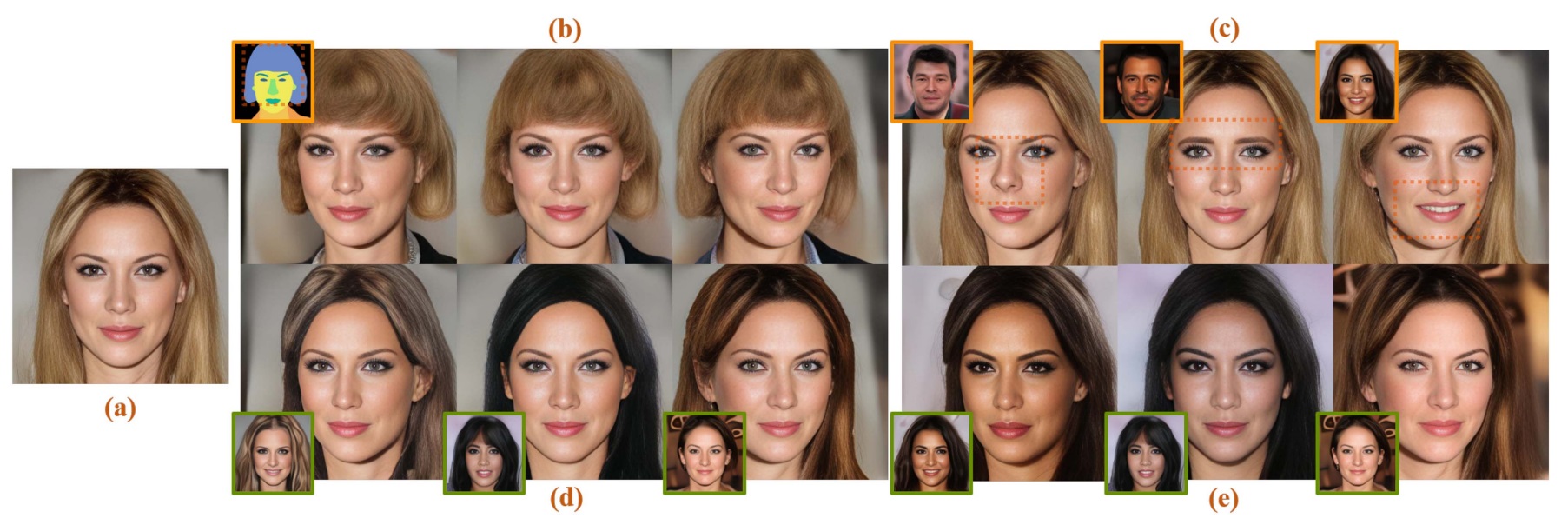

LC-NeRF: Local Controllable Face Generation in Neural Radiance Field

IEEE Transactions on Visualization and Computer Graphics, 2024, Vol. 30, No. 8, 5437-5448.

Wen-Yang Zhou, Lu Yuan, Shu-Yu Chen, Lin Gao, Shi-Min Hu

3D face generation has achieved high visual quality and 3D consistency thanks

to the development of neural radiance fields (NeRF). However, these methods model

the whole face as a neural radiance field, which limits the controllability of the

local regions. In other words, previous methods struggle to independently control

local regions, such as the mouth, nose, and hair. To improve local controllability in

NeRF-based face generation, we propose LC-NeRF, which is composed of a Local Region

Generators Module (LRGM) and a Spatial-Aware Fusion Module (SAFM) , allowing for

geometry and texture control of local facial regions. The LRGM models different

facial regions as independent neural radiance fields and the SAFM is responsible

for merging multiple independent neural radiance fields into a complete representation.

Finally, LC-NeRF enables the modification of the latent code associated with each

individual generator, thereby allowing precise control over the corresponding

local region. Qualitative and quantitative evaluations show that our method provides

better local controllability than state-of-the-art 3D-aware face generation methods.

A perception study reveals that our method outperforms existing state-of-the-art methods

in terms of image quality, face consistency, and editing effects. Furthermore, our method

exhibits favorable performance in downstream tasks, including real image editing

and text-driven facial image editing.

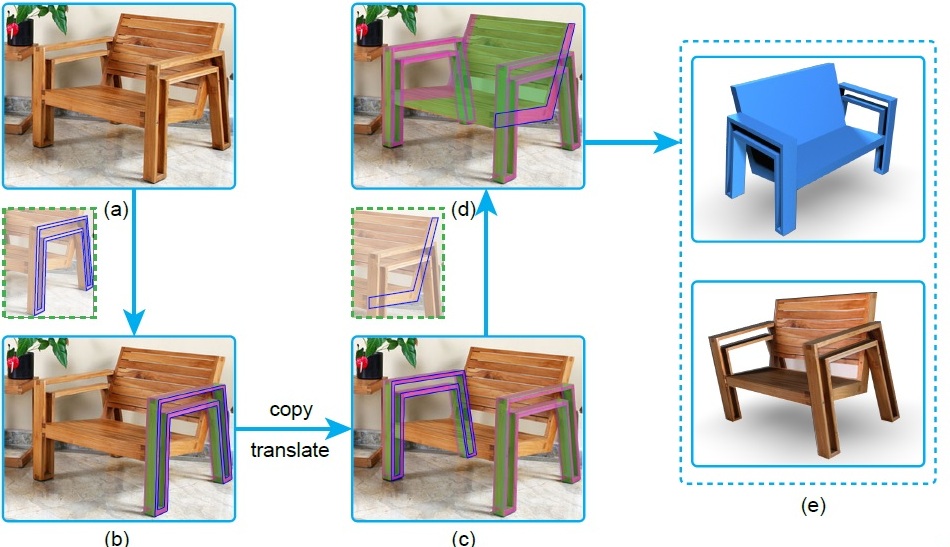

SceneDirector: Interactive Scene Synthesis by Simultaneously Editing Multiple Objects in Real-Time

IEEE Transactions on Visualization and Computer Graphics, 2024, Vol. 30, No. 8, 4558-4569,.

Shao-Kui Zhang, Hou Tam, Yike Li, Ke-Xin Ren, Hongbo Fu, Song-Hai Zhang

3D face generation has achieved high visual quality and 3D consistency thanks

to the development of neural radiance fields (NeRF). However, these methods model

the whole face as a neural radiance field, which limits the controllability of the

local regions. In other words, previous methods struggle to independently control

local regions, such as the mouth, nose, and hair. To improve local controllability in

NeRF-based face generation, we propose LC-NeRF, which is composed of a Local Region

Generators Module (LRGM) and a Spatial-Aware Fusion Module (SAFM) , allowing for

geometry and texture control of local facial regions. The LRGM models different

facial regions as independent neural radiance fields and the SAFM is responsible

for merging multiple independent neural radiance fields into a complete representation.

Finally, LC-NeRF enables the modification of the latent code associated with each

individual generator, thereby allowing precise control over the corresponding

local region. Qualitative and quantitative evaluations show that our method provides

better local controllability than state-of-the-art 3D-aware face generation methods.

A perception study reveals that our method outperforms existing state-of-the-art methods

in terms of image quality, face consistency, and editing effects. Furthermore, our method

exhibits favorable performance in downstream tasks, including real image editing

and text-driven facial image editing.

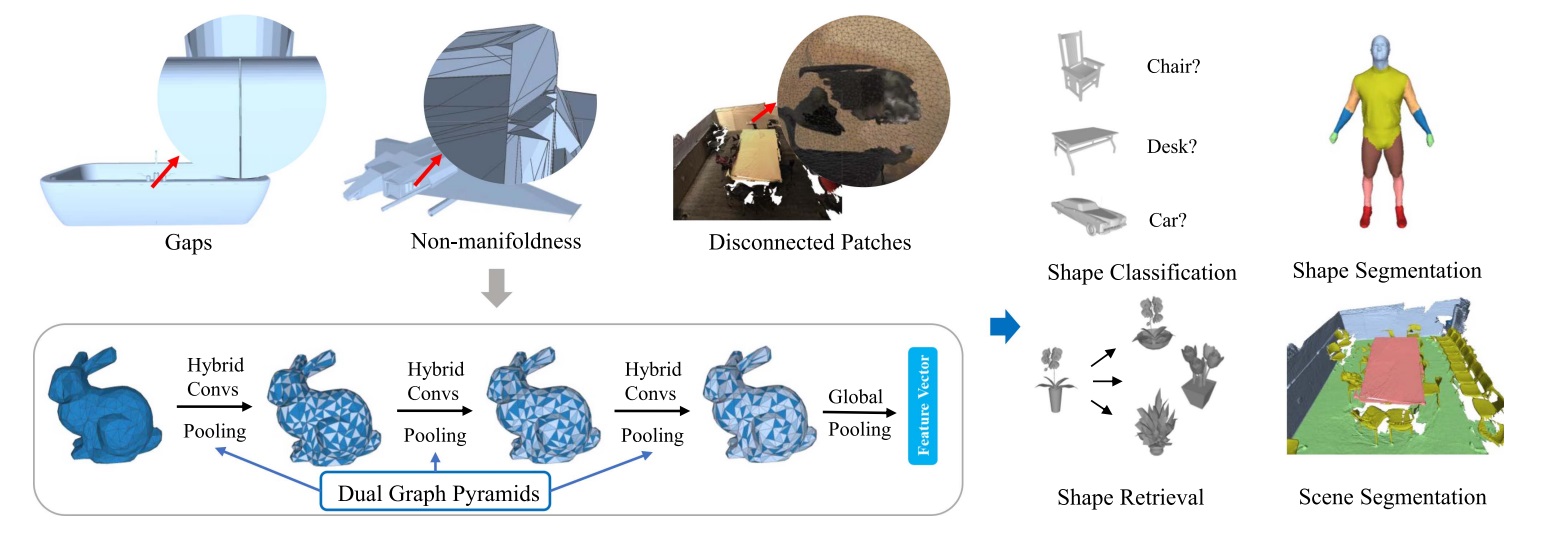

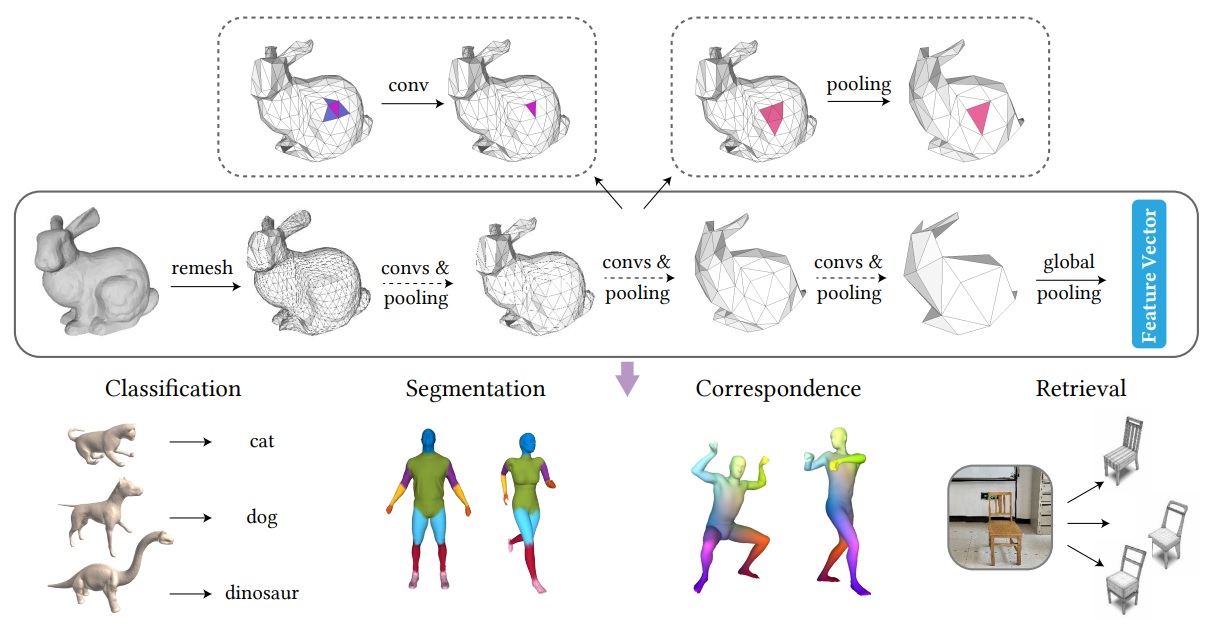

Mesh Neural Networks Based on Dual Graph Pyramids

IEEE Transactions on Visualization and Computer Graphics, 2024, Vol. 30, No. 7, 4211-4224.

Xiang-Li Li, Zheng-Ning Liu, Tuo Chen, Tai-Jiang Mu, Ralph R. Martin, Shi-Min Hu

Deep neural networks (DNNs) have been widely used for mesh processing in recent years.

However, current DNNs can not process arbitrary meshes efficiently. On the one hand,

most DNNs expect 2-manifold, watertight meshes, but many meshes, whether manually

designed or automatically generated, may have gaps, non-manifold geometry, or other

defects. On the other hand, the irregular structure of meshes also brings challenges

to building hierarchical structures and aggregating local geometric information,

which is critical to conduct DNNs. In this paper, we present DGNet, an efficient,

effective and generic deep neural mesh processing network based on dual graph pyramids;

it can handle arbitrary meshes. First, we construct dual graph pyramids for meshes to

guide feature propagation between hierarchical levels for both downsampling and

upsampling. Second, we propose a novel convolution to aggregate local features on

the proposed hierarchical graphs. By utilizing both geodesic neighbors and

euclidean neighbors, the network enables feature aggregation both within local

surface patches and between isolated mesh components. Experimental results demonstrate

that DGNet can be applied to both shape analysis and large-scale scene understanding.

Furthermore, it achieves superior performance on various benchmarks, including

ShapeNetCore, HumanBody, ScanNet and Matterport3D. Code and models will be available

at https://github.com/li-xl/DGNet .

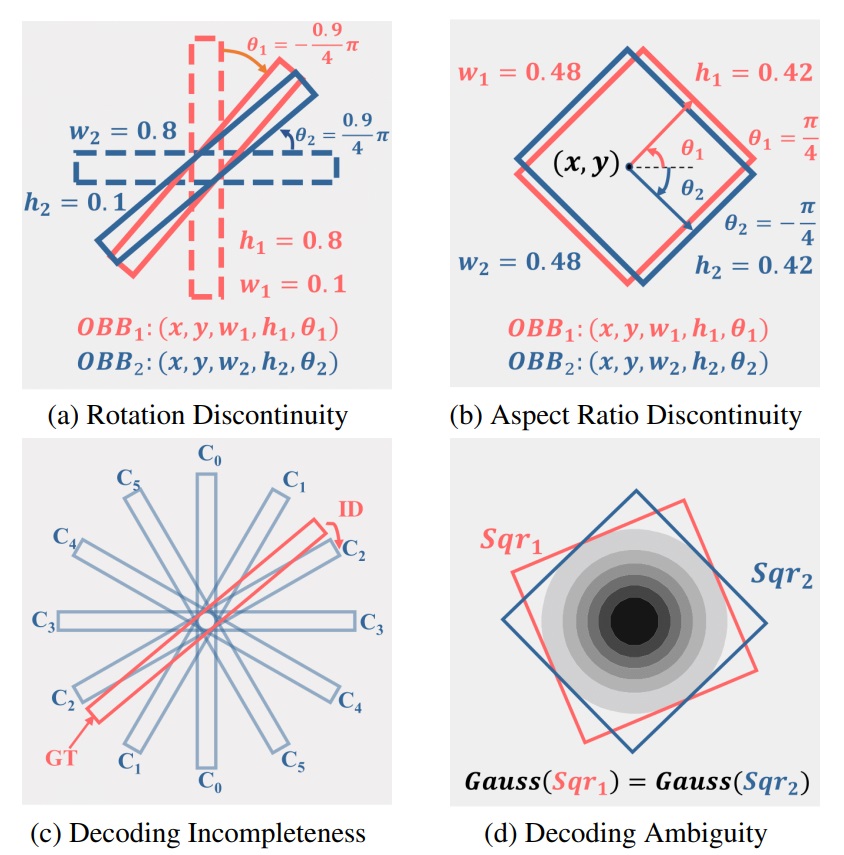

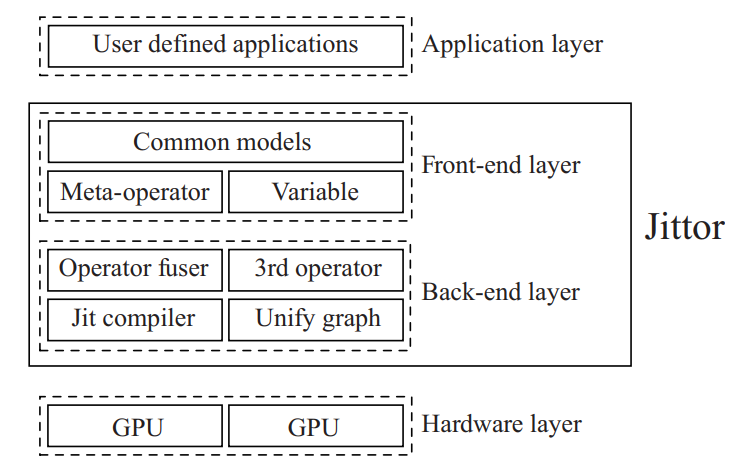

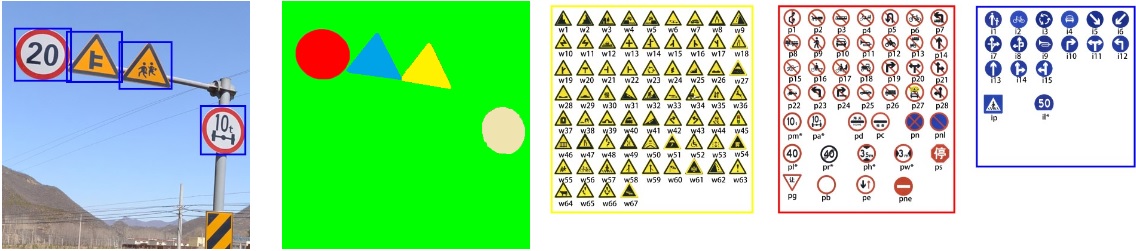

Theoretically Achieving Continuous Representation of Oriented Bounding Boxes

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024, 16912-16922.

Zi-Kai Xiao, Guo-Ye Yang, Xue Yang, Tai-Jiang Mu, Junchi Yan, Shi-Min Hu

Considerable efforts have been devoted to Oriented Ob-ject Detection (OOD).

However, one lasting issue regarding the discontinuity in Oriented Bounding Box (OBB)

rep-resentation remains unresolved, which is an inherent bot-tleneck for extant OOD

methods. This paper endeavors to completely solve this issue in a theoretically

guaranteed manner and puts an end to the ad-hoc efforts in this di-rection. Prior

studies typically can only address one of the two cases of discontinuity: rotation

and aspect ratio, and often inadvertently introduce decoding discontinuity, e.g.

Decoding Incompleteness (DI) and Decoding Ambi-guity (DA) as discussed in literature.

Specifically, we pro-pose a novel representation method called Continuous OBB (COBB),

which can be readily integrated into existing de-tectors e.g. Faster-RCNN as a

plugin. It can theoreti-cally ensure continuity in bounding box regression which

to our best knowledge, has not been achieved in literature for rectangle-based

object representation. For fairness and transparency of experiments, we have developed

a modu-larized benchmark based on the open-source deep learning framework Jittor's

detection toolbox JDetfor OOD evaluation. On the popular DOTA dataset, by integrating

Faster-RCNN as the same baseline model, our new method out-performs the peer method

Gliding Vertex by 1.13% mAP 50 (relative improvement 1.54%), and 2.46% mAP 75

(relative improvement 5.91%), without any tricks.

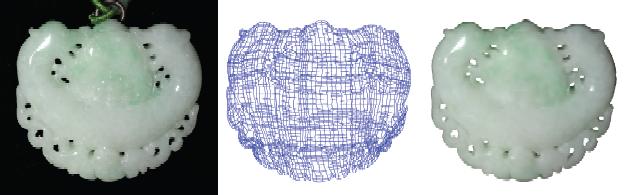

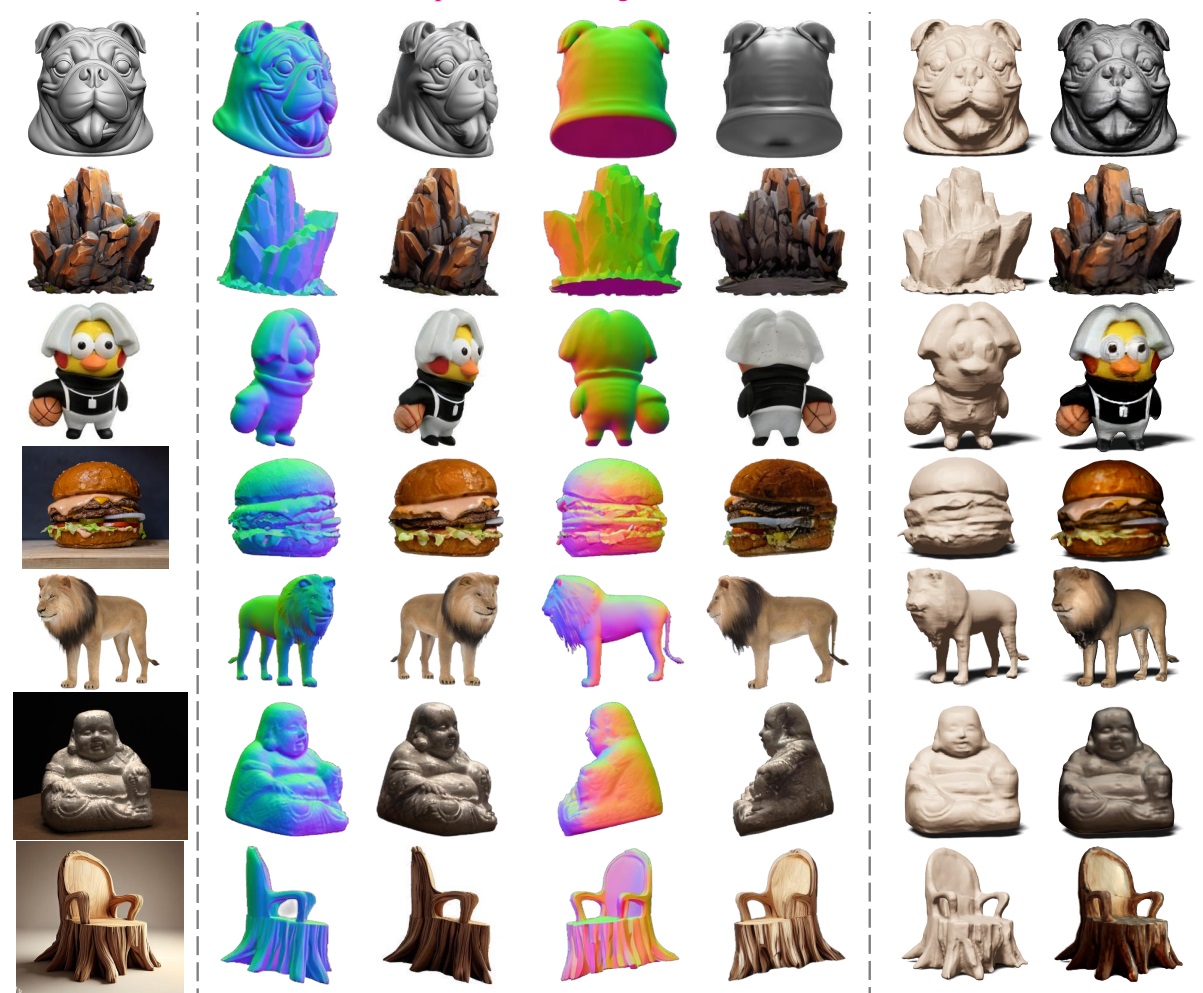

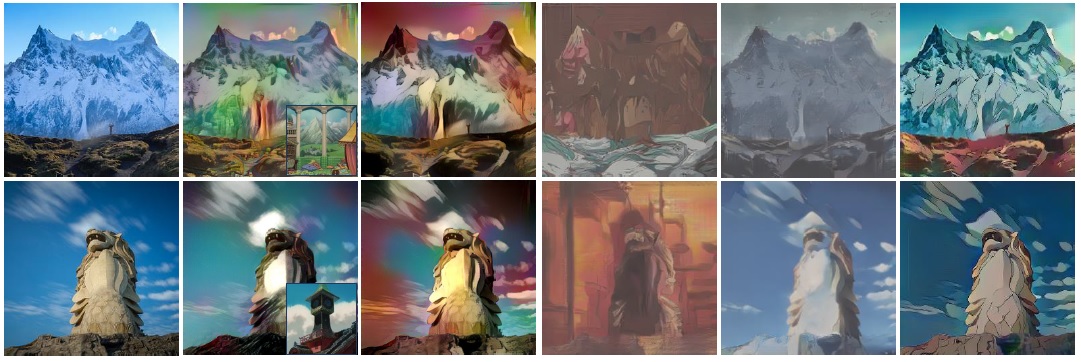

Wonder3D: Single Image to 3D Using Cross-Domain Diffusion

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024, 9970-9980.

Xiaoxiao Long, Yuan-Chen Guo, Cheng Lin, Yuan Liu, Zhiyang Dou, Lingjie Liu, Yuexin Ma, Song-Hai Zhang, Marc Habermann,

Christian Theobalt, Wenping Wang

In this work, we introduce Wonder3D, a novel method for efficiently generating high-fidelity textured

meshes from single-view images. Recent methods based on Score Distillation Sampling (SDS)

have shown the potential to recover 3D geometry from 2D diffusion priors, but they typically

suffer from time-consuming per-shape optimization and inconsistent geometry. In contrast,

certain works di-rectly produce 3D information via fast network inferences, but their results

are often of low quality and lack geometric details. To holistically improve the quality,

consistency, and efficiency of single-view reconstruction tasks, we pro-pose a cross-domain

diffusion model that generates multi-view normal maps and the corresponding color images.

To ensure the consistency of generation, we employ a multi-view cross-domain attention

mechanism that facilitates information exchange across views and modalities. Lastly, we

introduce a geometry-aware normal fusion algorithm that extracts high-quality surfaces

from the multi-view 2D representations in only 2 ~ 3 minutes. Our extensive evaluations

demonstrate that our method achieves high-quality reconstruction results, robust generalization,

and good efficiency compared to prior works.

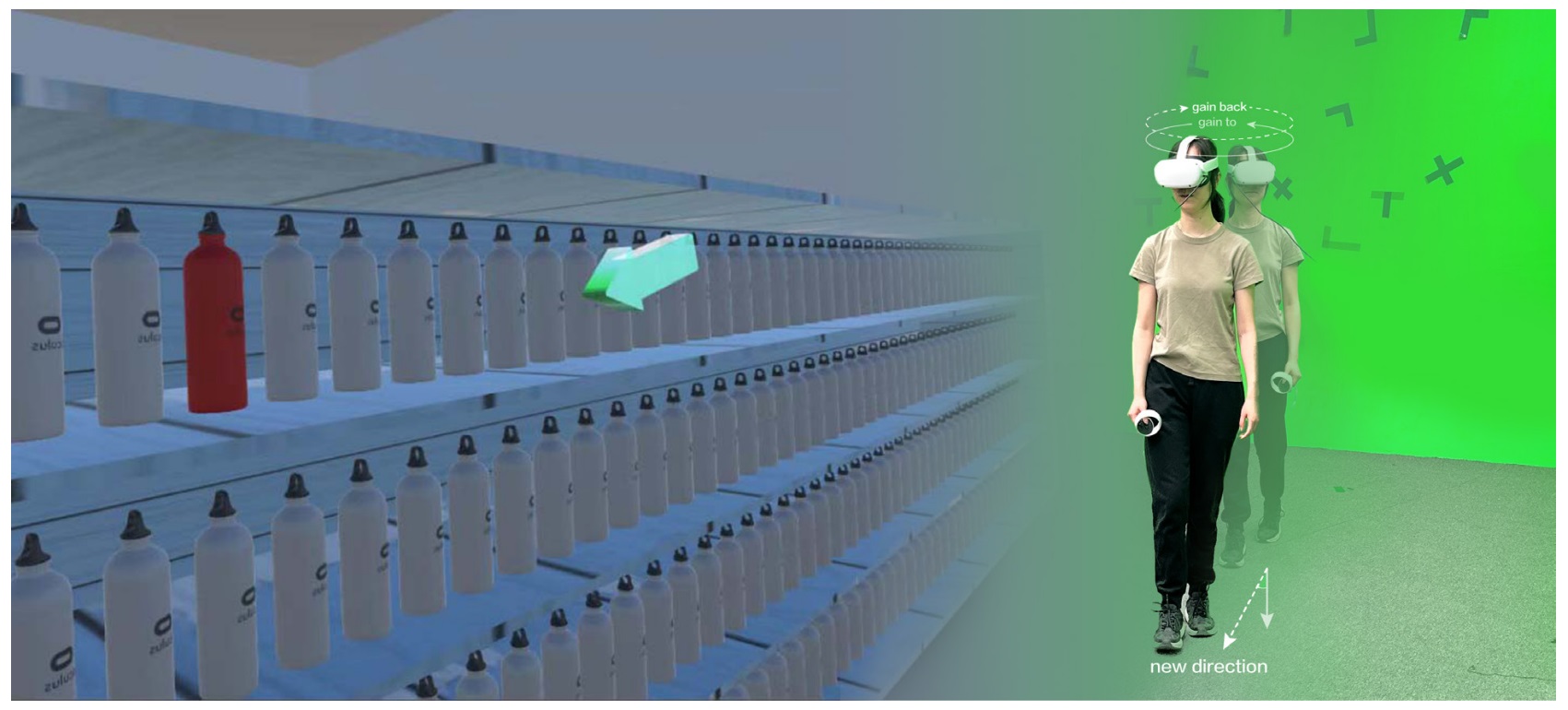

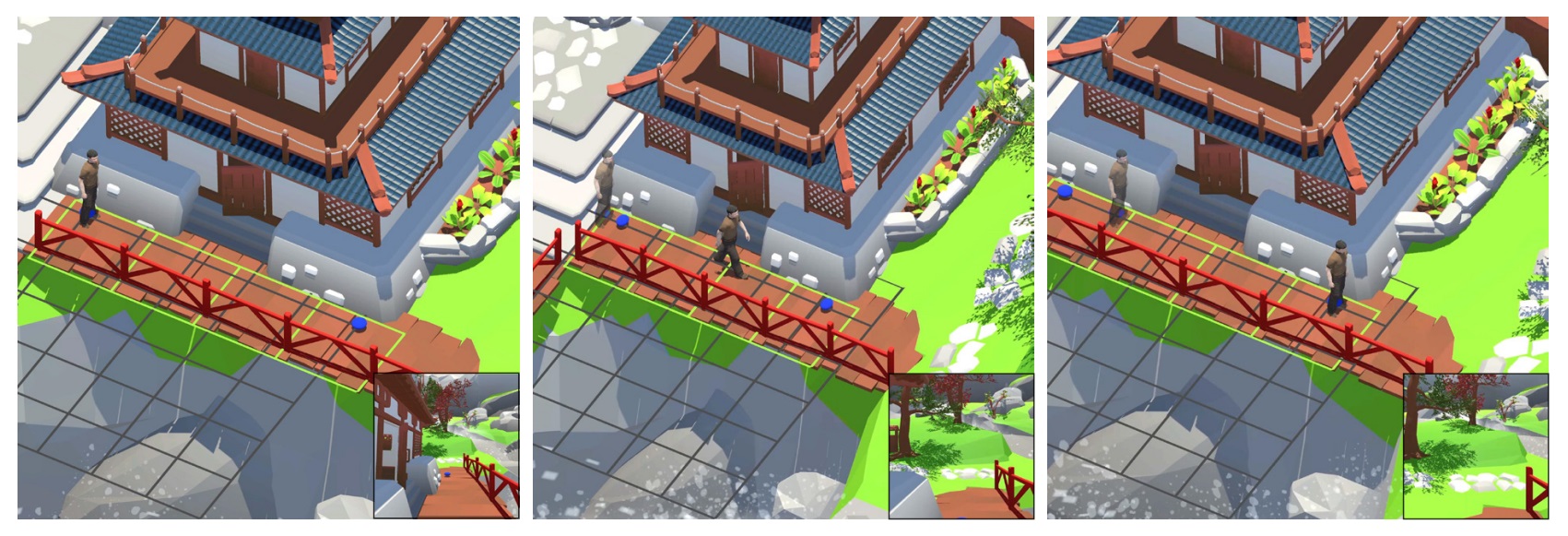

BiRD: Using Bidirectional Rotation Gain Differences to Redirect Users during Back-and-forth Head Turns in Walking

IEEE Transactions on Visualization and Computer Graphics, Vol. 30, No. 4, 1916-1926.

Sen-Zhe Xu, Fiona Xiao Yu Chen, Ran Gong, Fang-Lue Zhang, Song-Hai Zhang

Redirected walking (RDW) facilitates user navigation within expansive

virtual spaces despite the constraints of limited physical spaces. It employs

discrepancies between human visual-proprioceptive sensations, known as gains,

to enable the remapping of virtual and physical environments. In this paper,

we explore how to apply rotation gain while the user is walking. We propose

to apply a rotation gain to let the user rotate by a different angle when

reciprocating from a previous head rotation, to achieve the aim of steering

the user to a desired direction. To apply the gains imperceptibly based on

such a Bidirectional Rotation gain Difference (BiRD), we conduct both

measurement and verification experiments on the detection thresholds of

the rotation gain for reciprocating head rotations during walking. Unlike

previous rotation gains which are measured when users are turning around

in place (standing or sitting), BiRD is measured during users' walking.

Our study offers a critical assessment of the acceptable range of rotational

mapping differences for different rotational orientations across the user's

walking experience, contributing to an effective tool for redirecting users

in virtual environments.

Spatial Contraction Based on Velocity Variation for Natural Walking in Virtual Reality

IEEE Transactions on Visualization and Computer Graphics, Vol. 30, No. 5, 2444-2453.

Sen-Zhe Xu, Kui Huang, Cheng-Wei Fan, Song-Hai Zhang

Virtual Reality (VR) offers an immersive 3D digital environment,

but enabling natural walking sensations without the constraints of physical space

remains a technological challenge. Previous VR locomotion methods, including

game controller, teleportation, treadmills, walking-in-place, and redirected

walking (RDW), have made strides towards overcoming this challenge. However,

these methods also face limitations such as possible unnaturalness,

additional hardware requirements, or motion sickness risks. This paper

introduces ¡°Spatial Contraction (SC)¡±, an innovative VR locomotion method

inspired by the phenomenon of Lorentz contraction in Special Relativity.

Similar to the Lorentz contraction, our SC contracts the virtual space

along the user's velocity direction in response to velocity variation.

The virtual space contracts more when the user's speed is high, whereas

minimal or no contraction happens at low speeds. We provide a virtual space

transformation method for spatial contraction and optimize the user experience

in smoothness and stability. Through SC, VR users can effectively traverse a

longer virtual distance with a shorter physical walking. Different from locomotion

gains, the spatial contraction effect is observable by the user and aligns

with their intentions, so there is no inconsistency between the user's

proprioception and visual perception. SC is a general locomotion method

that has no special requirements for VR scenes. The experimental results

of our live user studies in various virtual scenarios demonstrate that SC

has a significant effect in reducing both the number of resets and the physical

walking distance users need to cover. Furthermore, experiments have also

demonstrated that SC has the potential for integration with existing

locomotion techniques such as RDW.

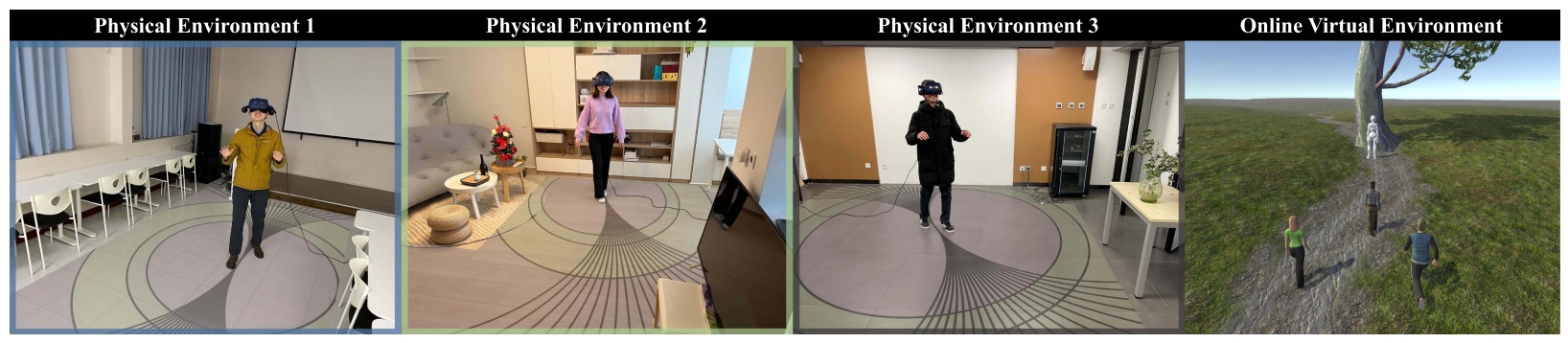

Multi-User Redirected Walking in Separate Physical Spaces for Online VR Scenarios

IEEE Transactions on Visualization and Computer Graphics, Vol. 30, No. 4, 1916-1926.

Sen-Zhe Xu, Jia-Hong Liu, Miao Wang, Fang-Lue Zhang, Song-Hai Zhang

With the recent rise of Metaverse, online multiplayer VR applications are becoming

increasingly prevalent worldwide. However, as multiple users are located in different

physical environments, different reset frequencies and timings can lead to serious

fairness issues for online collaborative/competitive VR applications. We propose a

novel multi-user RDW method that is able to significantly reduce

the overall reset number and give users a better immersive experience by providing

a fair exploration. Our key idea is to first find out the ¡±bottleneck¡± user that

may cause all users to be reset and estimate the time to reset given the users¡¯

next targets, and then redirect all the users to favorable poses during that maximized

bottleneck time to ensure the subsequent resets can be postponed as much as possible.

More particularly, we develop methods to estimate the time of possibly encountering

obstacles and the reachable area for a specific pose to enable the prediction of the

next reset caused by any user. Our experiments and user study found that our method

outperforms existing RDW methods in online VR applications.

Other publications in 2024

1. Tai-Jiang Mu, Ming-Yuan Shen, Yu-Kun Lai, Shi-Min Hu,

Learning Virtual View Selection for 3D Scene Semantic Segmentation,

IEEE Transactions on Image Processing, 2024, Vol. 33, 4159-4172.

2. Guo-Ye Yang, George Kiyohiro Nakayama, Zi-Kai Xiao, Tai-Jiang Mu, Xiaolei Huang, Shi-Min Hu,

Semantic-Aware Transformation-Invariant RoI Align,

AAAI 2024: 6486-6493.

3. Yi Zhang, Meng-Hao Guo, Miao Wang, Shi-Min Hu,

Exploring Regional Clues in CLIP for Zero-Shot Semantic Segmentation,

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024, 3270-3280.

4. Xin Yu, Yuan-Chen Guo, Yangguang Li, Ding Liang, Song-Hai Zhang, Xiaojuan Qi,

Text-to-3D with Classifier Score Distillation,

ICLR 2024: 6486-6493.

2023

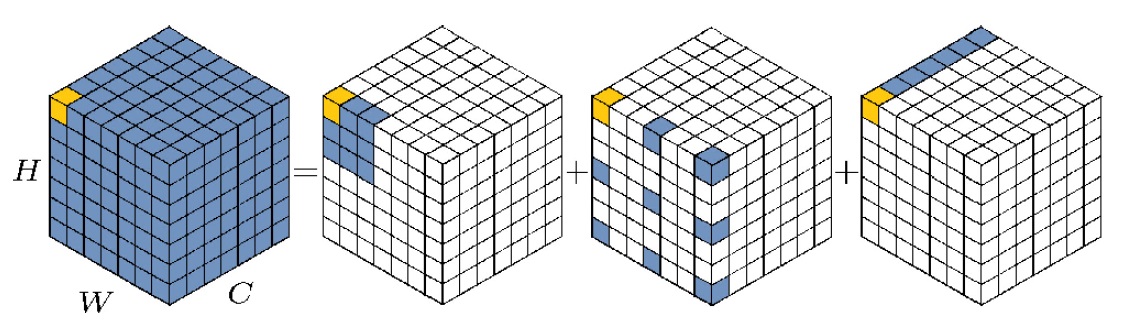

Visual attention network

Computational Visual Media, 2023, Vol. 9, No. 4, 733-752.

Meng-Hao Guo, Cheng-Ze Lu, Zheng-Ning Liu, Ming-Ming Cheng & Shi-Min Hu

While originally designed for natural language processing tasks, the self-attention

mechanism has recently taken various computer vision areas by storm. However,

the 2D nature of images brings three challenges for applying self-attention

in computer vision: (1) treating images as 1D sequences neglects their 2D structures;

(2) the quadratic complexity is too expensive for high-resolution images;

(3) it only captures spatial adaptability but ignores channel adaptability.

In this paper, we propose a novel linear attention named large kernel attention (LKA) to

enable self-adaptive and long-range correlations in self-attention while avoiding

its shortcomings. Furthermore, we present a neural network based on LKA, namely

Visual Attention Network (VAN). While extremely simple, VAN achieves comparable results

with similar size convolutional neural networks (CNNs) and vision transformers (ViTs)

in various tasks, including image classification, object detection, semantic segmentation,

panoptic segmentation, pose estimation, etc. For example, VAN-B6 achieves 87.8% accuracy

on ImageNet benchmark, and sets new state-of-the-art performance (58.2 PQ) for panoptic

segmentation. Besides, VAN-B2 surpasses Swin-T 4 mIoU (50.1 vs. 46.1) for semantic

segmentation on ADE20K benchmark, 2.6 AP (48.8 vs. 46.2) for object detection on COCO dataset.

It provides a novel method and a simple yet strong baseline for the community.

The code is available at https://github.com/Visual-Attention-Network.

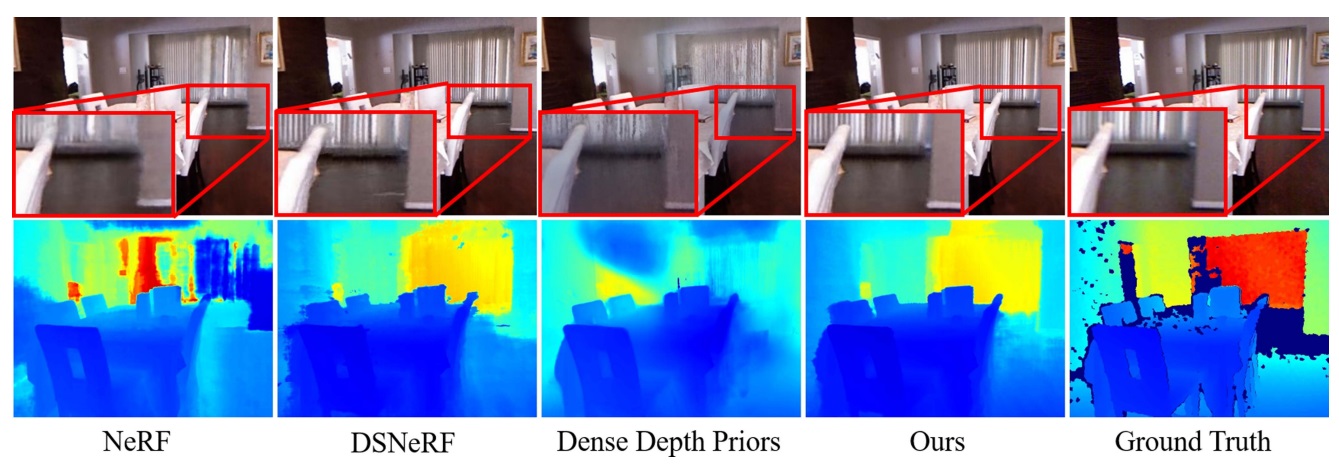

StructNeRF: Neural Radiance Fields for Indoor Scenes With Structural Hints

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, Vol. 45, No. 12, 15694-15705.

Zheng Chen, Chen Wang, Yuan-Chen Guo, Song-Hai Zhang

Neural Radiance Fields (NeRF) achieve photo-realistic view synthesis with densely

captured input images. However, the geometry of NeRF is extremely under-constrained

given sparse views, resulting in significant degradation of novel view synthesis quality.

Inspired by self-supervised depth estimation methods, we propose StructNeRF, a

solution to novel view synthesis for indoor scenes with sparse inputs. StructNeRF

leverages the structural hints naturally embedded in multi-view inputs to handle the

unconstrained geometry issue in NeRF. Specifically, it tackles the texture and

non-texture regions respectively: a patch-based multi-view consistent photometric

loss is proposed to constrain the geometry of textured regions; for non-textured

ones, we explicitly restrict them to be 3D consistent planes. Through the dense

self-supervised depth constraints, our method improves both the geometry and the view

synthesis performance of NeRF without any additional training on external data.

Extensive experiments on several real-world datasets demonstrate that StructNeRF

shows superior or comparable performance compared to state-of-the-art methods

(e.g. NeRF, DSNeRF, RegNeRF, Dense Depth Priors, MonoSDF, etc.) for indoor scenes

with sparse inputs both quantitatively and qualitatively.

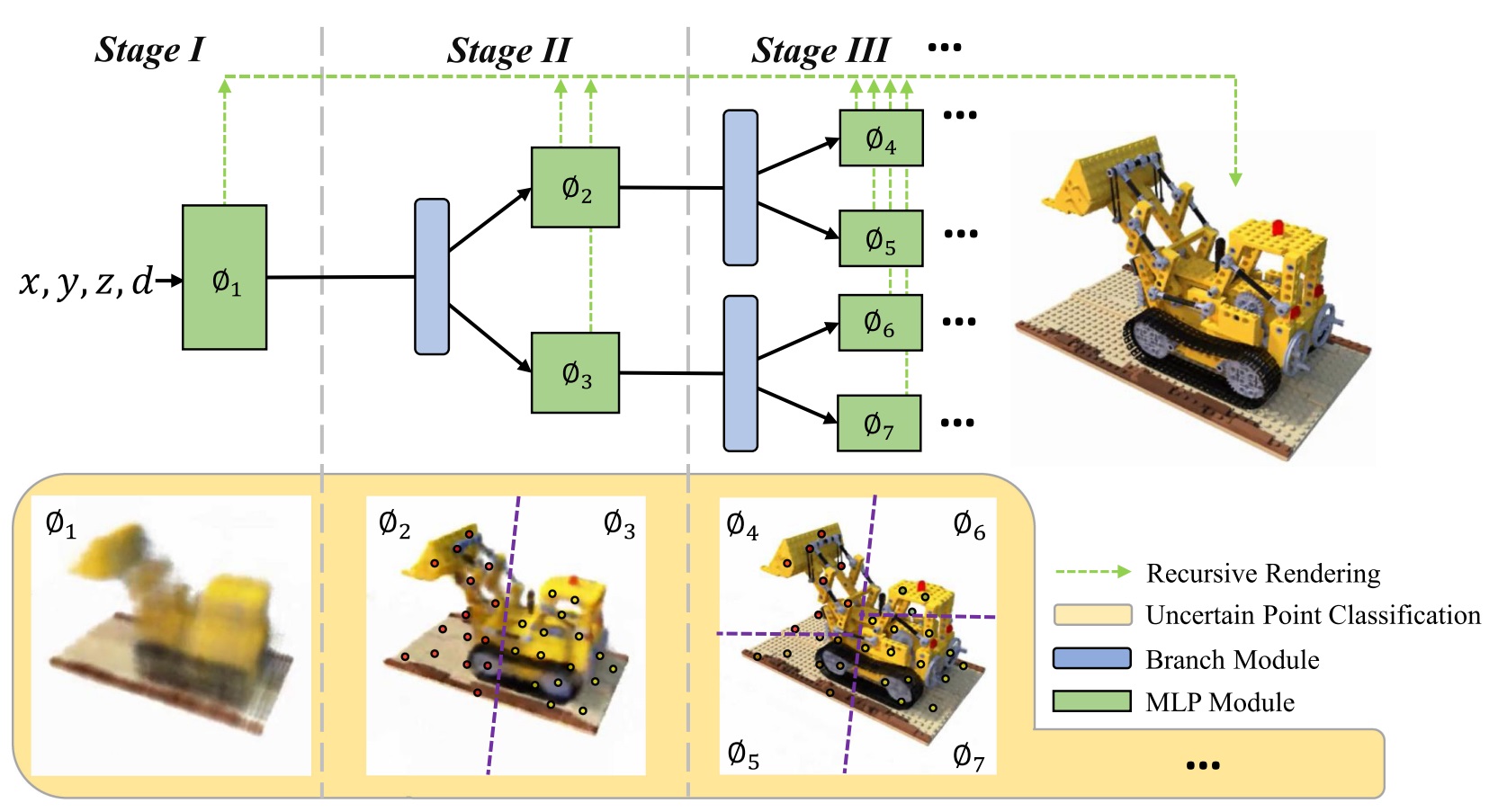

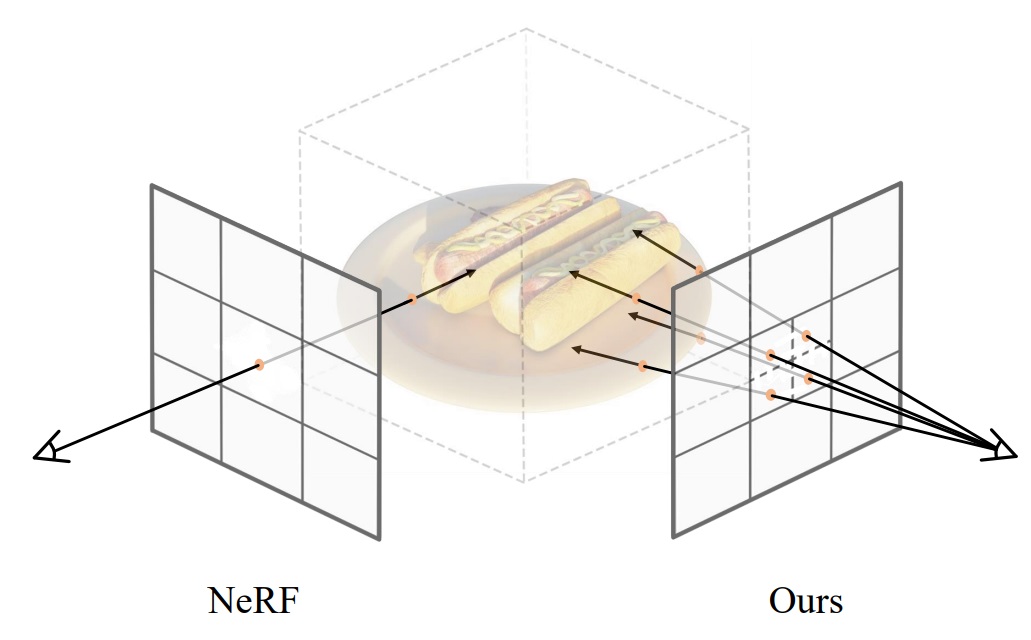

Recursive-NeRF: An Efficient and Dynamically Growing NeRF

IEEE Transactions on Visualization and Computer Graphics, 2023, Vol. 29, No. 12, 5124-5136.

Guo-Wei Yang, Wen-Yang Zhou, Hao-Yang Peng, Dun Liang, Tai-Jiang Mu, Shi-Min Hu

Neural Radiance Fields (NeRF) achieve photo-realistic view synthesis with densely

captured input images. However, the geometry of NeRF is extremely under-constrained

given sparse views, resulting in significant degradation of novel view synthesis quality.

Inspired by self-supervised depth estimation methods, we propose StructNeRF, a

solution to novel view synthesis for indoor scenes with sparse inputs. StructNeRF

leverages the structural hints naturally embedded in multi-view inputs to handle the

unconstrained geometry issue in NeRF. Specifically, it tackles the texture and

non-texture regions respectively: a patch-based multi-view consistent photometric

loss is proposed to constrain the geometry of textured regions; for non-textured

ones, we explicitly restrict them to be 3D consistent planes. Through the dense

self-supervised depth constraints, our method improves both the geometry and the view

synthesis performance of NeRF without any additional training on external data.

Extensive experiments on several real-world datasets demonstrate that StructNeRF

shows superior or comparable performance compared to state-of-the-art methods

(e.g. NeRF, DSNeRF, RegNeRF, Dense Depth Priors, MonoSDF, etc.) for indoor scenes

with sparse inputs both quantitatively and qualitatively.

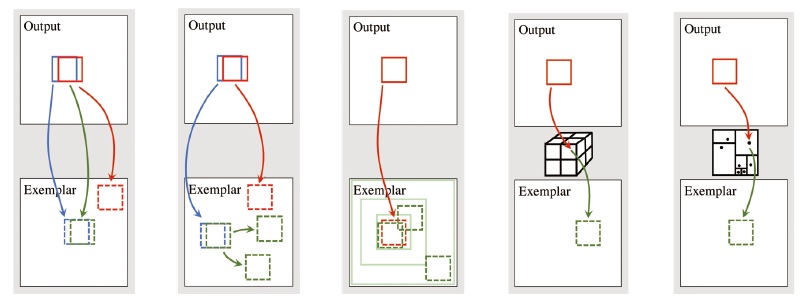

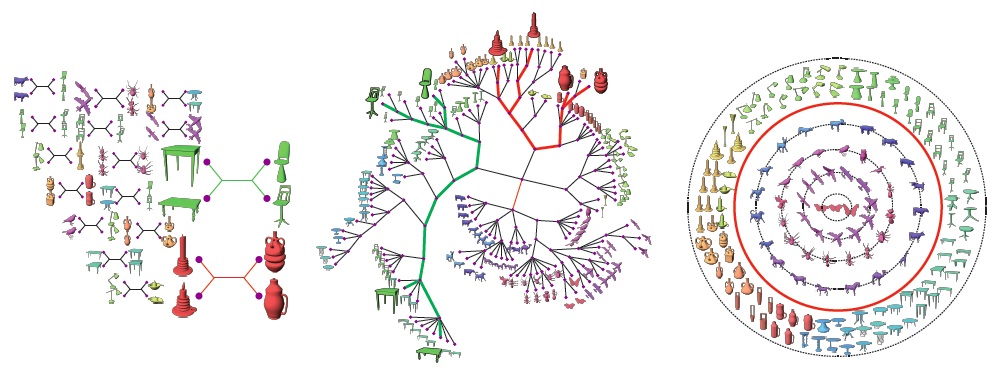

DiffFacto: Controllable Part-Based 3D Point Cloud Generation with Cross Diffusion

IEEE/CVF International Conference on Computer Vision, 2023, 14211-14221.

George Kiyohiro Nakayama; Mikaela Angelina Uy; Jiahui Huang; Shi-Min Hu; Ke Li; Leonidas Guibas

While the community of 3D point cloud generation has witnessed a big growth in recent

years, there still lacks an effective way to enable intuitive user control in the

generation process, hence limiting the general utility of such methods. Since an

intuitive way of decomposing a shape is through its parts, we propose to tackle

the task of controllable part-based point cloud generation. We introduce DiffFacto,

a novel probabilistic generative model that learns the distribution of shapes

with part-level control. We propose a factorization that models independent part

style and part configuration distributions, and present a novel cross diffusion

network that enables us to generate coherent and plausible shapes under our

proposed factorization. Experiments show that our method is able to generate

novel shapes with multiple axes of control. It achieves state-of-the-art

part-level generation quality and generates plausible and coherent shape while

enabling various downstream editing applications such as shape interpolation,

mixing, and transformation editing. Please visit our project webpage at https://difffacto.github.io/

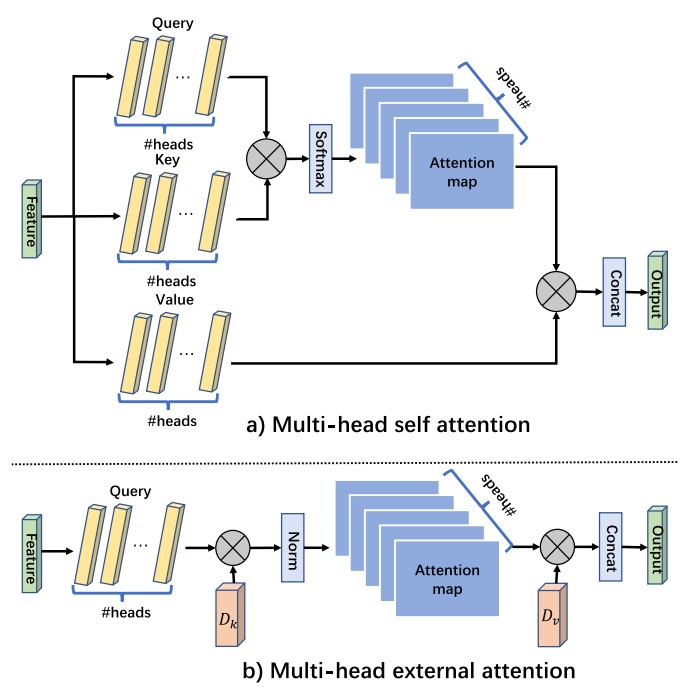

Beyond Self-Attention: External Attention Using Two Linear Layers for Visual Tasks

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, Vol. 45, No. 5, 5436-5447.

Meng-Hao Guo, Zheng-Ning Liu, Tai-Jiang Mu, Shi-Min Hu

Attention mechanisms, especially self-attention, have played an increasingly important role

in deep feature representation for visual tasks. Self-attention updates the feature at each

position by computing a weighted sum of features using pair-wise affinities across all positions

to capture the long-range dependency within a single sample. However, self-attention has

quadratic complexity and ignores potential correlation between different samples.

This article proposes a novel attention mechanism which we call external attention ,

based on two external, small, learnable, shared memories, which can be implemented easily

by simply using two cascaded linear layers and two normalization layers; it conveniently

replaces self-attention in existing popular architectures. External attention has linear

complexity and implicitly considers the correlations between all data samples. We further

incorporate the multi-head mechanism into external attention to provide an all-MLP architecture,

external attention MLP (EAMLP), for image classification. Extensive experiments on

image classification, object detection, semantic segmentation, instance segmentation, image

generation, and point cloud analysis reveal that our method provides results comparable or

superior to the self-attention mechanism and some of its variants, with much lower

computational and memory costs.

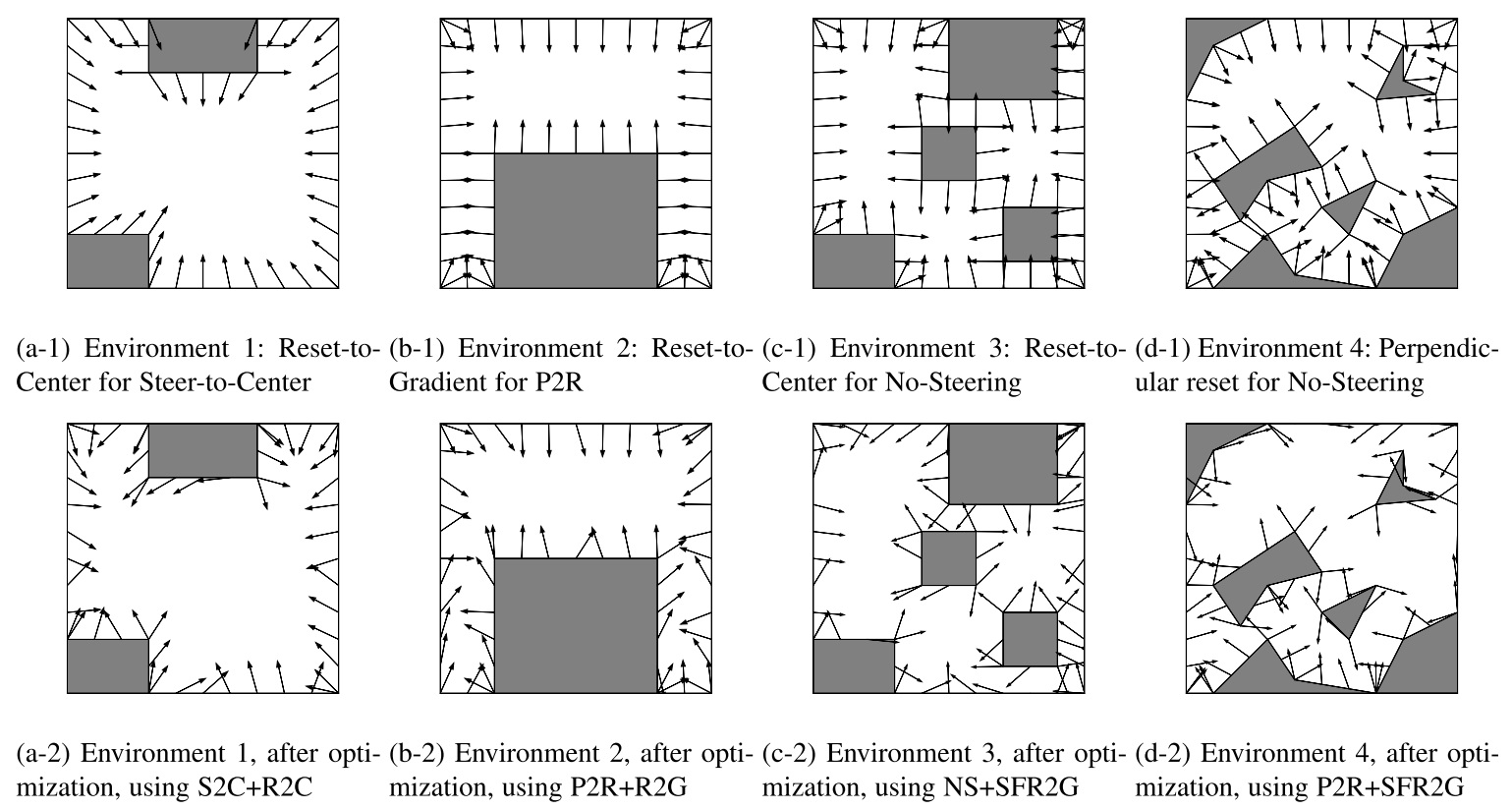

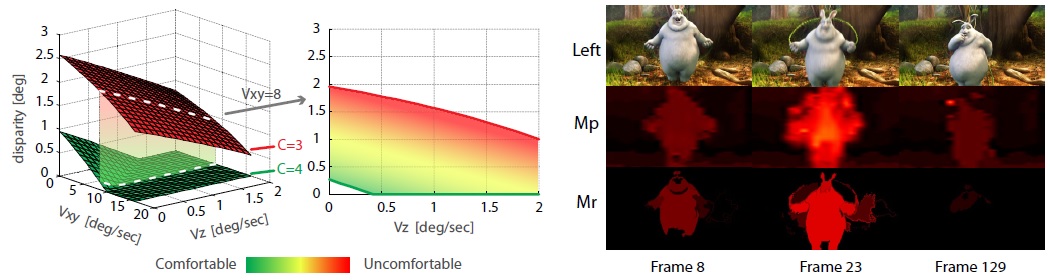

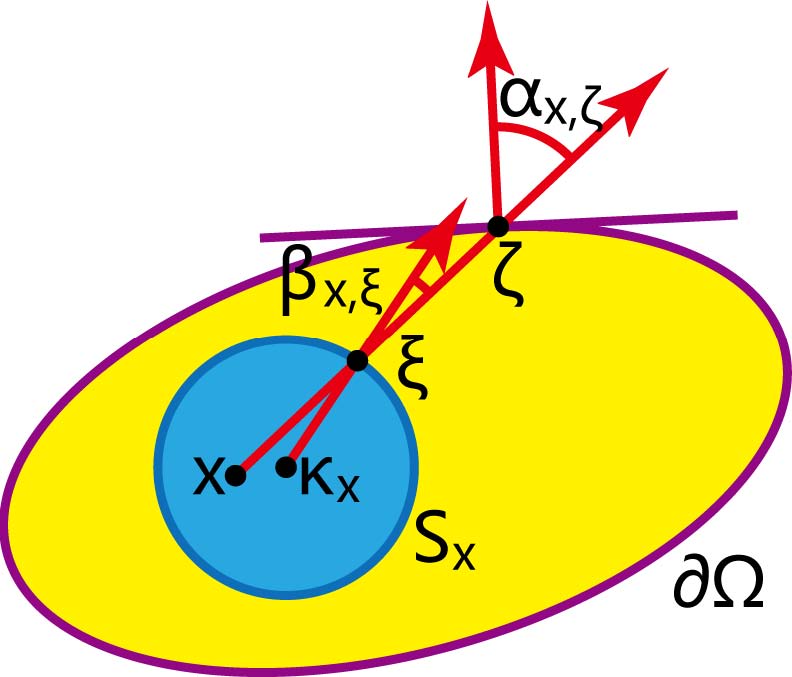

Adaptive Optimization Algorithm for Resetting Techniques in Obstacle-Ridden Environments

IEEE Transactions on Visualization and Computer Graphics, 2023, Vol. 29, No. 4, 1977-1991.

Song-Hai Zhang, Chia-Hao Chen, Fu Zheng, Yong-Liang Yang, Shi-Min Hu

Redirected Walking (RDW) algorithms aim to impose several types of gains on users

immersed in Virtual Reality and distort their walking paths in the real world,

thus enabling them to explore a larger space. Since collision with physical boundaries

is inevitable, a reset strategy needs to be provided to allow users to reset when they

hit the boundary. However, most reset strategies are based on simple heuristics by

choosing a seemingly suitable solution, which may not perform well in practice.

In this article, we propose a novel optimization-based reset algorithm adaptive to

different RDW algorithms. Inspired by the approach of finite element analysis, our

algorithm splits the boundary of the physical world by a set of endpoints. Each

endpoint is assigned a reset vector to represent the optimized reset direction when

hitting the boundary. The reset vectors on the edge will be determined by the

interpolation between two neighbouring endpoints. We conduct simulation-based experiments

for three RDW algorithms with commonly used reset algorithms to compare with. The

results demonstrate that the proposed algorithm significantly reduces the number of resets.

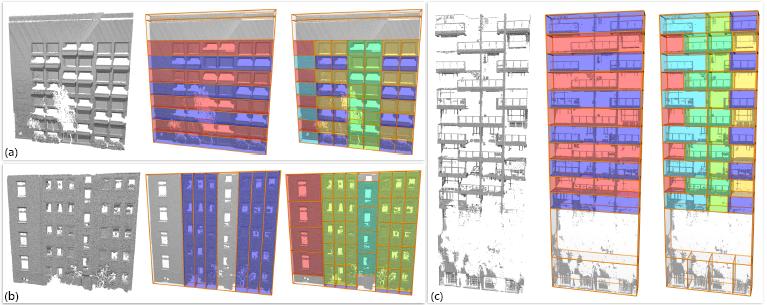

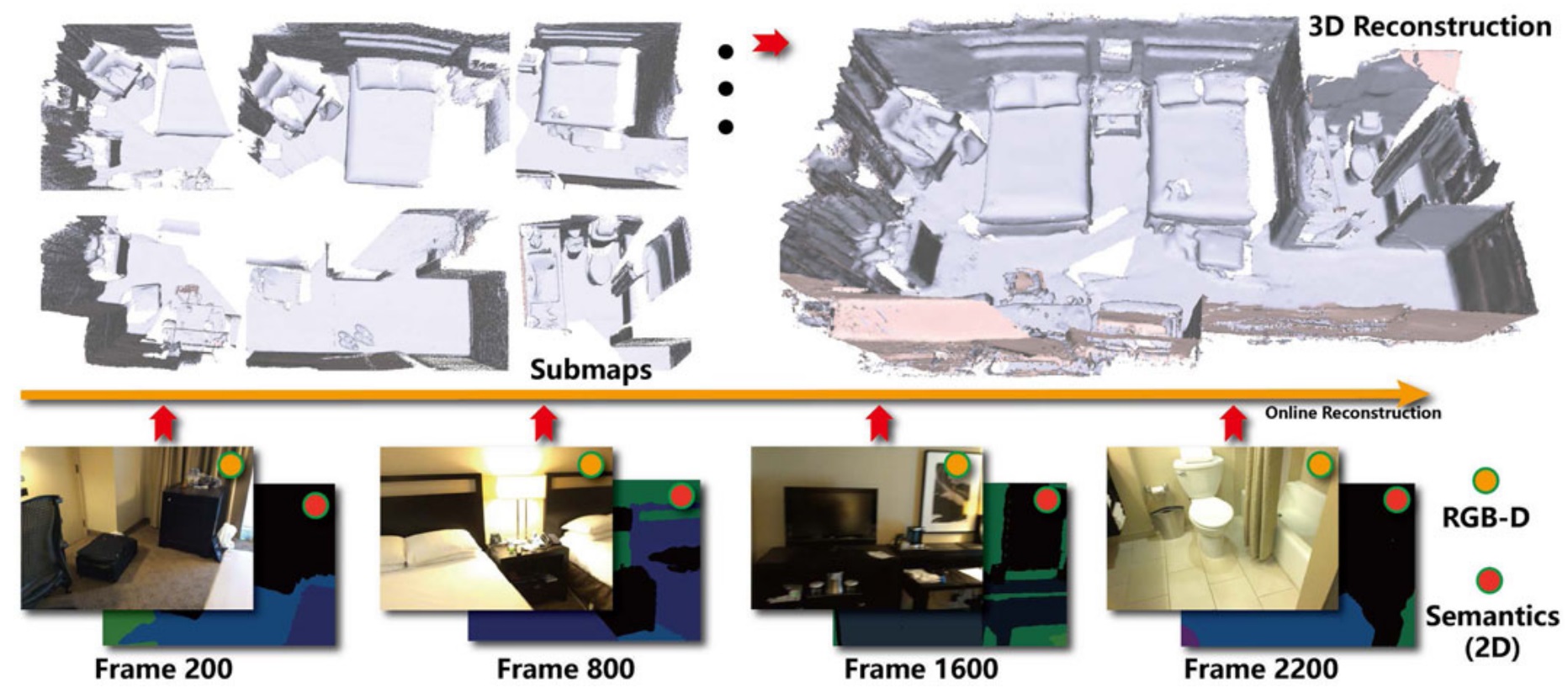

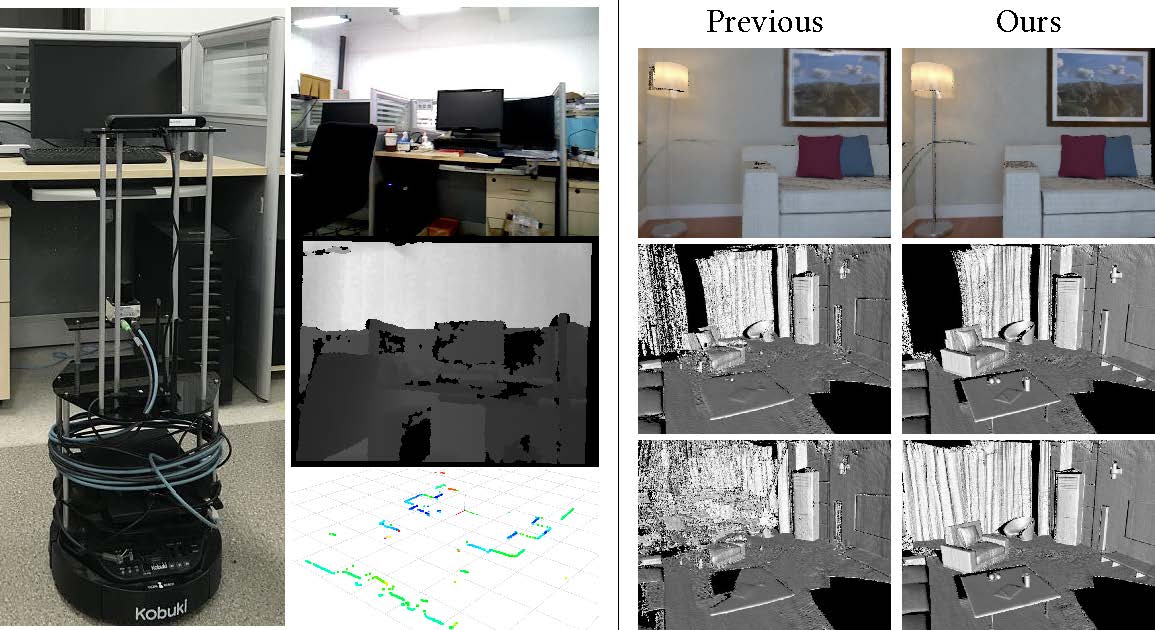

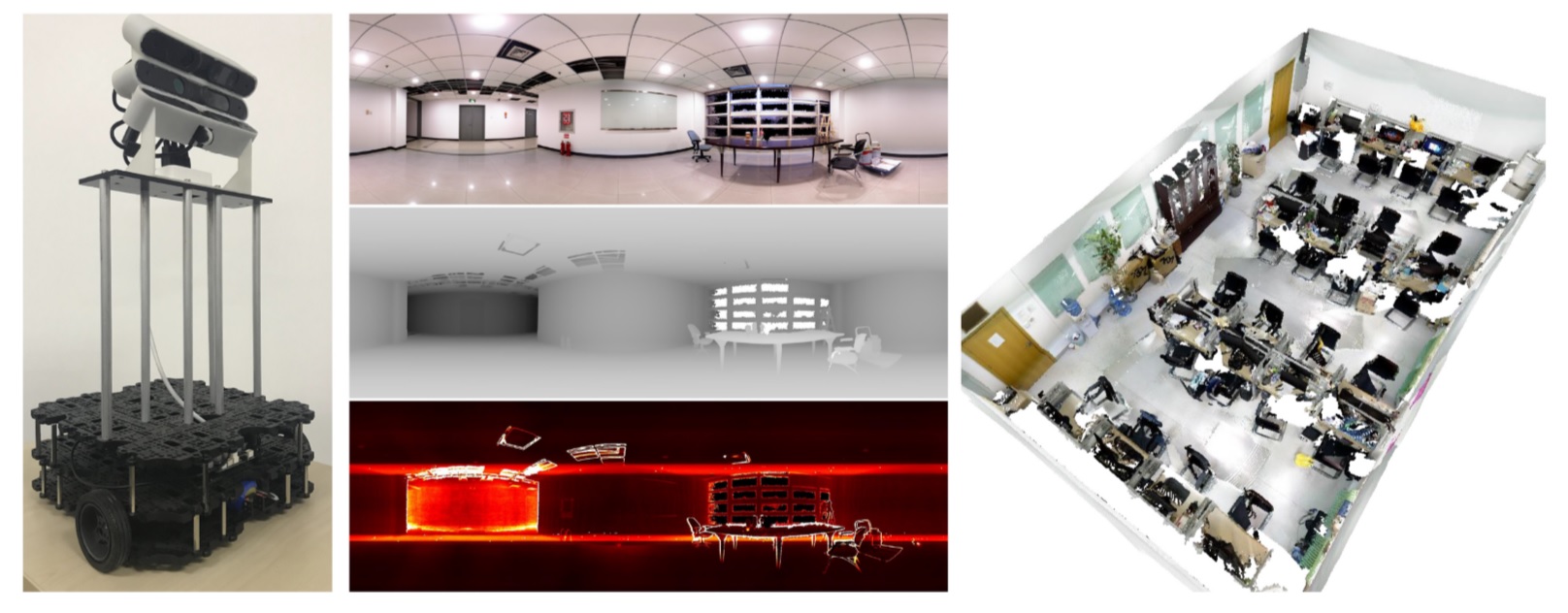

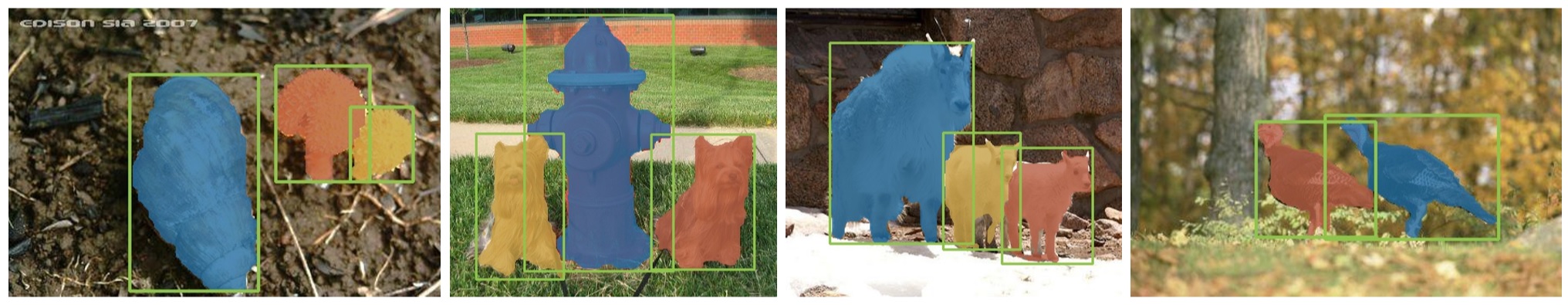

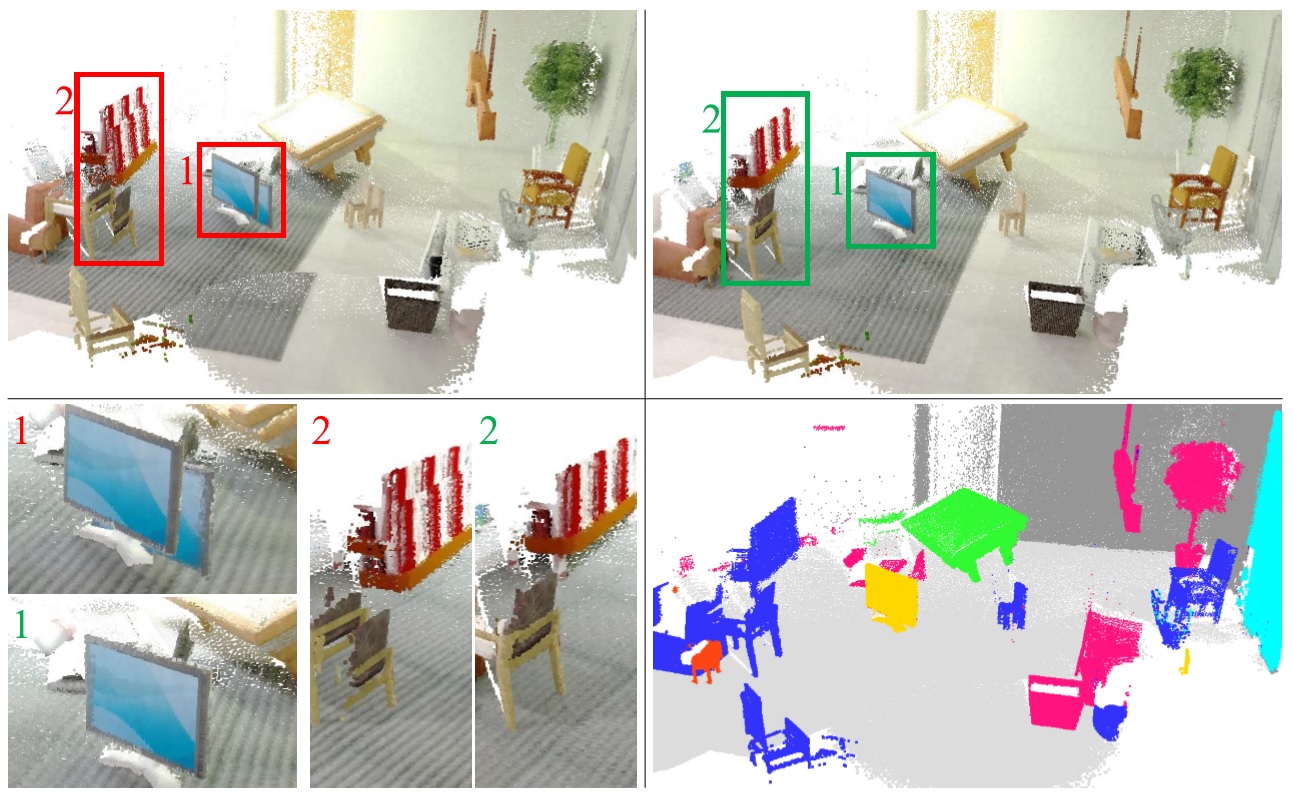

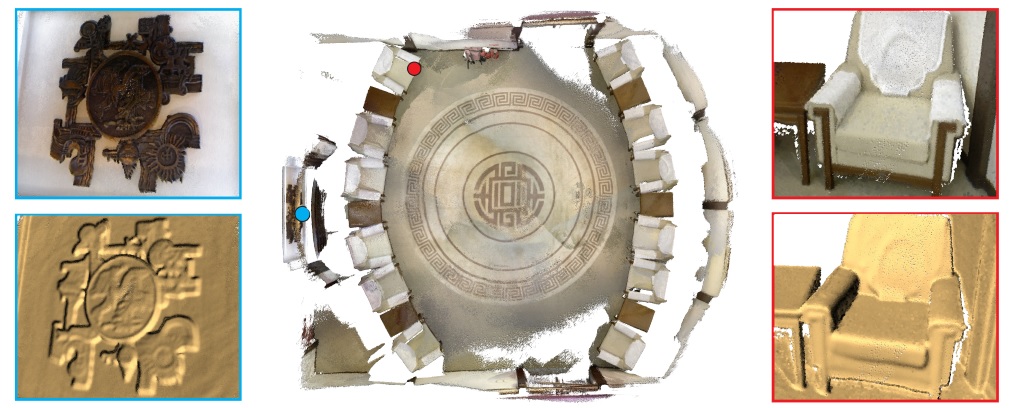

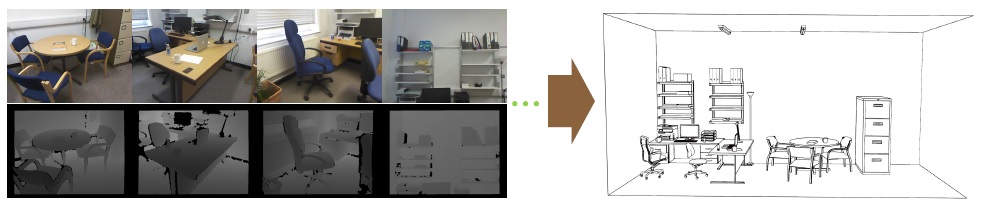

Real-Time Globally Consistent 3D Reconstruction With Semantic Priors

IEEE Transactions on Visualization and Computer Graphics, 2023, Vol. 29, No. 4, 1977-1991.

Shi-Sheng Huang, Haoxiang Chen, Jiahui Huang, Hongbo Fu, Shi-Min Hu

Maintaining global consistency continues to be critical for online 3D indoor

scene reconstruction. However, it is still challenging to generate satisfactory

3D reconstruction in terms of global consistency for previous approaches using

purely geometric analysis, even with bundle adjustment or loop closure techniques.

In this article, we propose a novel real-time 3D reconstruction approach which

effectively integrates both semantic and geometric cues. The key challenge is

how to map this indicative information, i.e., semantic priors, into a metric

space as measurable information, thus enabling more accurate semantic fusion

leveraging both the geometric and semantic cues. To this end, we introduce a

semantic space with a continuous metric function measuring the distance between

discrete semantic observations. Within the semantic space, we present an accurate

frame-to-model semantic tracker for camera pose estimation, and semantic pose

graph equipped with semantic links between submaps for globally consistent 3D

scene reconstruction. With extensive evaluation on public synthetic and real-world

3D indoor scene RGB-D datasets, we show that our approach outperforms the previous

approaches for 3D scene reconstruction both quantitatively and qualitatively,

especially in terms of global consistency.

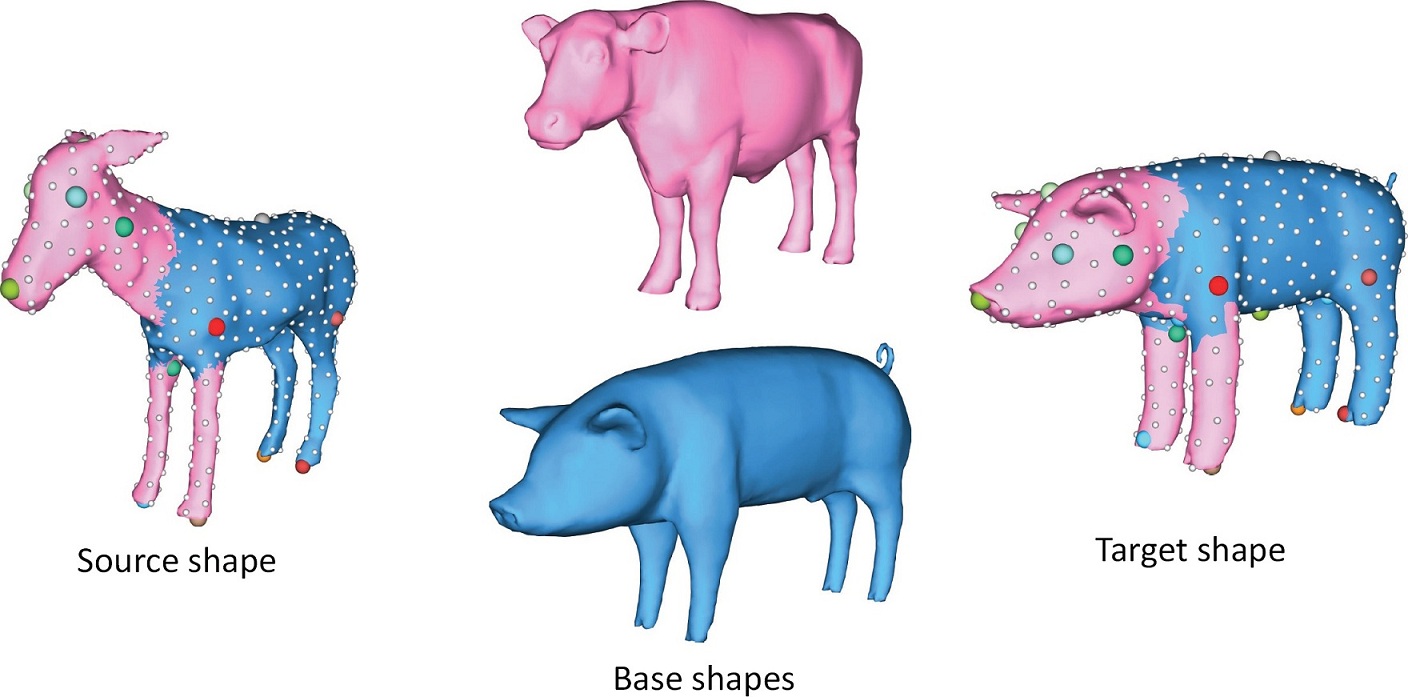

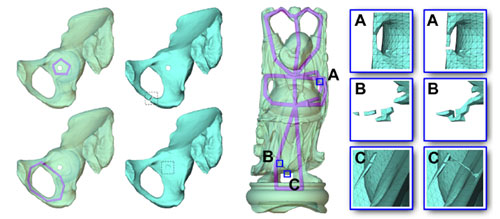

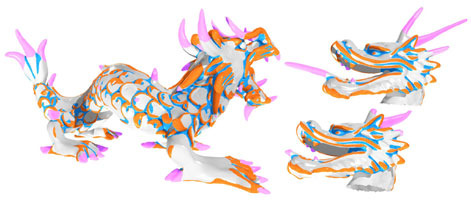

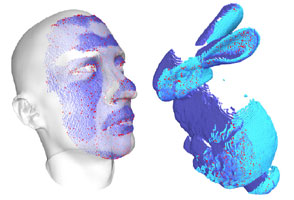

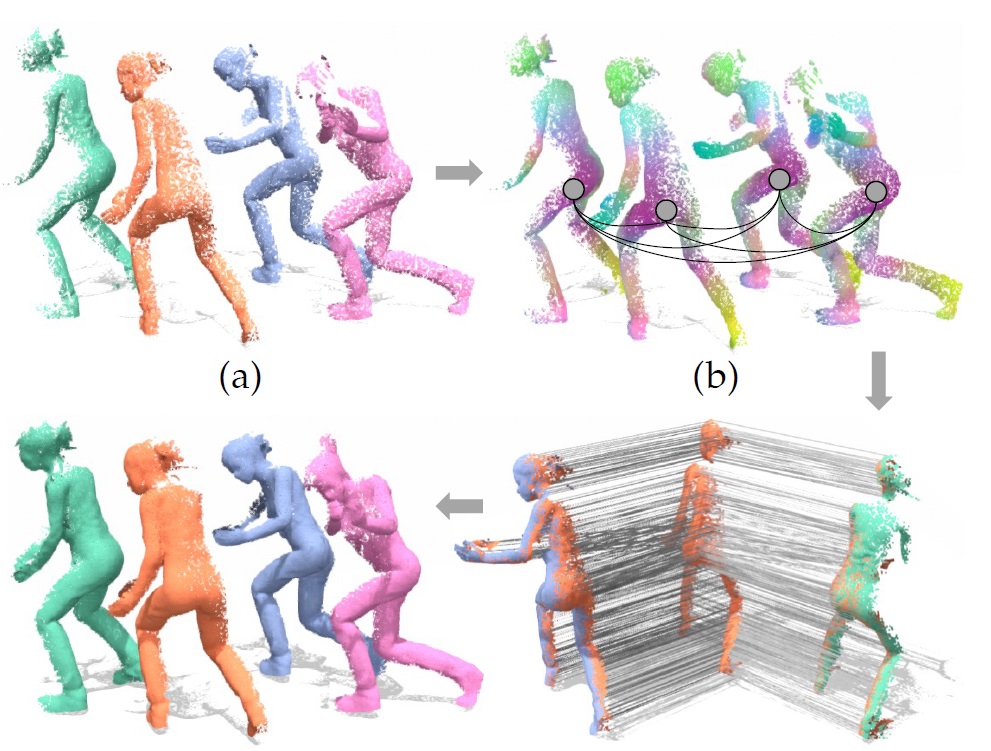

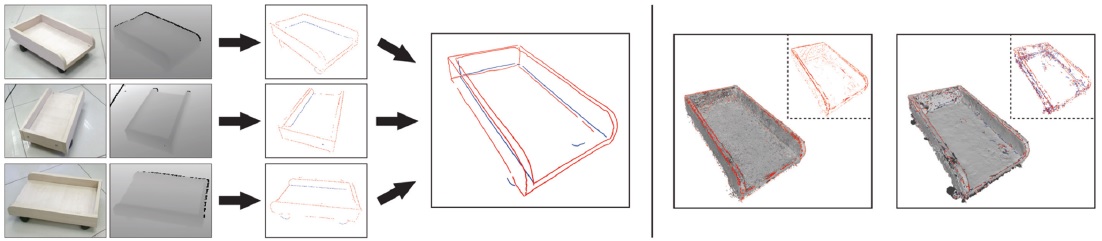

Multiway Non-Rigid Point Cloud Registration via Learned Functional Map Synchronization

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023, Vol. 45, No. 2, 2038 - 2053.

Jiahui Huang, Tolga Birdal, Zan Gojcic, Leonidas J. Guibas, Shi-Min Hu

We present SyNoRiM, a novel way to jointly register multiple non-rigid shapes by synchronizing

the maps that relate learned functions defined on the point clouds. Even though the ability

to process non-rigid shapes is critical in various applications ranging from computer

animation to 3D digitization, the literature still lacks a robust and flexible framework to

match and align a collection of real, noisy scans observed under occlusions. Given a set

of such point clouds, our method first computes the pairwise correspondences parameterized via

functional maps. We simultaneously learn potentially non-orthogonal basis functions to effectively

regularize the deformations, while handling the occlusions in an elegant way.

To maximally benefit from the multi-way information provided by the inferred pairwise

deformation fields, we synchronize the pairwise functional maps into a cycle-consistent whole

thanks to our novel and principled optimization formulation. We demonstrate via extensive

experiments that our method achieves a state-of-the-art performance in registration accuracy,

while being flexible and efficient as we handle both non-rigid and multi-body cases in a unified

framework and avoid the costly optimization over point-wise permutations by the use

of basis function maps.

2022

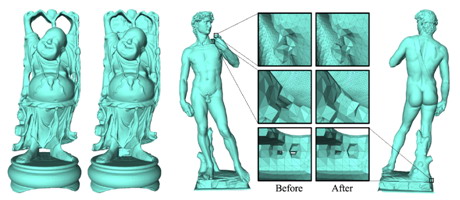

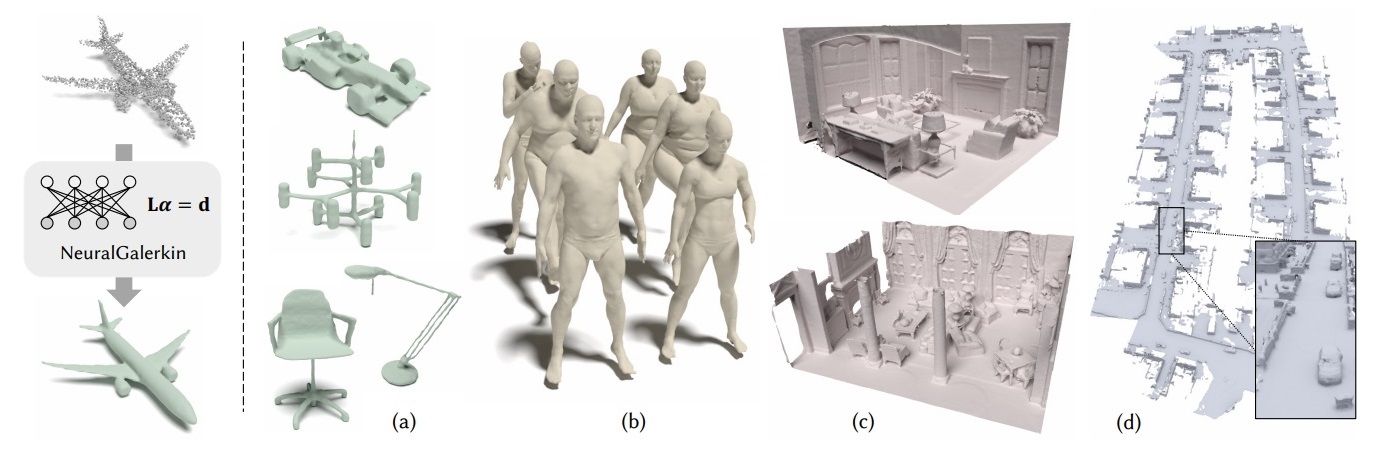

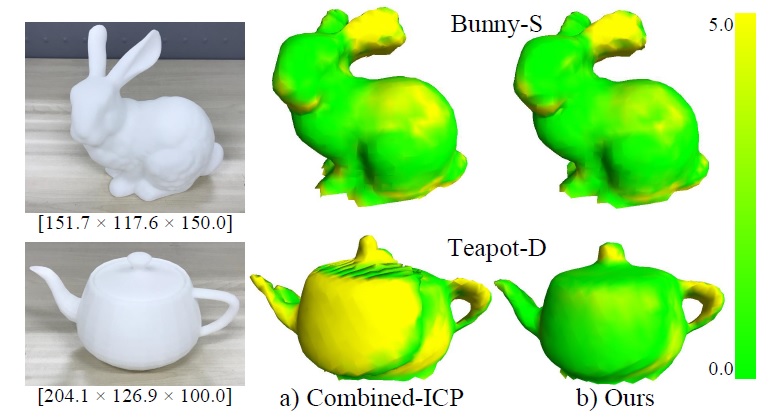

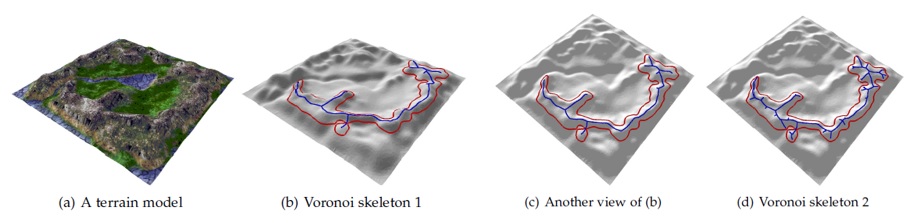

A Neural Galerkin Solver for Accurate Surface Reconstruction

ACM Transactions on Graphics, 2022, Vol. 41, No. 6, article no. 229.

Jiahui Huang, Hao-Xiang Chen, Shi-Min Hu

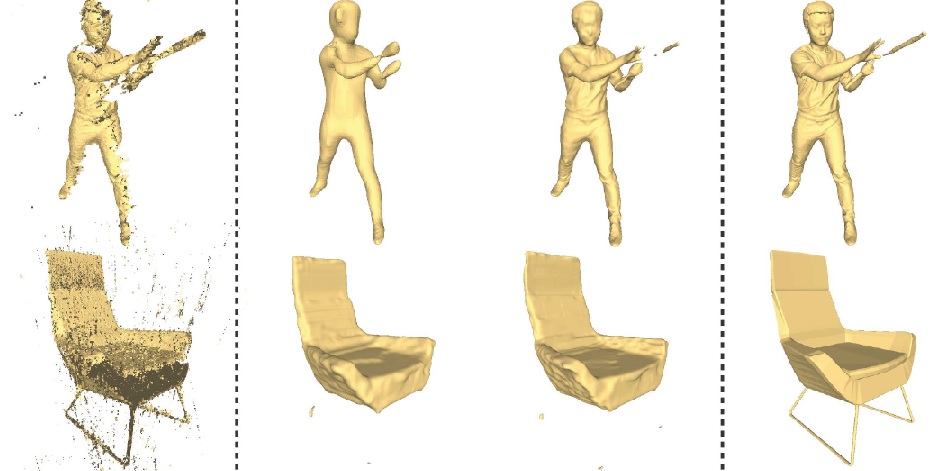

We present SyNoRiM, a novel way to jointly register multiple non-rigid shapes by

synchronizing the maps that relate learned functions defined on the point clouds.

Even though the ability to process non-rigid shapes is critical in various applications

ranging from computer animation to 3D digitization, the literature still lacks a robust and flexible framework to match and align a collection of real, noisy scans observed under occlusions. Given a set of such point clouds, our method first computes the pairwise correspondences parameterized via functional maps. We simultaneously learn potentially non-orthogonal basis functions to effectively regularize the deformations, while handling the occlusions in an elegant way. To maximally benefit from the multi-way information provided by the inferred pairwise deformation fields, we synchronize the pairwise functional maps into a cycle-consistent whole thanks to our novel and principled optimization formulation. We demonstrate via extensive experiments that our method achieves a state-of-the-art performance in registration accuracy, while being flexible and efficient as we handle both non-rigid and multi-body cases in a unified framework and avoid the costly optimization over point-wise permutations by the use of basis function maps.

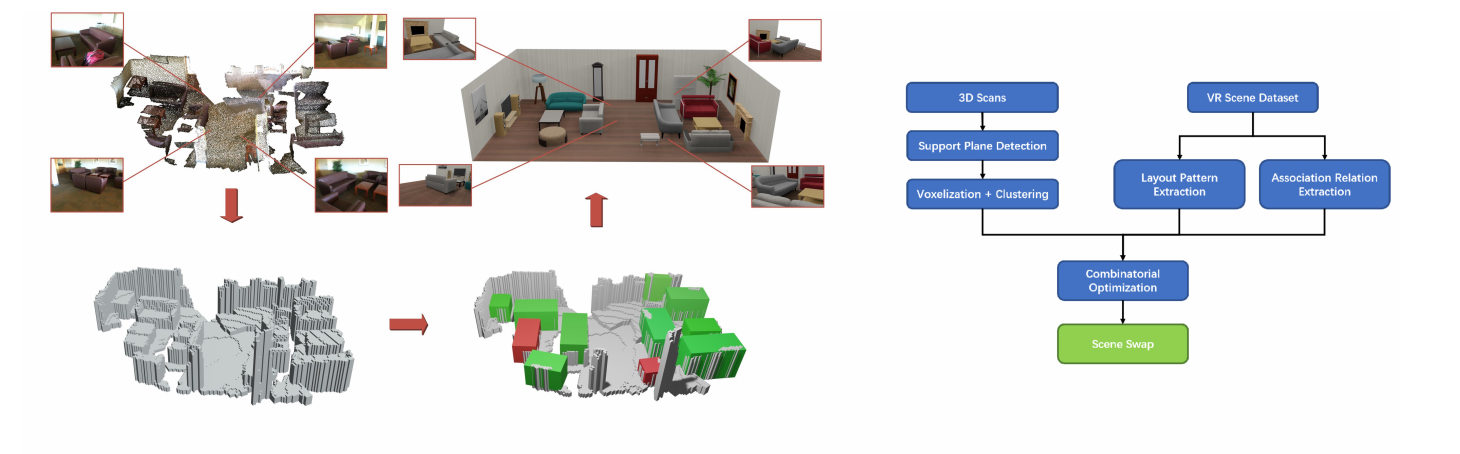

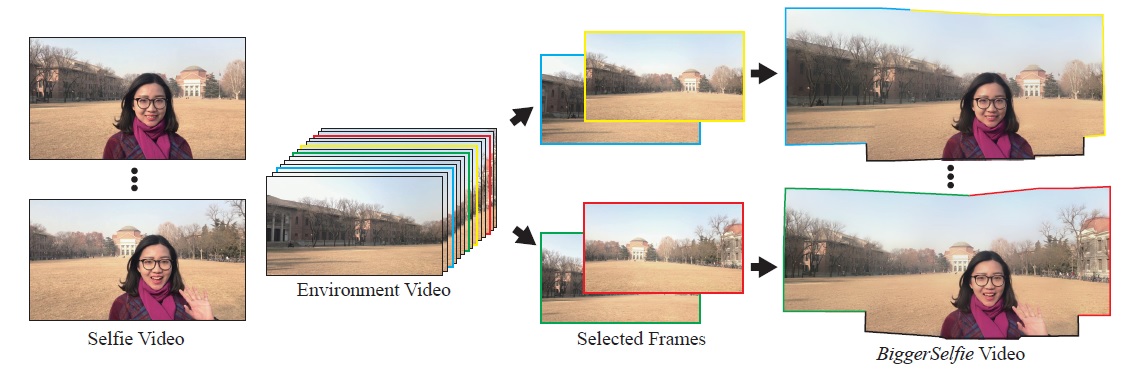

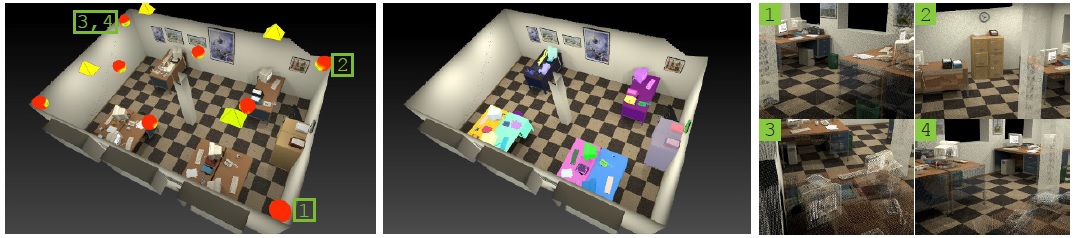

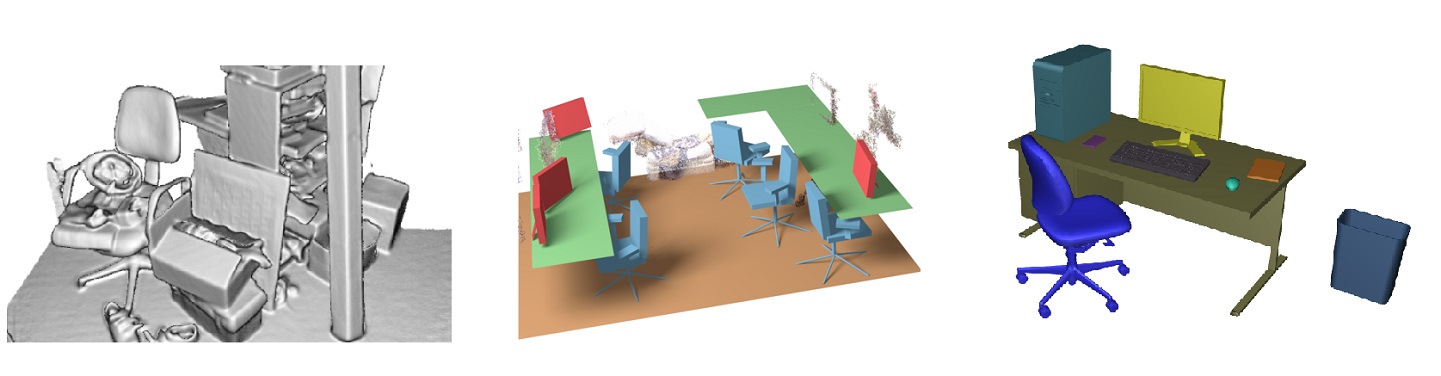

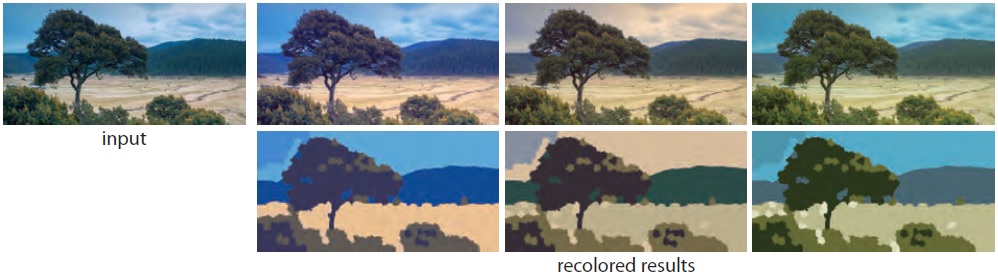

Context-Consistent Generation of Indoor Virtual Environments based on Geometry Constraints

IEEE Transactions on Visualization and Computer Graphics, 2022, Vol. 28, No. 12, 3986-3999.

Yu He, Yingtian Liu, Yihan Jin, Song-Hai Zhang, Yu-Kun Lai, Shi-Min Hu

In this paper, we propose a system that can automatically generate immersive

and interactive virtual reality (VR) scenes by taking real-world geometric

constraints into account. Our system can not only help users avoid real-world

obstacles in virtual reality experiences, but also provide context-consistent

contents to preserve their sense of presence. To do so, our system first

identifies the positions and bounding boxes of scene objects as well as a set

of interactive planes from 3D scans. Then context-compatible virtual objects

that have similar geometric properties to the real ones can be automatically

selected and placed into the virtual scene, based on learned object association

relations and layout patterns from large amounts of indoor scene configurations.

We regard virtual object replacement as a combinatorial optimization problem,

considering both geometric and contextual consistency constraints. Quantitative

and qualitative results show that our system can generate plausible interactive

virtual scenes that highly resemble real environments, and have the ability to

keep the sense of presence for users in their VR experiences.

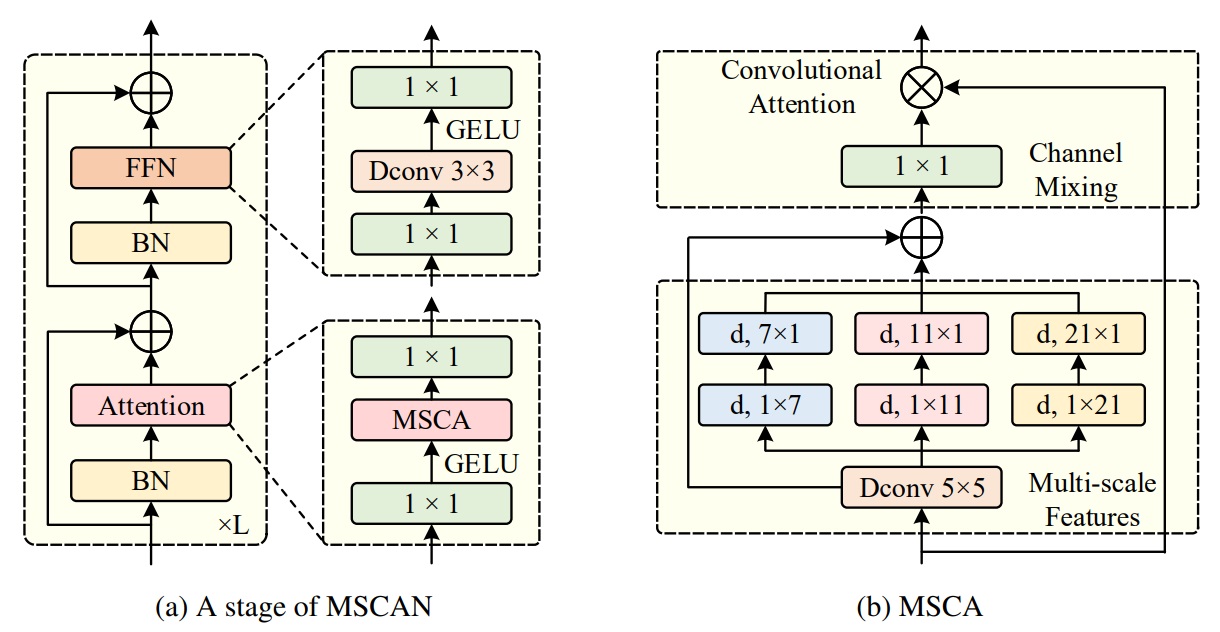

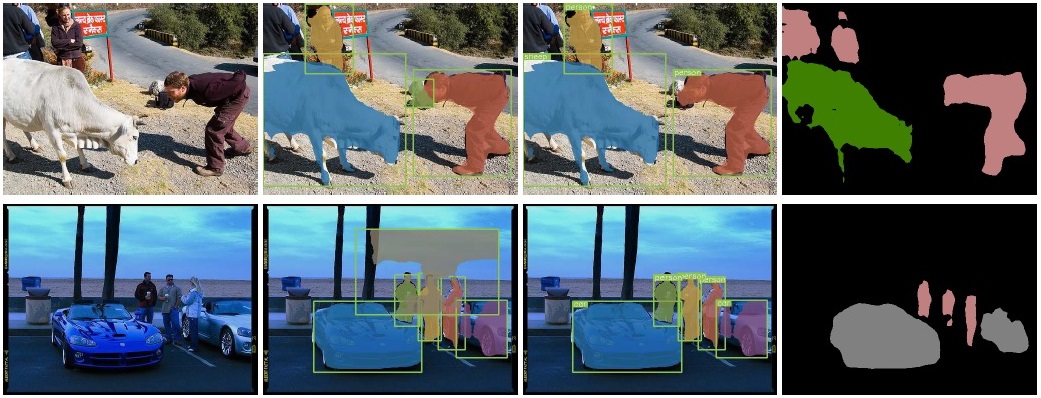

SegNeXt: rethinking convolutional attention design for semantic segmentation

The 36th International Conference on Neural Information Processing Systems, 2022, article No. 84, 1140-1156.

Meng-Hao Guo, Cheng-Ze Lu, Qibin Hou, Zheng-Ning Liu, Ming-Ming Cheng, Shi-Min Hu

We present SegNeXt, a simple convolutional network architecture for semantic segmentation.

Recent transformer-based models have dominated the field of semantic segmentation due

to the efficiency of self-attention in encoding spatial information. In this paper, we

show that convolutional attention is a more Efficient and effective way to encode contextual

information than the self-attention mechanism in transformers. By re-examining the

characteristics owned by successful segmentation models, we discover several key components

leading to the performance improvement of segmentation models. This motivates us to design

a novel convolutional attention network that uses cheap convolutional operations. Without

bells and whistles, our SegNeXt significantly improves the performance of previous

state-of-the-art methods on popular benchmarks, including ADE20K, Cityscapes, COCO-Stuff,

Pascal VOC, Pascal Context, and iSAID. Notably, SegNeXt outperforms EfficientNet-L2 w/ NAS-FPN

and achieves 90.6% mIoU on the Pascal VOC 2012 test leaderboard using only 1/10 parameters

of it. On average, SegNeXt achieves about 2.0% mIoU improvements compared to

the state-of-the-art methods on the ADE20K datasets with the same or fewer computations.

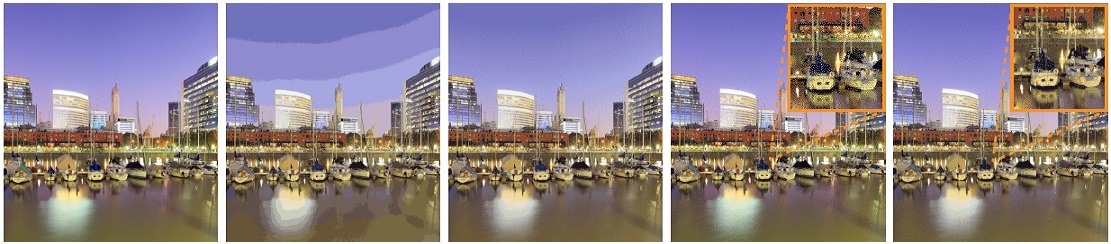

NeRF-SR: High Quality Neural Radiance Fields using Supersampling

Proceedings of the 30th ACM International Conference on Multimedia, 2022, 6445-6454.

Chen Wang, Xian Wu, Yuan-Chen Guo, Song-Hai Zhang, Yu-Wing Tai, Shi-Min Hu

We present NeRF-SR, a solution for high-resolution (HR) novel view synthesis with mostly

low-resolution (LR) inputs. Our method is built upon Neural Radiance Fields (NeRF) that

predicts per-point density and color with a multi-layer perceptron. While producing

images at arbitrary scales, NeRF struggles with resolutions that go beyond observed images.

Our key insight is that NeRF benefits from 3D consistency, which means an observed pixel

absorbs information from nearby views. We first exploit it by a super-sampling strategy

that shoots multiple rays at each image pixel, which further enforces multi-view constraint

at a sub-pixel level. Then, we show that NeRF-SR can further boost the performance of

super-sampling by a refinement network that leverages the estimated depth at hand to

hallucinate details from related patches on only one HR reference image. Experiment

results demonstrate that NeRF-SR generates high-quality results for novel view synthesis

at HR on both synthetic and real-world datasets without any external information. Project

page: https://cwchenwang.github.io/NeRF-SR

Attention mechanisms in computer vision: A survey

Computational Visual Media, 2022, Vol. 8, No. 3, 331-368.

Meng-Hao Guo, Tian-Xing Xu, Jiang-Jiang Liu, Zheng-Ning Liu, Peng-Tao Jiang, Tai-Jiang Mu, Song-Hai Zhang, Ralph R. Martin, Ming-Ming Cheng & Shi-Min Hu

Humans can naturally and effectively find salient regions in complex scenes.

Motivated by this observation, attention mechanisms were introduced into computer

vision with the aim of imitating this aspect of the human visual system. Such an

attention mechanism can be regarded as a dynamic weight adjustment process based

on features of the input image. Attention mechanisms have achieved great success

in many visual tasks, including image classification, object detection, semantic

segmentation, video understanding, image generation, 3D vision, multimodal tasks,

and self-supervised learning. In this survey, we provide a comprehensive review of

various attention mechanisms in computer vision and categorize them according to

approach, such as channel attention, spatial attention, temporal attention, and

branch attention; a related repository

https://github.com/MenghaoGuo/Awesome-Vision-Attentions is dedicated to collecting

related work. We also suggest future directions for attention mechanism research.

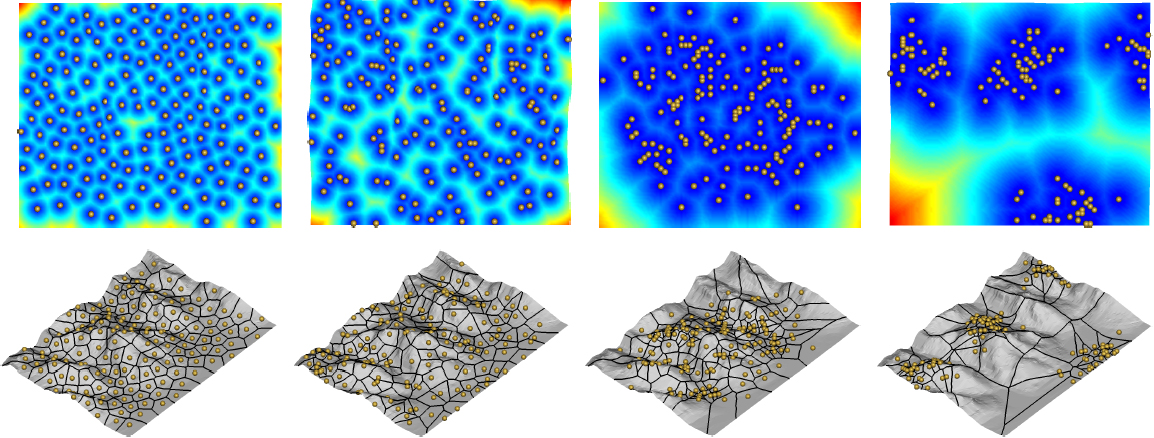

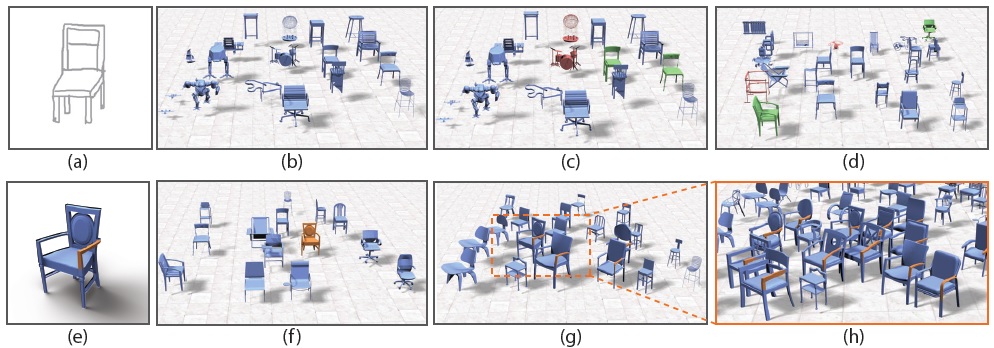

Fast 3D Indoor Scene Synthesis by Learning Spatial Relation Priors of Objects

IEEE Transactions on Visualization and Computer Graphics, 2022, Vol. 28, No. 9, 3082-3092.

Song-Hai Zhang, Shao-Kui Zhang, Wei-Yu Xie, Cheng-Yang Luo, Yongliang Yang, Hongbo Fu

We present a framework for fast synthesizing indoor scenes, given a room geometry

and a list of objects with learnt priors.Unlike existing data-driven solutions,

which often learn priors by co-occurrence analysis and statistical model fitting,

our methodmeasures the strengths of spatial relations by tests for complete

spatial randomness (CSR), and learns discrete priors based onsamples with the

ability to accurately represent exact layout patterns. With the learnt priors,

our method achieves both acceleration andplausibility by partitioning the input

objects into disjoint groups, followed by layout optimization using position-based

dynamics (PBD)based on the Hausdorff metric. Experiments show that our framework

is capable of measuring more reasonable relations amongobjects and simultaneously

generating varied arrangements in seconds compared with the state-of-the-art works.

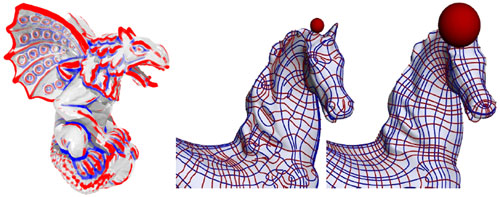

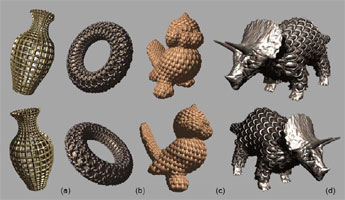

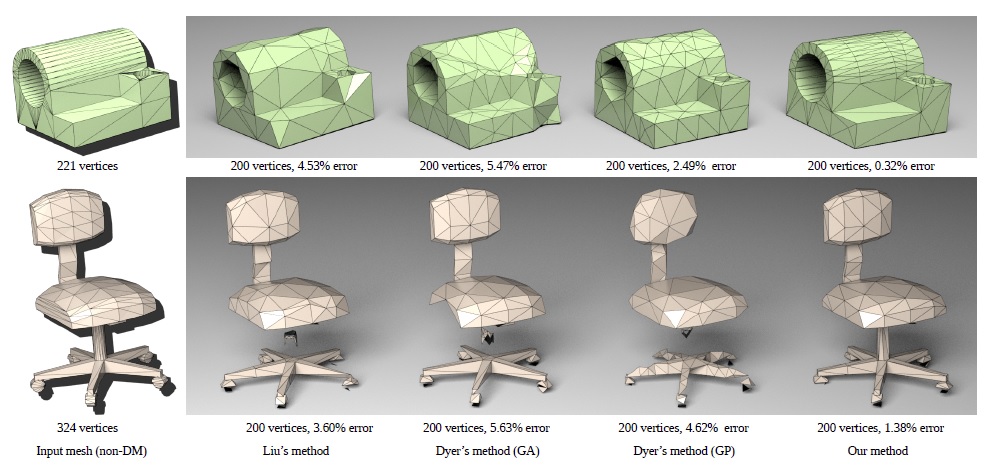

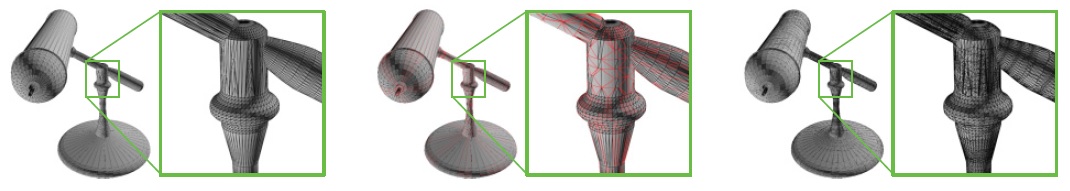

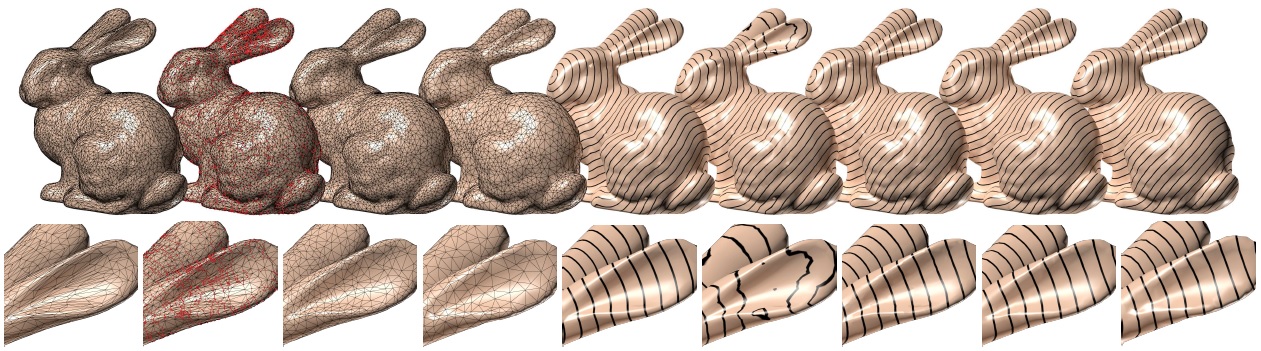

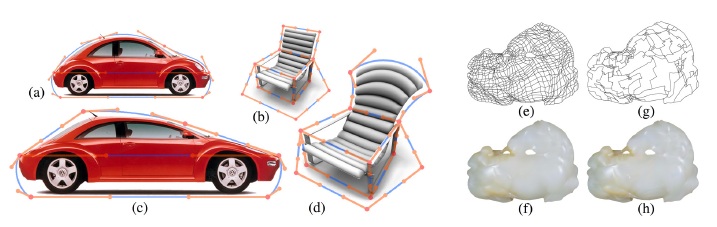

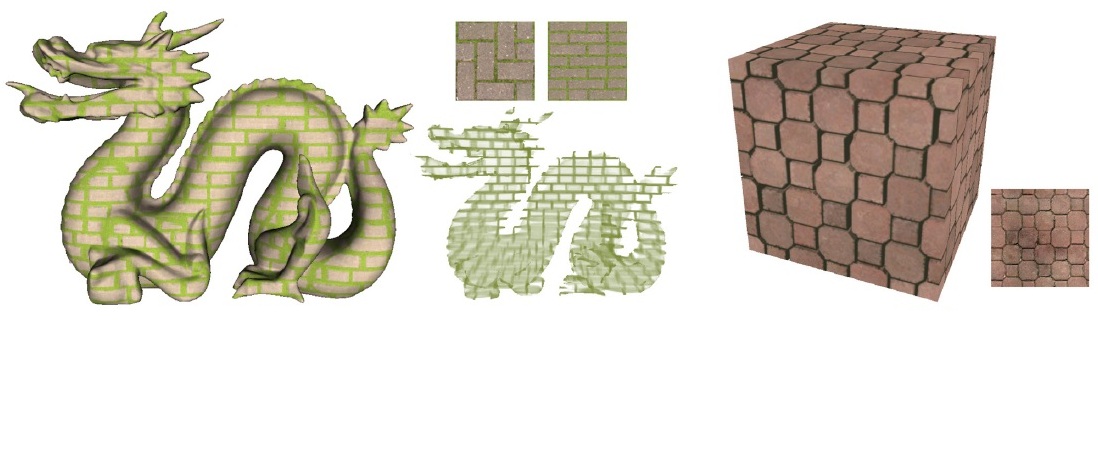

Subdivision-Based Mesh Convolution Networks

ACM Transactions on Graphics, 2022, Vol. 41, No. 3, article no. 25.

Shi-Min Hu, Zheng-Ning Liu, Meng-Hao Guo, Jun-Xiong Cai, Jiahui Huang, Tai-Jiang Tai, Ralph R. Martin

Convolutional neural networks (CNNs) have made great breakthroughs in

2D computer vision. However, their irregular structure makes it hard to

harness the potential of CNNs directly on meshes. A subdivision surface

provides a hierarchical multi-resolution structure, in which each face in a

closed 2-manifold triangle mesh is exactly adjacent to three faces. Motivated

by these two observations, this paper presents SubdivNet, an innovative and

versatile CNN framework for 3D triangle meshes with Loop subdivision

sequence connectivity. Making an analogy between mesh faces and pixels

in a 2D image allows us to present a mesh convolution operator to aggregate

local features from nearby faces. By exploiting face neighborhoods,

this convolution can support standard 2D convolutional network concepts,

e.g. variable kernel size, stride, and dilation. Based on the multi-resolution

hierarchy, we make use of pooling layers which uniformly merge four faces

into one and an upsampling method which splits one face into four. Thereby,

many popular 2D CNN architectures can be easily adapted to process 3D

meshes. Meshes with arbitrary connectivity can be remeshed to have Loop

subdivision sequence connectivity via self-parameterization, making SubdivNet

a general approach. Extensive evaluation and various applications

demonstrate SubdivNet¡¯s effectiveness and efficiency.

2021

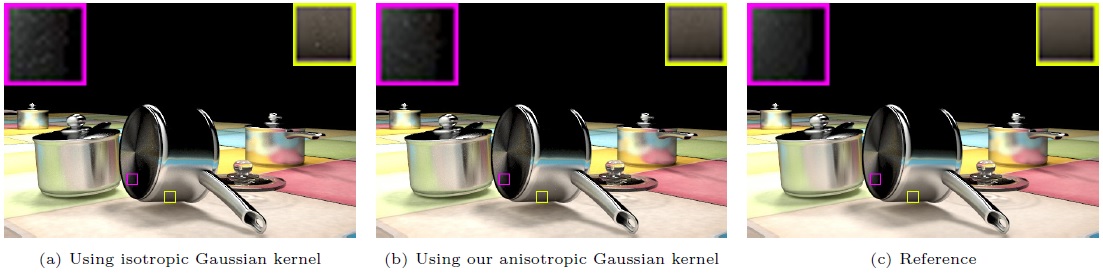

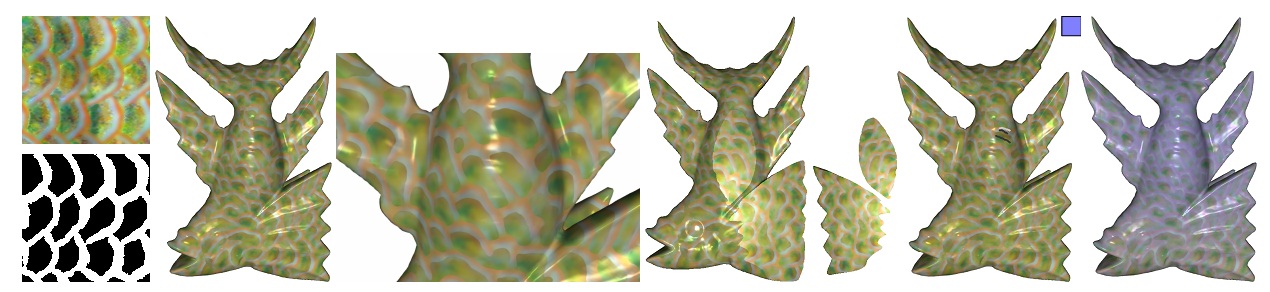

Fast and accurate spherical harmonics products

ACM Transactions on Graphics, 2021, Vol. 40, No. 6. article no. 280.

Hanggao Xin, Zhiqian Zhou, Di An, Ling-Qi Yan, Kun Xu, Shi-Min Hu, Shing-Tung Yau

Spherical Harmonics (SH) have been proven as a powerful tool for rendering,

especially in real-time applications such as Precomputed Radiance Transfer (PRT).

Spherical harmonics are orthonormal basis functions and are efficient in computing

dot products. However, computations of triple product and multiple product

operations are often the bottlenecks that prevent moderately high-frequency

use of spherical harmonics. Specifically state-of-the-art methods for accurate

SH triple products of order n have a time complexity of O(n5), which is a heavy

burden for most real-time applications. Even worse, a brute-force way to compute

k-multiple products would take O(n2k) time. In this paper, we propose a fast and

accurate method for spherical harmonics triple products with the time complexity

of only O(n3), and further extend it for computing k-multiple products with the

time complexity of O(kn3 + k2n2 log(kn)). Our key insight is to conduct the

triple and multiple products in the Fourier space, in which the multiplications

can be performed much more efficiently. To our knowledge, our method is theoretically

the fastest for accurate spherical harmonics triple and multiple products.

And in practice, we demonstrate the efficiency of our method in rendering

applications including mid-frequency relighting and shadow fields.

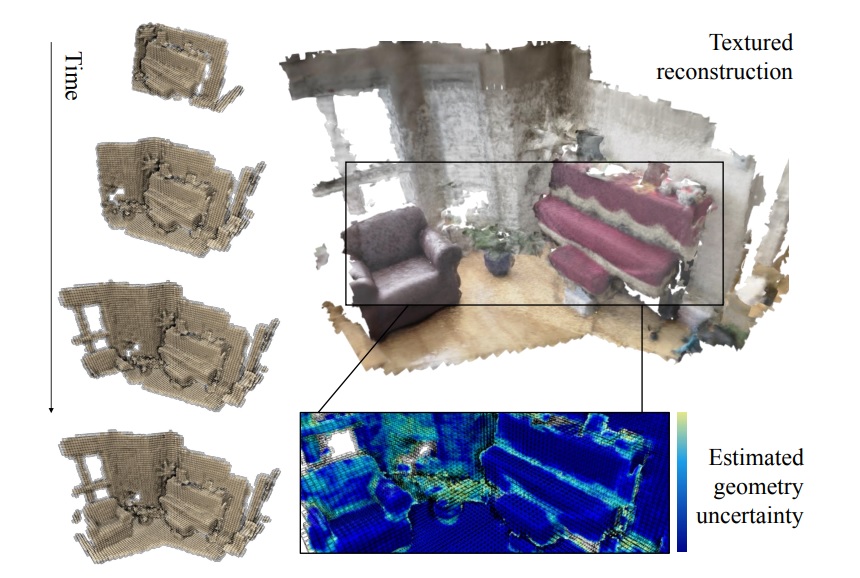

DI-Fusion: Online Implicit 3D Reconstruction with Deep Priors

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, 8932-8941.

Jiahui Huang, Shi-Sheng Huang, Haoxuan Song, Shi-Min Hu

Previous online 3D dense reconstruction methods struggle to achieve

the balance between memory storage and surface quality,

largely due to the usage of stagnant underlying

geometry representation, such as TSDF (truncated signed

distance functions) or surfels, without any knowledge of the

scene priors. In this paper, we present DI-Fusion (Deep

Implicit Fusion), based on a novel 3D representation, i.e.

Probabilistic Local Implicit Voxels (PLIVoxs), for online

3D reconstruction with a commodity RGB-D camera. Our

PLIVox encodes scene priors considering both the local

geometry and uncertainty parameterized by a deep neural network.

With such deep priors, we are able to perform online implicit 3D

reconstruction achieving state-ofthe-art camera trajectory estimation accuracy and mapping

quality, while achieving better storage efficiency compared

with previous online 3D reconstruction approaches. Our

implementation is available at https://www.github.

com/huangjh-pub/di-fusion.

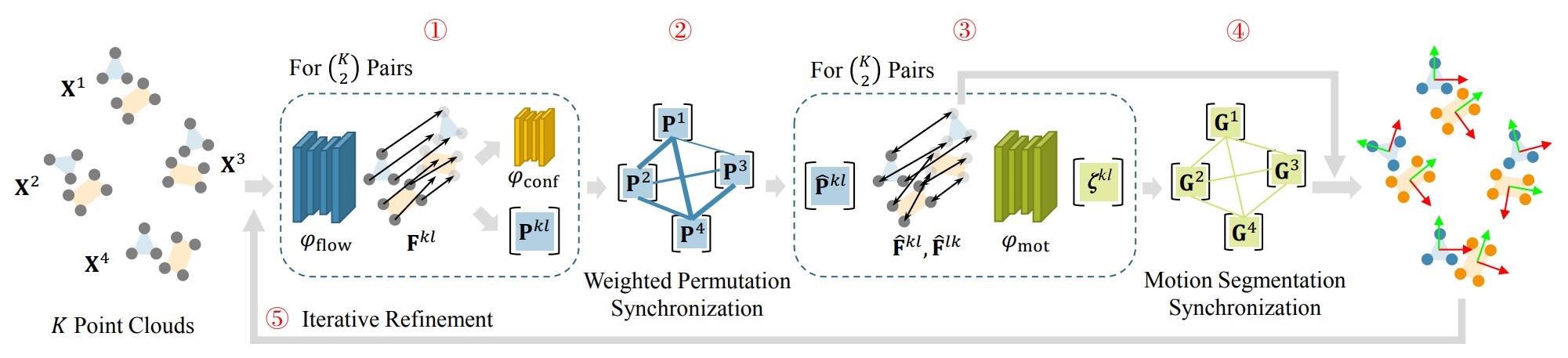

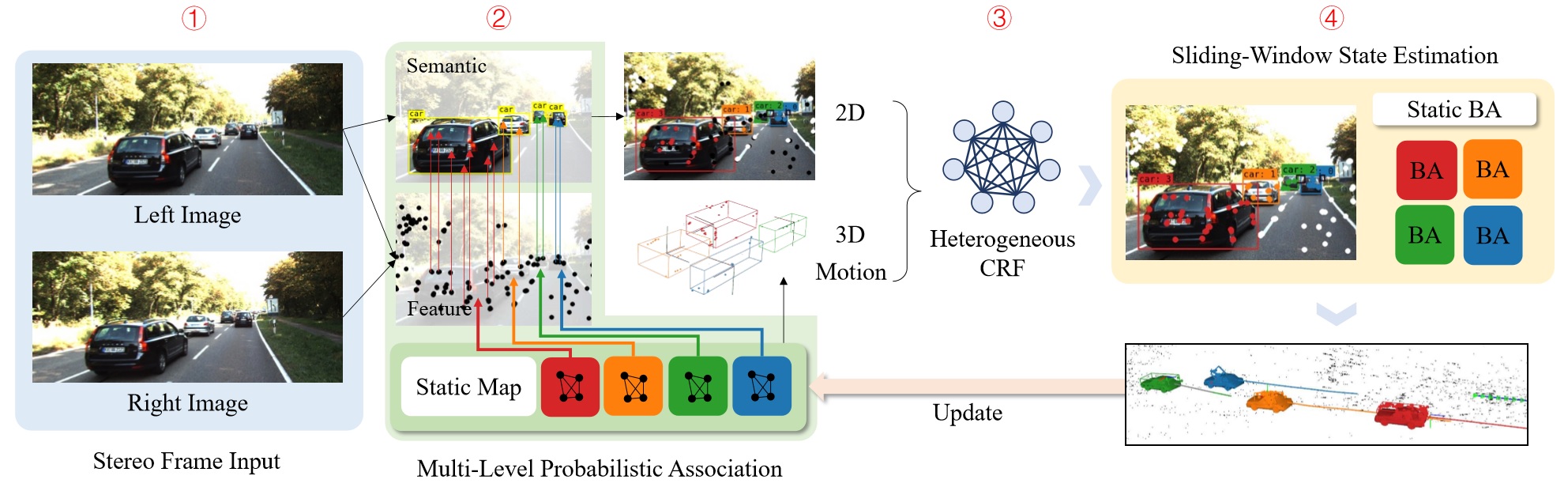

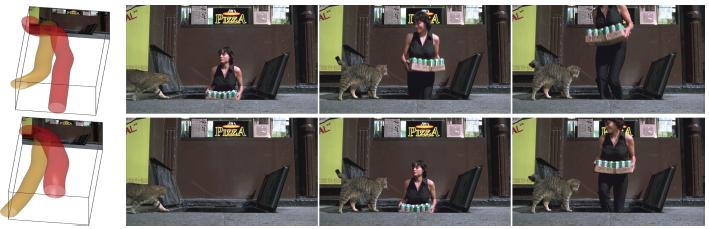

MultiBodySync: Multi-Body Segmentation and Motion Estimation via 3D Scan Synchronization

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, 7108-7118.

Jiahui Huang, He Wang, Tolga Birdal, Minhyuk Sung, Federica Arrigoni, Shi-Min Hu, Leonidas Guibas

We present MultiBodySync, a novel, end-to-end trainable multi-body motion segmentation

and rigid registration framework for multiple input 3D point clouds. The

two non-trivial challenges posed by this multi-scan multibody setting that we

investigate are: (i) guaranteeing correspondence and segmentation consistency

across multiple input point clouds capturing different spatial arrangements

of bodies or body parts; and (ii) obtaining robust motion-based rigid body

segmentation applicable to

novel object categories. We propose an approach to address these issues that

incorporates spectral synchronization into an iterative deep declarative network,

so as to simultaneously recover consistent correspondences as well

as motion segmentation. At the same time, by explicitly

disentangling the correspondence and motion segmentation estimation modules,

we achieve strong generalizability across different object categories.

Our extensive evaluations demonstrate that our method is effective on various

datasets ranging from rigid parts in articulated objects to

individually moving objects in a 3D scene, be it single-view

or full point clouds. Code at https://github.com/

huangjh-pub/multibody-sync.

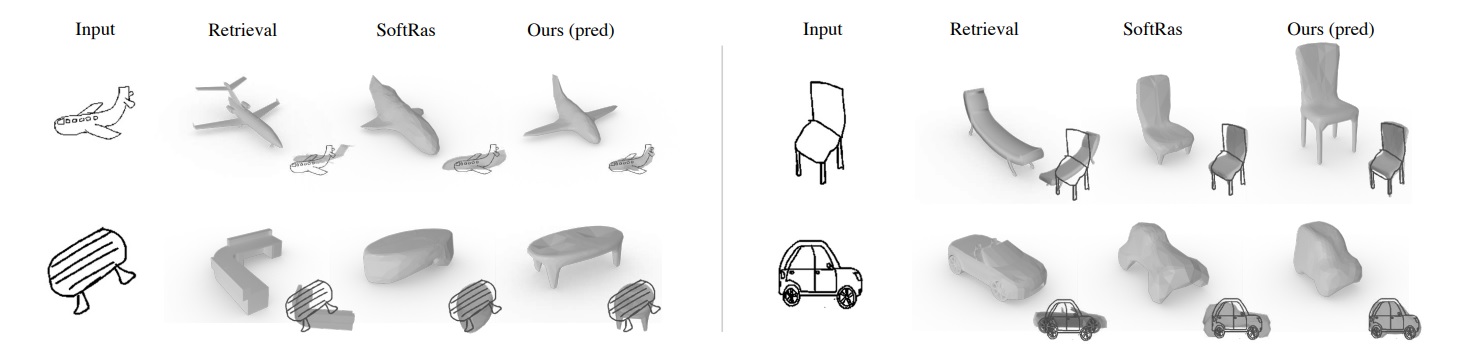

Sketch2Model: View-Aware 3D Modeling from Single Free-Hand Sketches

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021, 6012-6021.

Song-Hai Zhang, Yuan-Chen Guo, Qing-Wen Gu

We investigate the problem of generating 3D meshes

from single free-hand sketches, aiming at fast 3D modeling

for novice users. It can be regarded as a single-view

reconstruction problem, but with unique challenges, brought

by the variation and conciseness of sketches. Ambiguities in

poorly-drawn sketches could make it hard to determine how

the sketched object is posed. In this paper, we address the

importance of viewpoint specification for overcoming such

ambiguities, and propose a novel view-aware generation

approach. By explicitly conditioning the generation process on a given

viewpoint, our method can generate plausible shapes automatically with predicted viewpoints, or with

specified viewpoints to help users better express their intentions.

Extensive evaluations on various datasets demonstrate the effectiveness of our view-aware design in solving

sketch ambiguities and improving reconstruction quality.

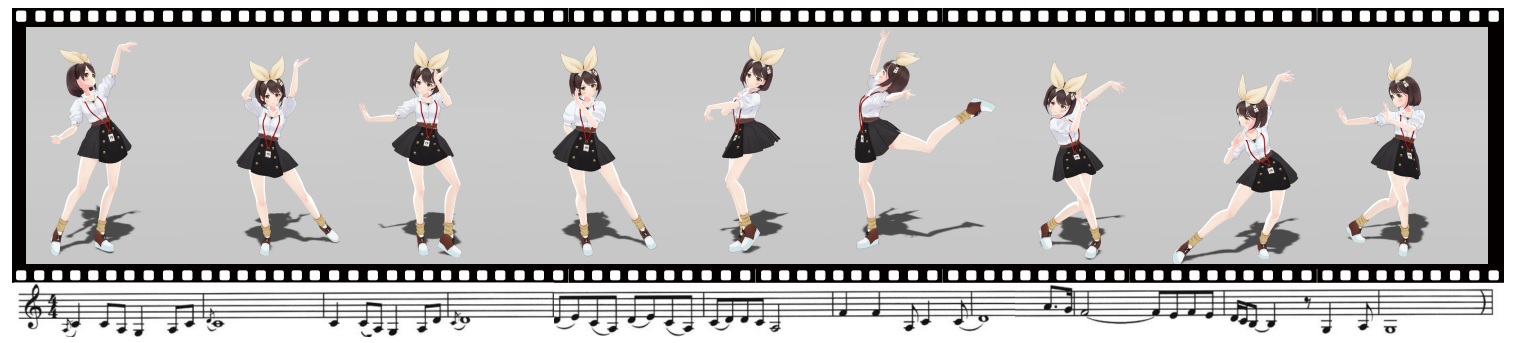

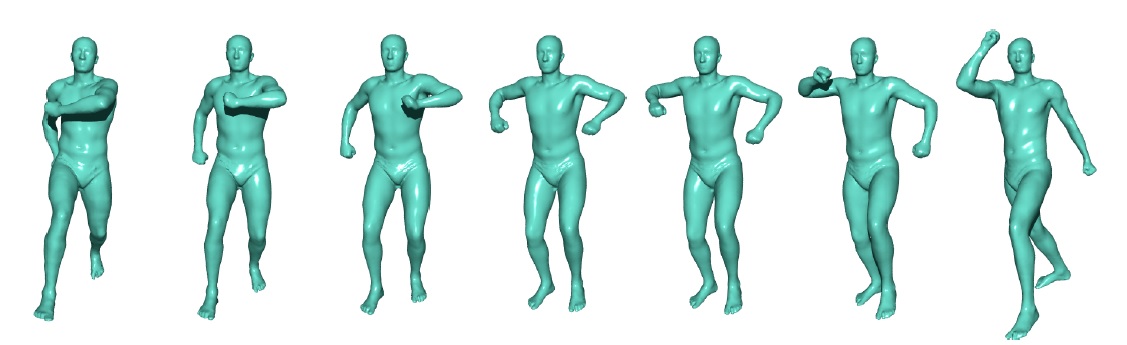

ChoreoMaster: Choreography-Oriented Music-Driven Dance Synthesis

ACM Transactions on Graphics, 2021, Vol. 40, No.4, artice no. 145, pages 1-13.

Kang Chen, Zhipeng Tan, Jin Lei, Song-Hai Zhang, Yuan-Chen Guo, Weidong Zhang, Shi-Min Hu

Despite strong demand in the game and film industry, automatically synthesizing

high-quality dance motions remains a challenging task. In this paper,

we present ChoreoMaster, a production-ready music-driven dance motion

synthesis system. Given a piece of music, ChoreoMaster can automatically

generate a high-quality dance motion sequence to accompany the input

music in terms of style, rhythm and structure. To achieve this goal, we

introduce a novel choreography-oriented choreomusical embedding framework,

which successfully constructs a unified choreomusical embedding space

for both style and rhythm relationships between music and dance phrases.

The learned choreomusical embedding is then incorporated into a novel

choreography-oriented graph-based motion synthesis framework, which

can robustly and efficiently generate high-quality dance motions following

various choreographic rules. Moreover, as a production-ready system,

ChoreoMaster is sufficiently controllable and comprehensive for users to

produce desired results. Experimental results demonstrate that dance motions

generated by ChoreoMaster are accepted by professional artists.

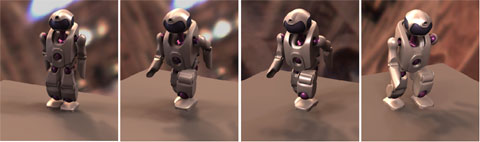

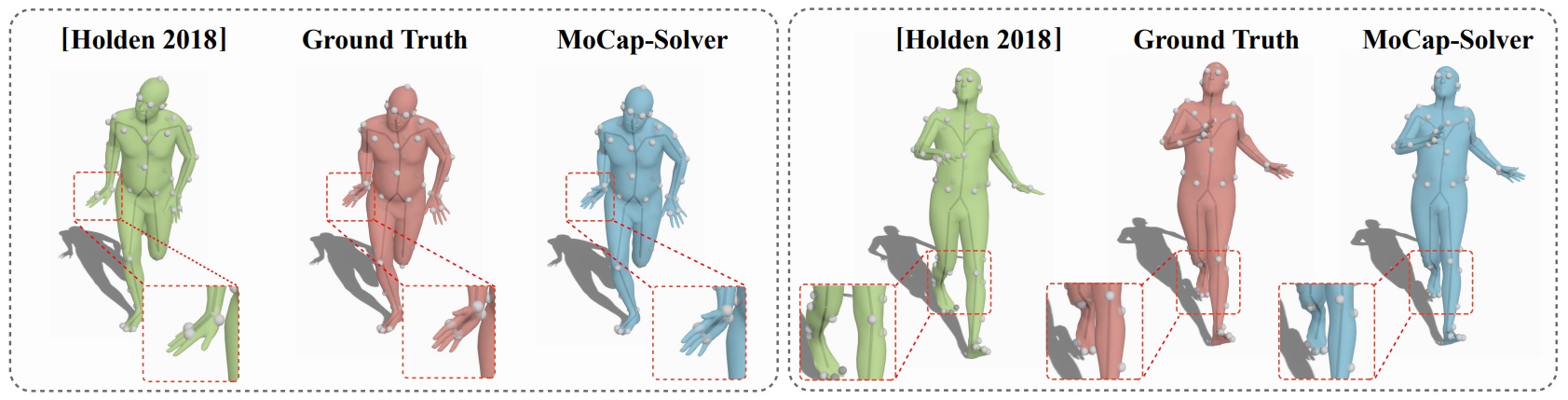

MoCap-Solver: A Neural Solver for Optical Motion Capture Data

ACM Transactions on Graphics, 2021, Vol. 40, No.4, artice no. 84, pages 1-11.

Kang Chen, Yupan Wang, Song-Hai Zhang, Sen-Zhe Xu, Weidong Zhang, Shi-Min Hu

In a conventional optical motion capture (MoCap) workflow, two processes

are needed to turn captured raw marker sequences into correct skeletal

animation sequences. Firstly, various tracking errors present in the

markers must be fixed (cleaning or refining). Secondly, an agent skeletal mesh

must be prepared for the actor/actress, and used to determine skeleton

information from the markers (re-targeting or solving). The whole process,

normally referred to as solving MoCap data, is extremely time-consuming,

labor-intensive, and usually the most costly part of animation production.

Hence, there is a great demand for automated tools in industry. In this

work, we present MoCap-Solver, a production-ready neural solver for optical

MoCap data. It can directly produce skeleton sequences and clean marker

sequences from raw MoCap markers, without any tedious manual operations.

To achieve this goal, our key idea is to make use of neural encoders

concerning three key intrinsic components: the template skeleton, marker

configuration and motion, and to learn to predict these latent vectors from

imperfect marker sequences containing noise and errors. By decoding these

components from latent vectors, sequences of clean markers and skeletons

can be directly recovered. Moreover, we also provide a novel normalization

strategy based on learning a pose-dependent marker reliability function,

which greatly improves system robustness. Experimental results demonstrate

that our algorithm consistently outperforms the state-of-the-art on

both synthetic and real-world datasets

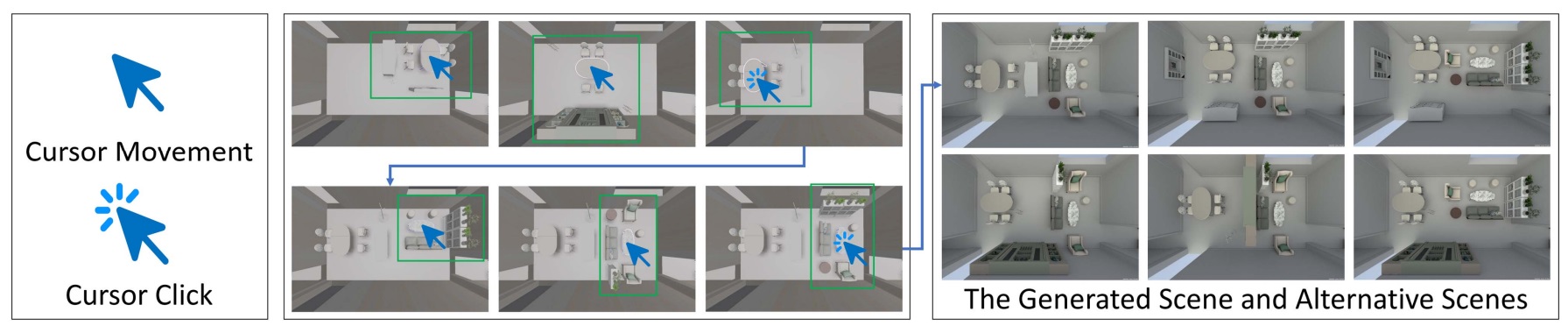

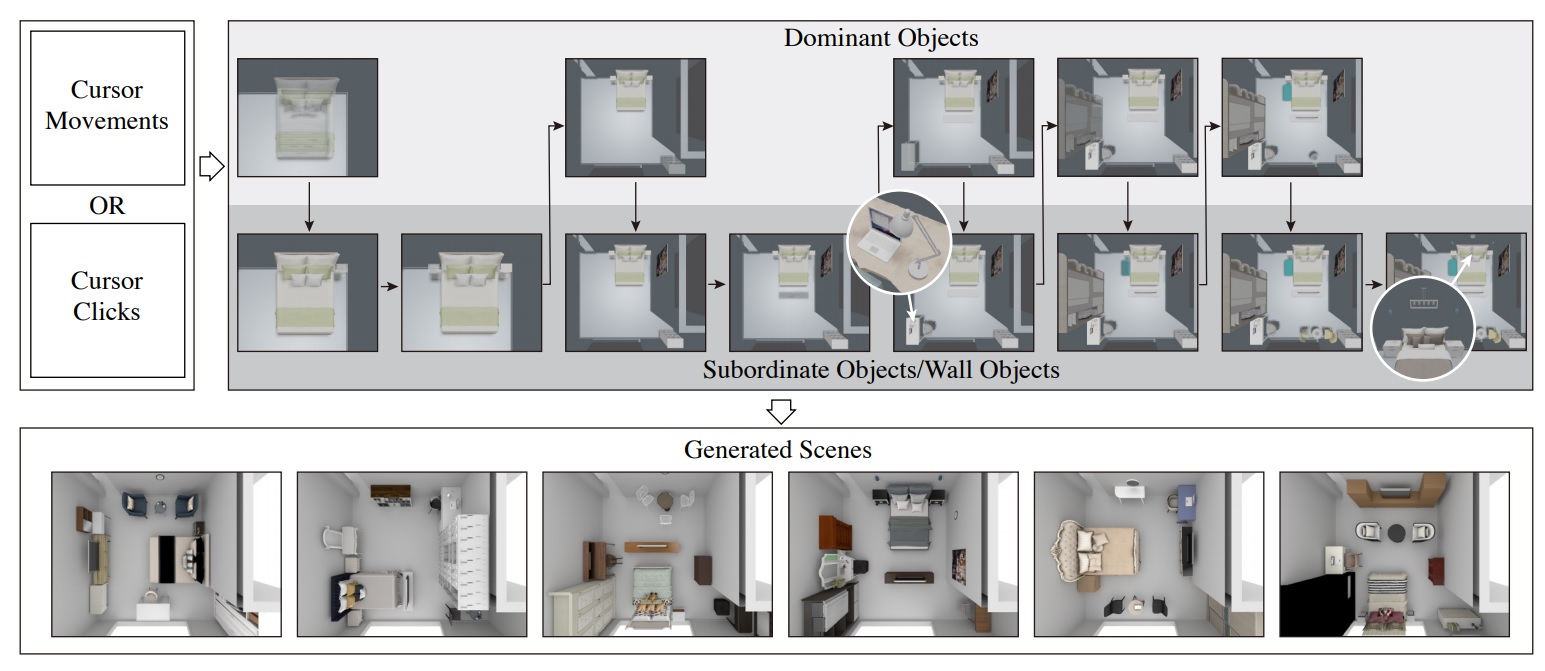

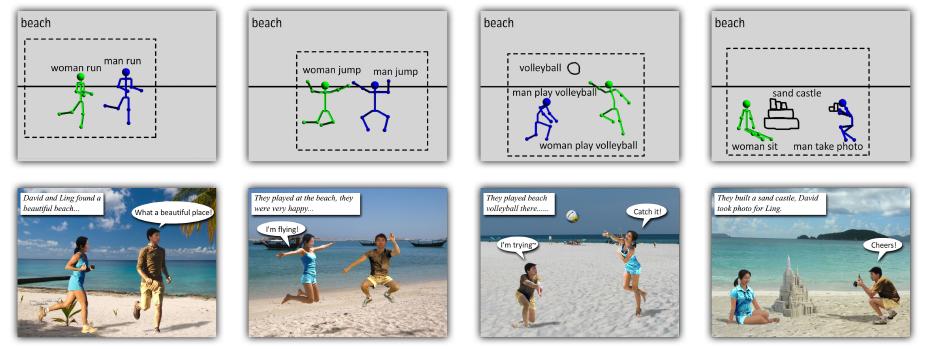

MageAdd: Real-Time Interaction Simulation for Scene Synthesis

Proceedings of the 29th ACM International Conference on Multimedia, October 2021, 965-973.

(click for project webpage in Github)

(click for project webpage in Github)

Shao-Kui Zhang, Yi-Xiao Li, Yu He, Yong-Liang Yang, Song-Hai Zhang

While recent researches on computational 3D scene synthesis have achieved

impressive results, automatically synthesized scenes do not guarantee satisfaction

of end users. On the other hand, manual scene modelling can always ensure high

quality, but requires a cumbersome trial-and-error process. In this paper,

we bridge the above gap by presenting a data-driven 3D scene synthesis framework

that can intelligently infer objects to the scene by incorporating and simulating

user preferences with minimum input. While the cursor is moved and clicked in

the scene, our framework automatically selects and transforms suitable objects

into scenes in real time. This is based on priors learnt from the dataset for

placing different types of objects, and updated according to the current scene

context. Through extensive experiments we demonstrate that our framework outperforms

the state-of-the-art on result aesthetics, and enables effective and efficient user

interactions.

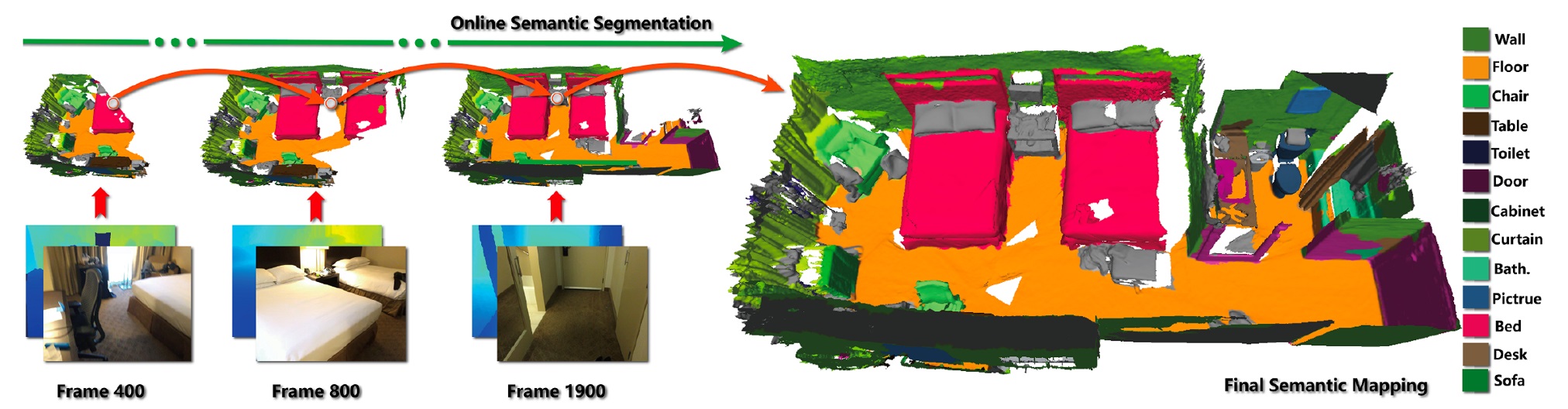

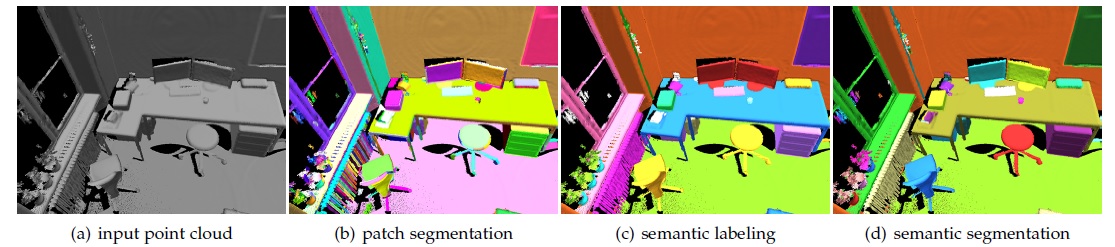

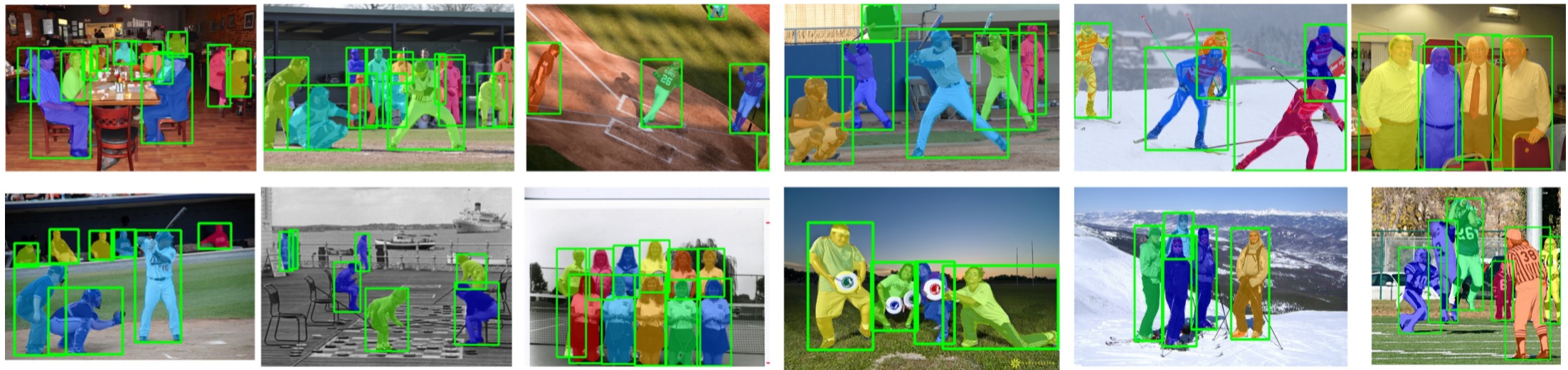

Supervoxel Convolution for Online 3D Semantic Segmentation

ACM Transactions on Graphics, 2021, Vol. 40, No. 3, article No. 34, pages 1-15.

Shi-Sheng Huang, Ze-Yu Ma, Tai-Jiang Ma, Hongbo FU, Shi-Min Hu

Online 3D semantic segmentation, which aims to perform real-time 3D scene

reconstruction along with semantic segmentation, is an important but challenging topic.

A key challenge is to strike a balance between efficiency and

segmentation accuracy. There are very few deep learning based solutions

to this problem, since the commonly used deep representations based on

volumetric-grids or points do not provide efficient 3D representation and

organization structure for online segmentation. Observing that on-surface

supervoxels, i.e., clusters of on-surface voxels, provide a compact representation of 3D

surfaces and brings efficient connectivity structure via supervoxel

clustering, we explore a supervoxel-based deep learning solution for this task.

To this end, we contribute a novel convolution operation (SVConv) directly

on supervoxels. SVConv can efficiently fuse the multi-view 2D features and

3D features projected on supervoxels during the online 3D reconstruction,

and leads to an effective supervoxel-based convolutional neural network,

termed as Supervoxel-CNN, enabling 2D-3D joint learning for 3D semantic

prediction. With the Supervoxel-CNN, we propose a clustering-then-prediction

online 3D semantic segmentation approach. The extensive evaluations on

the public 3D indoor scene datasets show that our approach significantly

outperforms the existing online semantic segmentation systems in terms of

efficiency or accuracy.

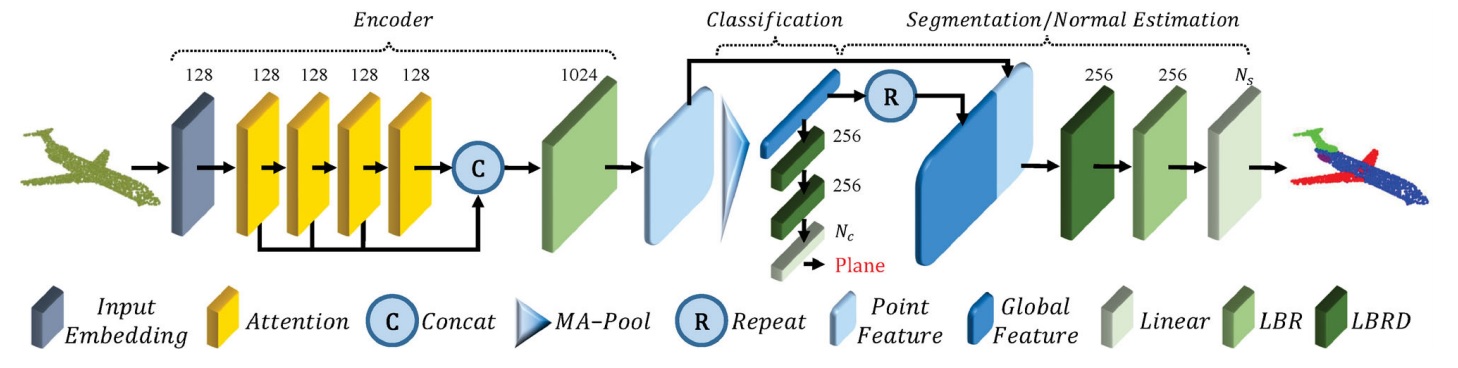

PCT: Point cloud transformer

Computational Visual Media, 2021, Vol. 7, No. 2, 187-199.

Meng-Hao Guo, Jun-Xiong Cai, Zheng-Ning Liu, Tai-Jiang Mu, Ralph R. Martin & Shi-Min Hu

The irregular domain and lack of ordering make it challenging to design deep neural networks for point

cloud processing. This paper presents a novel framework named Point Cloud Transformer (PCT) for point

cloud learning. PCT is based on Transformer, which achieves huge success in natural language processing

and displays great potential in image processing. It is inherently permutation invariant for processing

a sequence of points, making it well-suited for point cloud learning. To better capture local

context within the point cloud, we enhance input embedding with the support of farthest point sampling

and nearest neighbor search. Extensive experiments demonstrate that the PCT achieves the state-of-the-art

performance on shape classification, part segmentation, semantic segmentation, and normal estimation tasks.

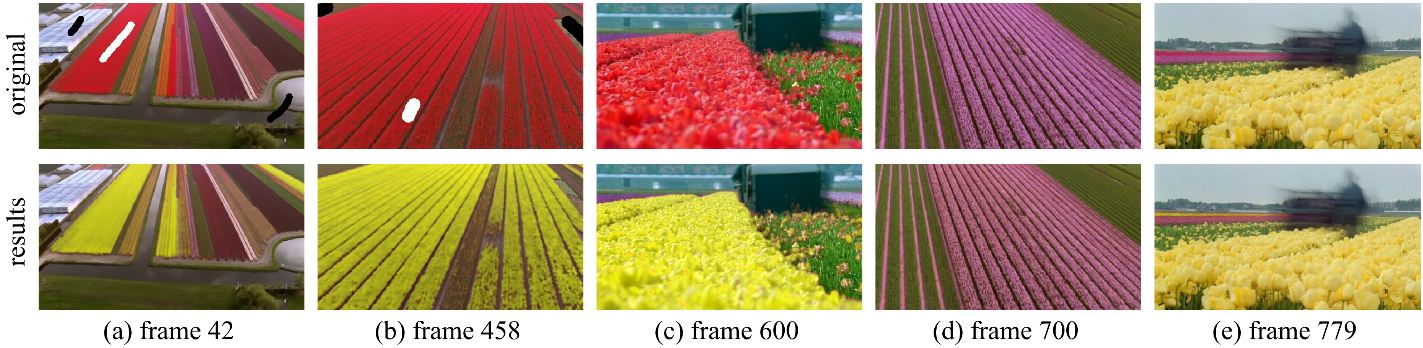

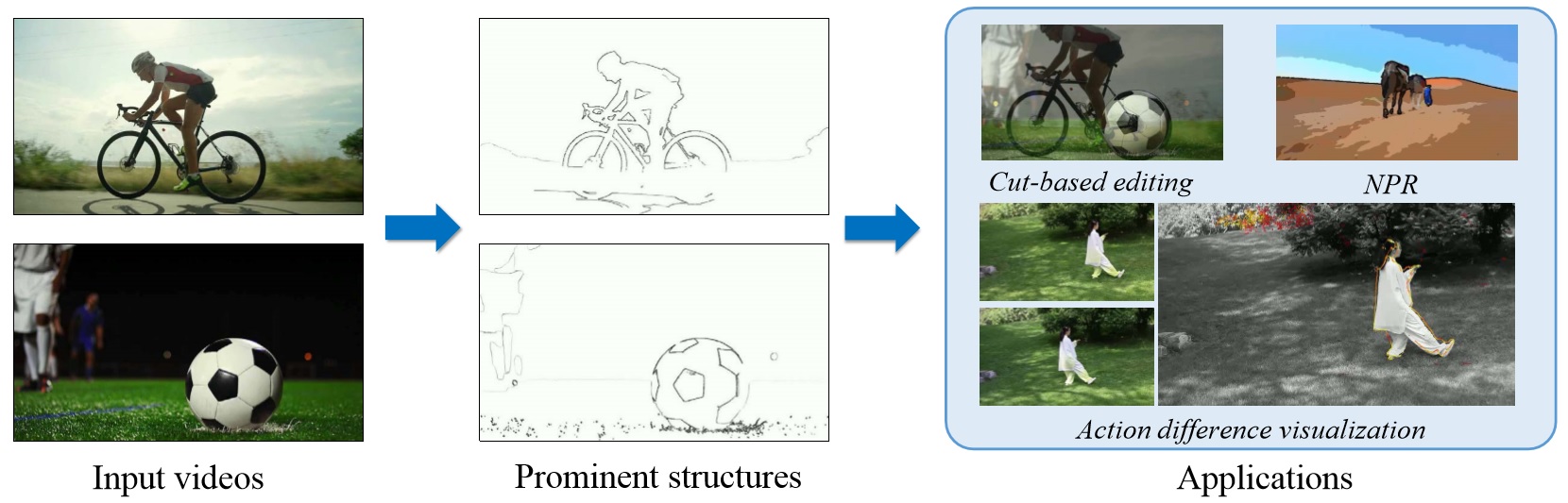

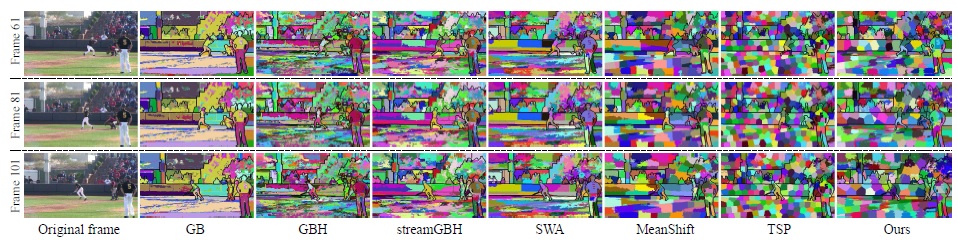

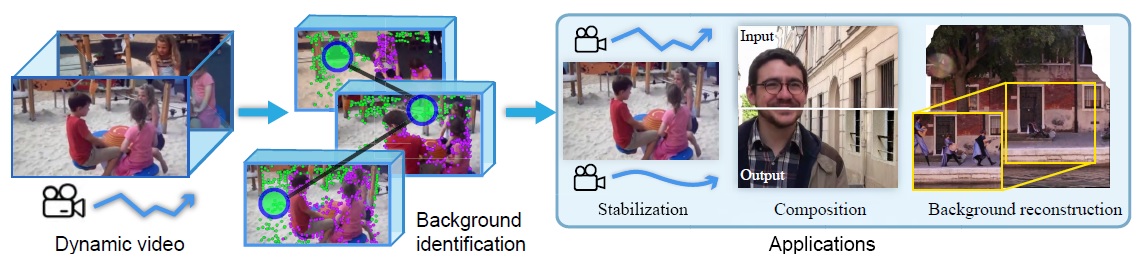

Prominent Structures for Video Analysis and Editing

IEEE Transactions on Visualization and Computer Graphics, 2021, Vol. 27, No. 7, 3305-3317.

Miao Wang, Xiao-Nan Fang, Guo-Wei Yang, Ariel Shamir, Shi-Min Hu

We present prominent structures in video, a representation of visually strong,

spatially sparse and temporally stable structural units, for use in video analysis and editing.

With a novel quality measurement of prominent structures in video, we develop a general framework

for prominent structure computation, and an ef?cient hierarchical structure alignment algorithm

between a pair of videos. The prominent structural unit map is proposed to encode both binary

prominence guidances and numerical strength and geometry details for each video frame.

Even though the detailed appearance of videos could be visually different, the proposed

alignment algorithm can ?nd candidate matched prominent structure sub-volumes. Prominent

structures in video support a wide range of video analysis and editing applications

including graphic match-cut between successive videos, instant cut editing,

finding transition portals from a video collection,

structure-aware video re-ranking, visualizing human action differences, etc.

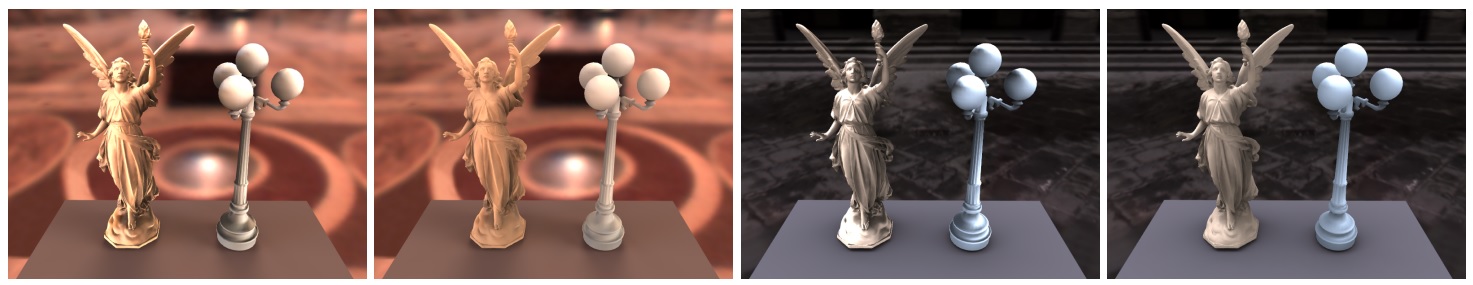

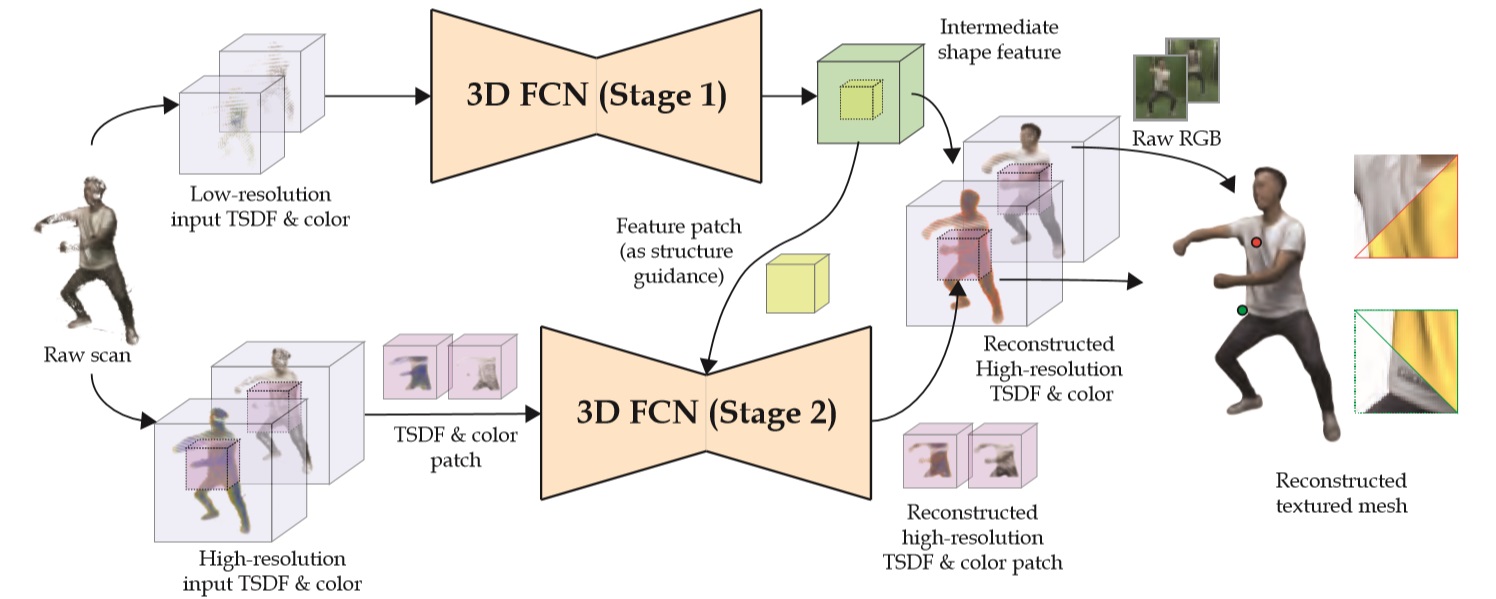

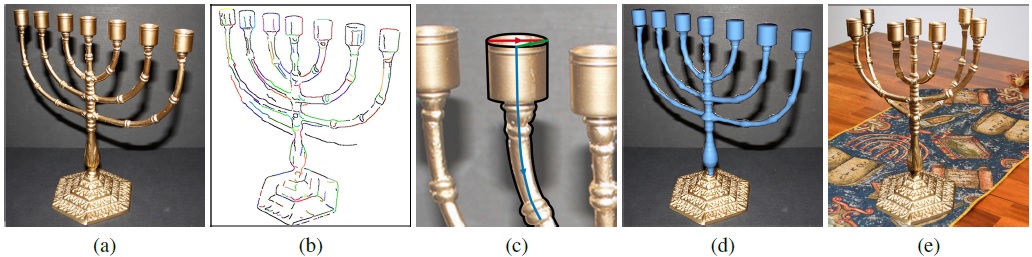

High-quality Textured 3D Shape Reconstruction with Cascaded Fully Convolutional Networks

IEEE Transactions on Visualization and Computer Graphics, 2021, Vol. 27, No.1, 83-97.

Zheng-Ning Liu, Yan-Pei Cao, Zheng-Fei Kuang, Leif Kobbelt, Shi-Min Hu

We present a learning-based approach to reconstructing high-resolution three-dimensional (3D)

shapes with detailed geometry and high-?delity textures. Albeit extensively studied,

algorithms for 3D reconstruction from multi-view depth-and-color (RGB-D) scans are

still prone to measurement noise and occlusions; limited scanning or capturing angles

also often lead to incomplete reconstructions. Propelled by recent advances in 3D deep

learning techniques, in this paper, we introduce a novel computation and memory efficient cascaded

3D convolutional network architecture, which learns to reconstruct implicit surface representations

as well as the corresponding color information from noisy and imperfect RGB-D maps. The proposed 3D

neural network performs reconstruction in a progressive and coarse-to-?ne manner, achieving

unprecedented output resolution and ?delity. Meanwhile, an algorithm for end-to-end training

of the proposed cascaded structure is developed. We further introduce Human10, a newly created

dataset containing both detailed and textured full body reconstructions as well as corresponding

raw RGB-D scans of 10 subjects. Qualitative and quantitative experimental results on both

synthetic and real-world datasets demonstrate that the presented approach outperforms existing