Kun Xu

I am an associate professor in the Department of Computer Science and Technology at Tsinghua University. I received my doctoral and bachelor's degrees from the Department of Computer Science and Technology, Tsinghua University, in 2009 and 2005, respectively.

My research interests include: real-time rendering, image/video editing, and 3D scene synthesis.

My contact info:

Email: xukun (at) tsinghua.edu.cn

Office: Ziqiang Tech Building 1-1002, Tsinghua University, Beijing, P.R. China, P.C.: 100084

Academic Services

- Program Committee Member, SIGGRAPH Technical Papers 2025

- Program Committee Member, SIGGRAPH Asia Technical Papers 2024

- Editorial Board, Computers & Graphics (2020-present)

- Program Co-Chair, Pacific Graphics 2015

Awards

- National Science and Technology Progress Award of China (Second Class Prize, 4th Achiever), 2018

- National Science Fund for Excellent Young Scholars by NSFC, 2018

- Young Elite Scientists Sponsorship Program by CAST, 2016

- The State Natural Science Award of China (Second Class Prize, 4th Achiever), 2015

- CCF-Intel Young Faculty Researcher Program (YFRP), 2013

- Outstanding Doctoral Dissertations of CCF, 2009

- Microsoft Fellowship, 2008

Publications

2024

GPU Coroutines for Flexible Splitting and Scheduling of Rendering Tasks

ACM Transactions on Graphics, 43(6), 281:1 – 281:24, 2024 (SIGGRAPH Asia 2024)

@article{Zheng2024GPUCoroutines,

author = {Zheng, Shaokun and Chen, Xin and Shi, Zhong and Yan, Ling-Qi and Xu, Kun},

title = {GPU Coroutines for Flexible Splitting and Scheduling of Rendering Tasks},

year = {2024},

issue_date = {December 2024},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {43},

number = {6},

issn = {0730-0301},

url = {https://doi.org/10.1145/3687766},

doi = {10.1145/3687766},

journal = {ACM Trans. Graph.},

month = nov,

articleno = {281},

numpages = {24},

keywords = {GPU, coroutine, rendering, program transformation, asynchronous programming, task scheduling}

}

Differentiable Photon Mapping using Generalized Path Gradients

ACM Transactions on Graphics, 43(6), 257:1 – 257:15, 2024 (SIGGRAPH Asia 2024)

@article{Xing2024DPMG,

title = {Differentiable Photon Mapping using Generalized Path Gradients},

author = {Xing, Jiankai and Li, Zengyu and Luan, Fujun and Xu, Kun},

year = {2024},

month = {dec},

url = {https://doi.org/10.1145/3687958},

articleno = {257},

numpages = {14},

journal = {ACM Trans. Graph.},

volume = {43},

number = {6},

publisher = {Association for Computing Machinery},

}

Filtering-Based Reconstruction for Gradient-Domain Rendering

SIGGRAPH Asia, 2024

@inproceedings{Yan2024Filtering,

author = {Yan, Difei and Zheng, Shaokun and Yan, Ling-Qi and Xu, Kun},

title = {Filtering-Based Reconstruction for Gradient-Domain Rendering},

year = {2024},

isbn = {9798400711312},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3680528.3687568},

doi = {10.1145/3680528.3687568},

booktitle = {SIGGRAPH Asia 2024 Conference Papers},

articleno = {69},

numpages = {10},

keywords = {Gradient-Domain Rendering, Reconstruction, Optimization},

location = {},

series = {SA '24}

}

2023

Extended Path Space Manifolds for Physically Based Differentiable Rendering

SIGGRAPH Asia, 2023

@inproceedings{Xing2023EPSM,

title = {Extended Path Space Manifolds for Physically Based Differentiable Rendering},

author = {Xing, Jiankai and Hu, Xuejun and Luan, Fujun and Yan, Ling-Qi and Xu, Kun},

year = {2023},

publisher = {ACM},

booktitle = {SIGGRAPH Asia 2023 Conference Papers},

url = {https://doi.org/10.1145/3610548.3618195},

articleno = {62},

numpages = {11}

}

|

|

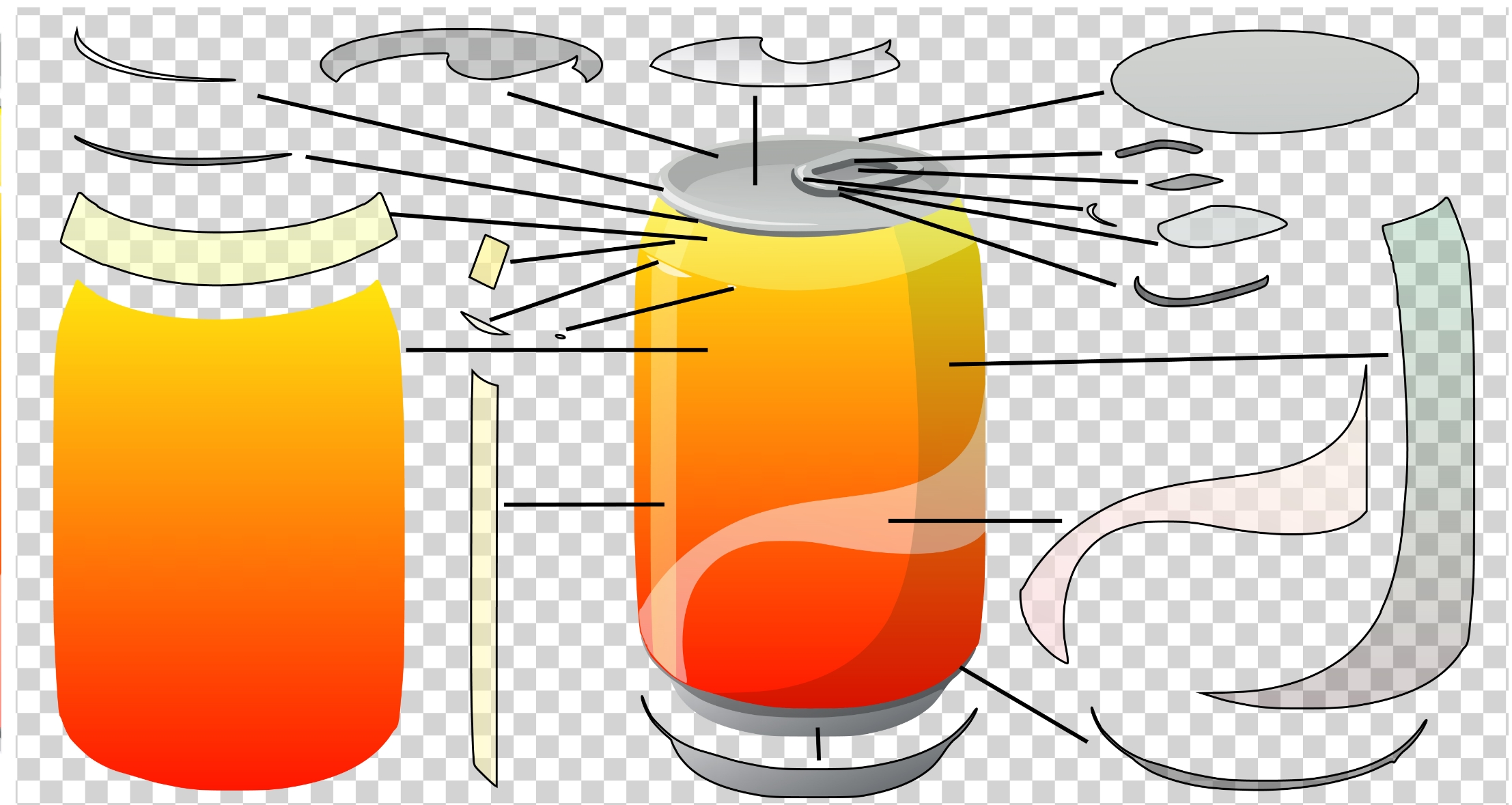

Image vectorization and editing via linear gradient layer decompositionACM Transactions on Graphics, 42(4), 97:1 – 97:13, 2023 (SIGGRAPH 2023) project page paper supplemental slides bibtex @article{Du2023Layer,

author = {Du, Zheng-Jun and Kang, Liang-Fu and Tan, Jianchao and Gingold, Yotam and Xu, Kun},

title = {Image Vectorization and Editing via Linear Gradient Layer Decomposition},

year = {2023},

issue_date = {August 2023},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

volume = {42},

number = {4},

issn = {0730-0301},

url = {https://doi.org/10.1145/3592128},

doi = {10.1145/3592128},

journal = {ACM Trans. Graph.},

month = {jul},

articleno = {97},

numpages = {13},

keywords = {vectorization, compositing, layers, recoloring, gradient, color space, images, RGB}

}

2022

LuisaRender: A High-Performance Rendering Framework with Layered and Unified Interfaces on Stream Architectures

ACM Transactions on Graphics, 41(6), 232:1 – 232:19, 2022 (SIGGRAPH Asia 2022)

@article{Zheng2022LuisaRender,

title = {LuisaRender: A High-Performance Rendering Framework with Layered and Unified Interfaces on Stream Architectures},

author = {Zheng, Shaokun and Zhou, Zhiqian and Chen, Xin and Yan, Difei and Zhang, Chuyan and Geng, Yuefeng and Gu, Yan and Xu, Kun},

journal = {ACM Trans. Graph.},

volume = {41},

number = {6},

year = {2022},

issue_date = {December 2022},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

issn = {0730-0301},

url = {https://doi.org/10.1145/3550454.3555463},

doi = {10.1145/3550454.3555463},

month = {dec},

articleno = {232},

numpages = {19}

}

Differentiable Rendering using RGBXY Derivatives and Optimal Transport

ACM Transactions on Graphics, 41(6), 189:1 – 189:13, 2022 (SIGGRAPH Asia 2022)

@article{Xing2022drot,

title = {Differentiable Rendering using RGBXY Derivatives and Optimal Transport},

author = {Xing, Jiankai and Luan, Fujun and Yan, Ling-Qi and Hu, Xuejun and Qian, Houde and Xu, Kun},

journal = {ACM Trans. Graph.},

volume = {41},

number = {6},

year = {2022},

issue_date = {December 2022},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3550454.3555479},

issn = {0730-0301},

doi = {10.1145/3550454.3555479},

month = {dec},

articleno = {189},

numpages = {13}

}

Unbiased Caustics Rendering Guided by Representative Specular Paths

In Proceedings of SIGGRAPH Asia, 2022

@inproceedings{Li2022Pathcut,

author = {He Li and Beibei Wang and Changhe Tu and Kun Xu and Nicolas Holzschuch and Ling-Qi Yan},

title = {Unbiased Caustics Rendering Guided by Representative Specular Paths},

booktitle={Proceedings of SIGGRAPH Asia 2022},

year = {2022},

publisher = {Association for Computing Machinery},

url = {https://doi.org/10.1145/3550469.3555381},

doi = {10.1145/3550469.3555381},

}

Neural Color Operators for Sequential Image Retouching

In Proceedings of the European Conference on Computer Vision (ECCV), 2022

@InProceedings{wang2022neurop,

author = {Wang, Yili and Li, Xin and Xu, Kun and He, Dongliang and Zhang, Qi and Li, Fu and Ding, Errui},

title = {Neural Color Operators for Sequential Image Retouching},

booktitle = {Computer Vision -- ECCV 2022},

year = {2022},

}

|

|

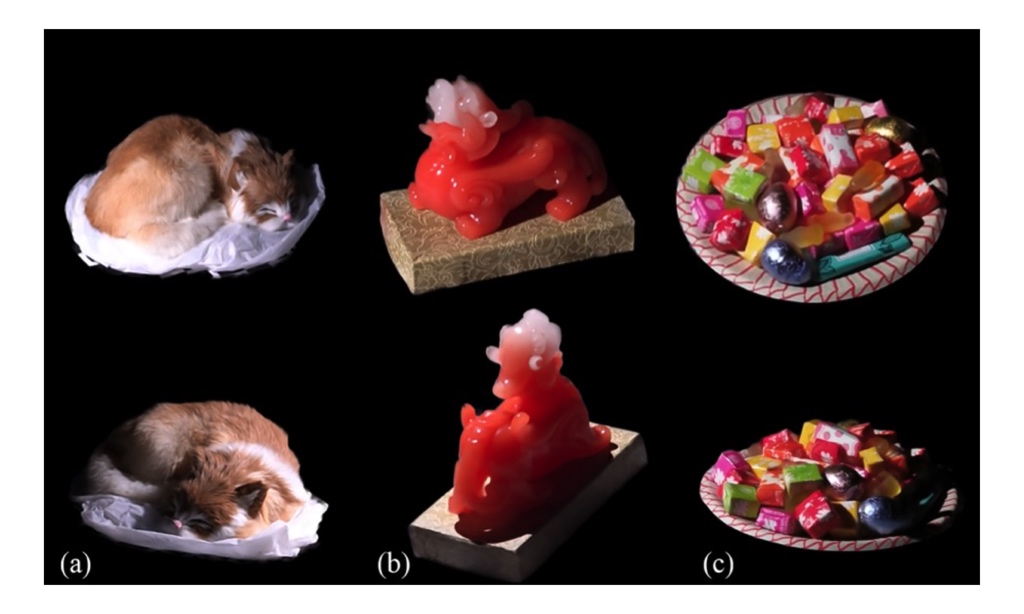

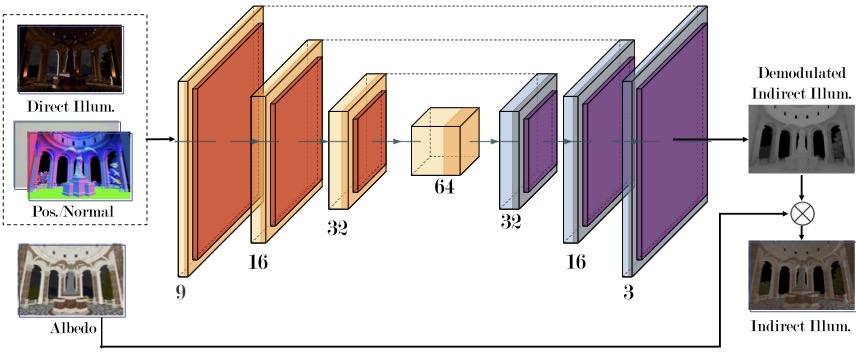

Neural Global Illumination: Interactive Indirect Illumination Prediction under Dynamic Area LightsIEEE Transactions on Visualization and Computer Graphics (Early Access), 2022 paper supplemental video bibtex @article{gao2022NGL,

author={Gao, Duan and Mu, Haoyuan and Xu, Kun},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Neural Global Illumination: Interactive Indirect Illumination Prediction under Dynamic Area Lights},

year={2022},

volume={},

number={},

pages={1-17},

doi={10.1109/TVCG.2022.3209963}

}

An Image-to-Video Model for Real-Time Video Enhancement

In Proceedings of the 30th ACM International Conference on Multimedia, 2022

@inproceedings{she2022video,

author = {She, Dongyu and Xu, Kun},

title = {An Image-to-Video Model for Real-Time Video Enhancement},

year = {2022},

isbn = {9781450392037},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3503161.3548325},

doi = {10.1145/3503161.3548325},

booktitle = {Proceedings of the 30th ACM International Conference on Multimedia},

pages = {1837--1846},

numpages = {10},

keywords = {image enhancement, image-to-video model, video enhancement, temporal consistency},

location = {Lisboa, Portugal},

series = {MM '22}

}

2021

Ensemble Denoising for Monte Carlo Renderings

ACM Transactions on Graphics, 40(6), 274:1 – 274:17, 2021 (SIGGRAPH Asia 2021)

@article{Zheng:2021:EnsembleDenoising,

author = {Zheng, Shaokun and Zheng, Fengshi and Xu, Kun and Yan, Ling-Qi},

title = {Ensemble Denoising for Monte Carlo Renderings},

journal = {ACM Transactions on Graphics},

volume = {40},

number = {6},

year = {2021},

articleno = {274},

numpages = {17},

pages = {274:1--274:17}

}

Fast and Accurate Spherical Harmonics Products

ACM Transactions on Graphics, 40(6), 280:1 – 280:14, 2021 (SIGGRAPH Asia 2021)

@article{Xin:2021:SHProducts,

author = {Xin, Hanggao and Zhou, Zhiqian and An, Di and Yan, Ling-Qi and Xu, Kun and Hu, Shi-Min and Yau, Shing-Tung},

title = {Fast and Accurate Spherical Harmonics Products},

journal = {ACM Transactions on Graphics},

volume = {40},

number = {6},

year = {2021},

articleno = {280},

numpages = {14},

pages = {280:1--280:14}

}

|

|

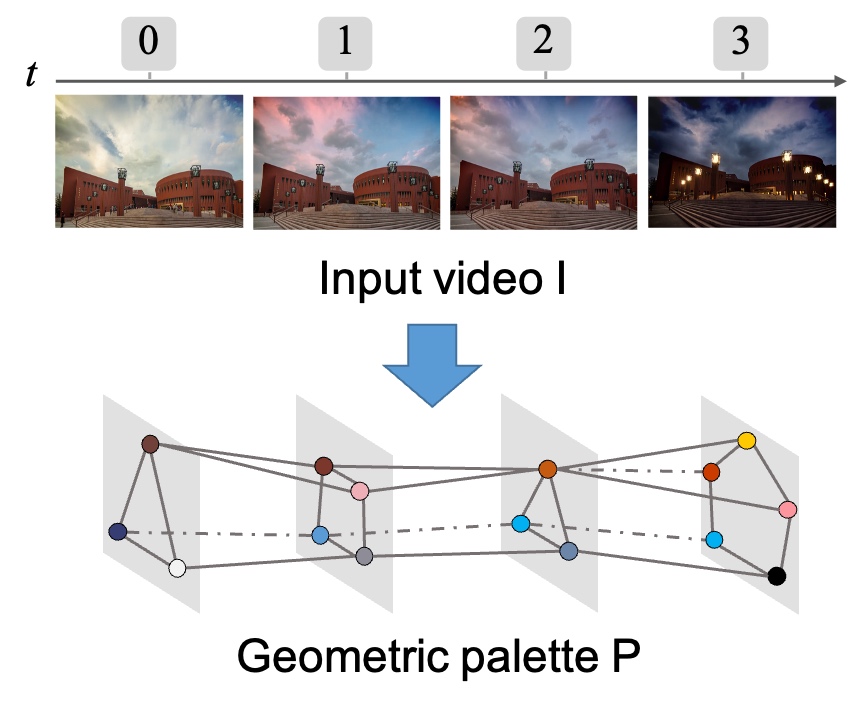

Video Recoloring via Spatial-Temporal Geometric PalettesACM Transactions on Graphics, 40(4), 150:1-150:16,2021 (SIGGRAPH 2021) paper slides video supplemental code bibtex @article{Du:2021:VRS,

author = {Du, Zheng-Jun and Lei, Kai-Xiang and Xu, Kun and Tan, Jianchao and Gingold, Yotam},

title = {Video Recoloring via Spatial-Temporal Geometric Palettes},

journal = {ACM Transactions on Graphics},

volume = {40},

number = {4},

year = {2021},

month = aug,

articleno = {150},

numpages = {16},

pages = {150:1--150:16},

keywords = {Palette, Color, Grading, Painting, Image, Video, Spatial-temporal, Recoloring, Layer, RGB}

}

Hierarchical Layout-Aware Graph Convolutional Network for Unified Aesthetics Assessment

In Proceedings of IEEE CVPR 2021

@InProceedings{She:2021:AestheticsAssessment,

author = {She, Dongyu and Lai, Yu-Kun and Yi, Gaoxiong and Xu, Kun},

title = {Hierarchical Layout-Aware Graph Convolutional Network for Unified Aesthetics Assessment},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2021},

pages = {8475-8484}

}

2020

Deferred Neural Lighting: Free-viewpoint Relighting from Unstructured Photographs

ACM Transactions on Graphics, 39(6), 258:1 – 258:15, 2020 (SIGGRAPH Asia 2020)

@article{Gao2020DeferredNeuralLighting,

author = {Gao, Duan and Chen, Guojun and Dong, Yue and Peers, Pieter and Xu, Kun and Tong, Xin},

title = {Deferred Neural Lighting: Free-Viewpoint Relighting from Unstructured Photographs},

year = {2020},

volume = {39},

number = {6},

doi = {10.1145/3414685.3417767},

journal = {ACM Transactions on Graphics},

month = nov,

articleno = {258},

numpages = {15},

keywords = {free-viewpoint, neural rendering, relighting},

pages = {258:1--258:15},

}

Lightweight Bilateral Convolutional Neural Networks for Interactive Single-bounce Diffuse Indirect Illumination

IEEE Transactions on Visualization and Computer Graphics, 2020, accepted.

@ARTICLE{Xin2020BilateralCNN,

author={Xin, Hanggao and Zheng, Shaokun and Xu, Kun and Yan, Ling-Qi},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={Lightweight Bilateral Convolutional Neural Networks for Interactive Single-bounce Diffuse Indirect Illumination},

year={2020},

volume={},

number={},

pages={1-1},

doi={10.1109/TVCG.2020.3023129}

}

2019

Adversarial Monte Carlo Denoising with Conditioned Auxiliary Feature Modulation

ACM Transactions on Graphics, 38(6), 224:1 – 224:12, 2019 (SIGGRAPH Asia 2019)

@article{Xu2019AdversarialMCDenoising,

author = {Bing Xu and Junfei Zhang and Rui Wang and Kun Xu and Yong-Liang Yang and Chuan Li and Rui Tang},

title = {Adversarial Monte Carlo Denoising with Conditioned Auxiliary Feature Modulation},

journal = {ACM Transactions on Graphics},

volume = {38},

number = {6},

year = {2019},

pages = {224:1--224:12},

articleno = {224},

numpages = {12},

}

An Improved Geometric Approach for Palette-based Image Decomposition and Recoloring

Computer Graphics Forum, 38(7), 11-22, 2019 (PG 2019)

@article{Wang2019ImprovedPalette,

author = {Wang, Yili and Liu, Yifan and Xu, Kun},

title = {An Improved Geometric Approach for Palette-based Image Decomposition and Recoloring},

journal = {Computer Graphics Forum},

volume = {38},

number = {7},

pages = {11--22},

year = {2019}

}

Adaptive BRDF-Oriented Multiple Importance Sampling of Many Lights

Computer Graphics Forum, 38(4), 123-133, 2019 (EGSR 2019)

@article{Liu2019BRDFOrientedSampling,

author = {Liu, Yifan and Xu, Kun and Yan, Ling-Qi},

title = {Adaptive BRDF-Oriented Multiple Importance Sampling of Many Lights},

journal = {Computer Graphics Forum},

volume = {38},

number = {4},

pages = {123--133},

year = {2019}

}

| |

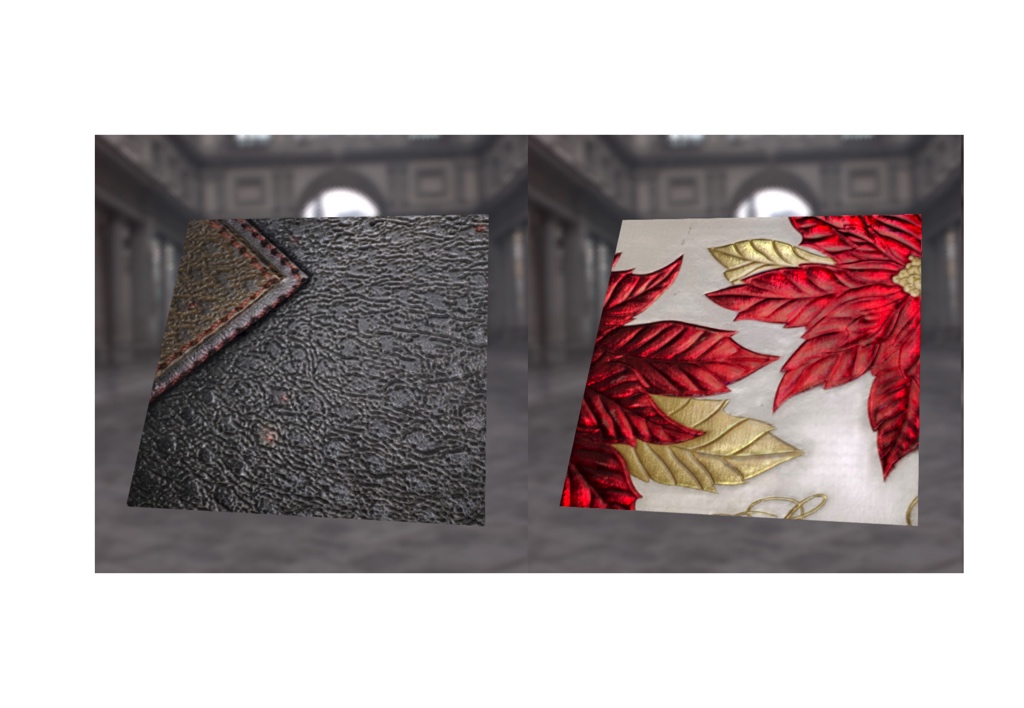

Deep Inverse Rendering for High-resolution SVBRDF Estimation from an Arbitrary Number of ImagesACM Transactions on Graphics, 38(4), 134:1-134:15, 2019. (SIGGRAPH 2019) @article{Gao2019EstimationSVBRDF,

author = {Gao, Duan and Li, Xiao and Dong, Yue and Peers, Pieter and Xu, Kun and Tong, Xin},

title = {Deep Inverse Rendering for High-resolution SVBRDF Estimation from an Arbitrary Number of Images},

journal = {ACM Transactions on Graphics},

issue_date = {July 2019},

volume = {38},

number = {4},

month = jul,

year = {2019},

issn = {0730-0301},

pages = {134:1--134:15},

articleno = {134},

numpages = {15},

doi = {10.1145/3306346.3323042},

}

| |

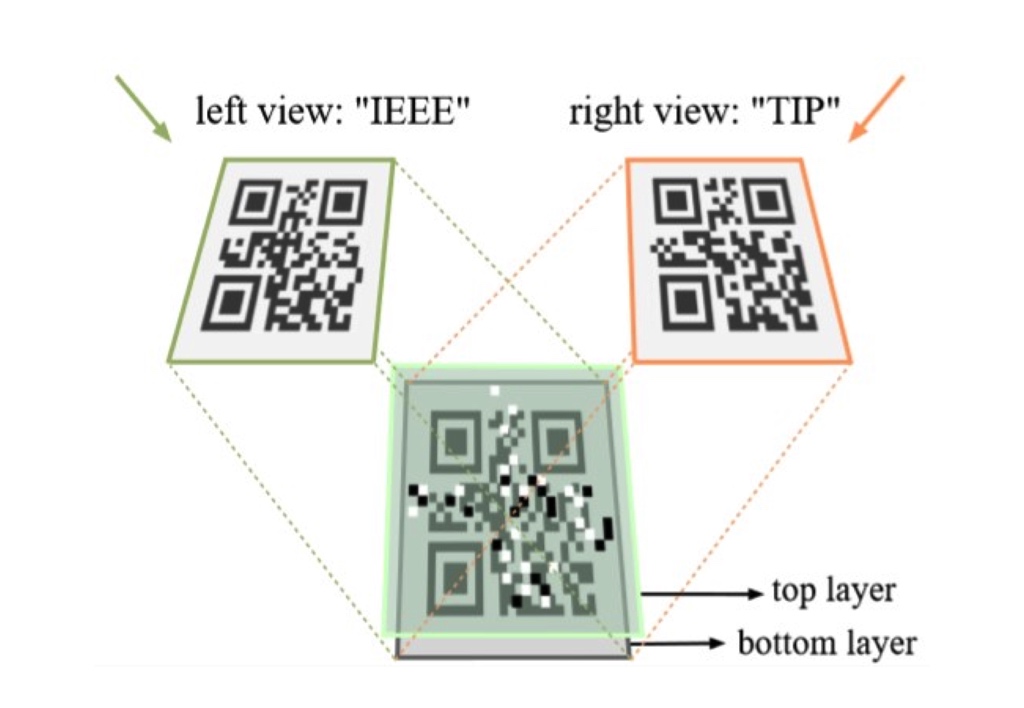

Two-Layer QR CodesIEEE Transactions on Image Processing, 28(9):4413-4428,2019. @article{Yuan19TwoLayerQRCodes,

author = {Tailing Yuan and Yili Wang and Kun Xu and Ralph R. Martin and Shi-Min Hu},

title = {Two-Layer QR Codes},

journal = {IEEE Transactions on Image Processing},

volume = {28},

number = {9},

year = {2019},

pages = {4413--4428},

}

| |

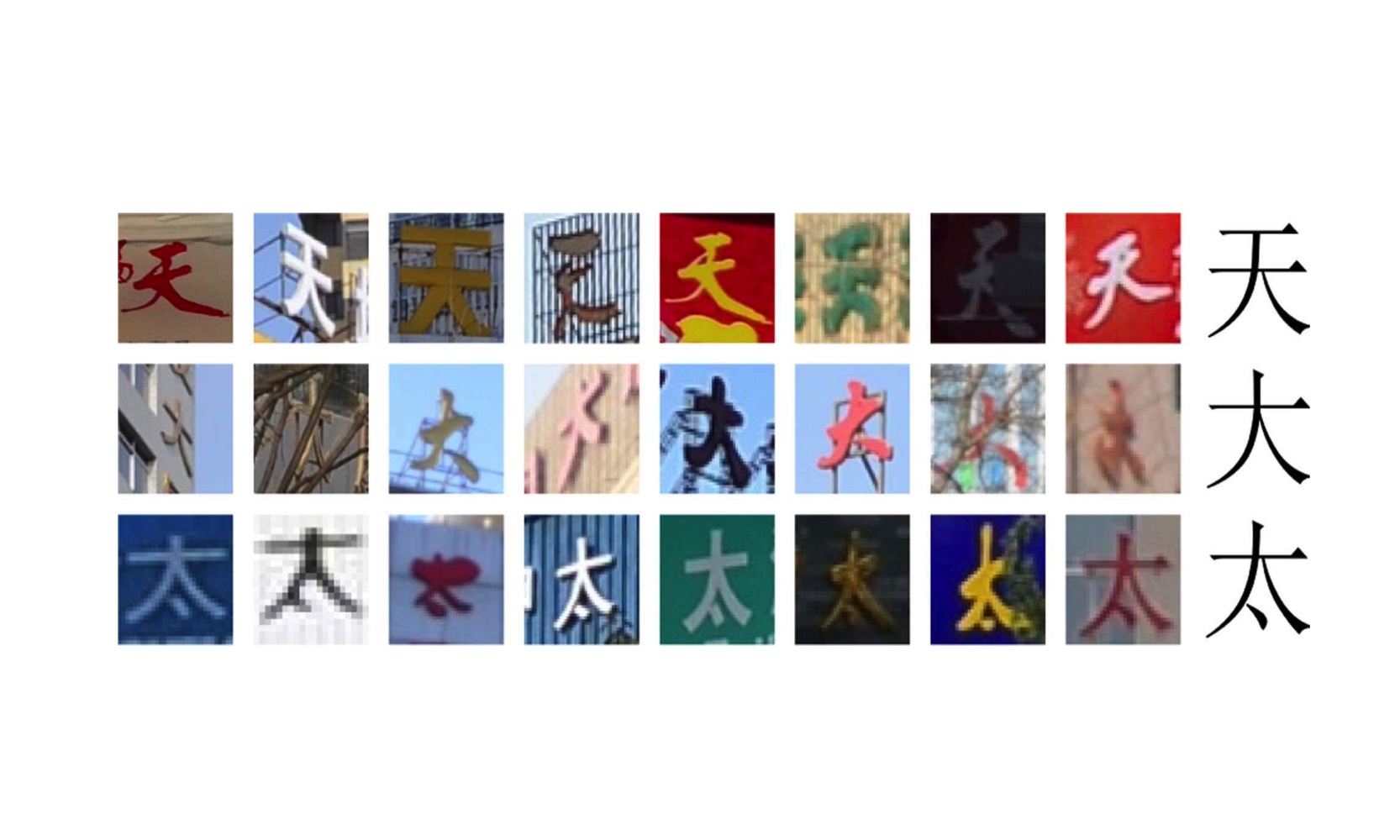

A Large Chinese Text Dataset in the WildJournal of Computer Science and Technology, 34(3), 509-521, 2019. project page paper supplemental dataset bibtex @article{Yuan19CTW,

author = {Tai-Ling Yuan and Zhe Zhu and Kun Xu and Cheng-Jun Li and Tai-Jiang Mu and Shi-Min Hu},

title = {A Large Chinese Text Dataset in the Wild},

journal = {Journal of Computer Science and Technology},

volume = {34},

number = {3},

year = {2019},

pages = {509--521},

}

2018

Real-time High-fidelity Surface Flow Simulation

IEEE Transactions on Visualization and Computer Graphics, 24(8), 2411-2423, 2018

@article{Ren18SurfaceFlow,

author = {Bo Ren and Tailing Yuan and Chenfeng Li and Kun Xu and Shi-Min Hu},

title = {Real-time High-fidelity Surface Flow Simulation},

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = {24},

number = {8},

year = {2018},

pages = {2411--2423},

}

Computational Design of Transforming Pop-up Books

ACM Transactions on Graphics, 37(1), 8:1 – 8:14, 2018 (presented at SIGGRAPH 2018)

@article{Xiao18TransformPopup,

author = {Xiao, Nan and Zhu, Zhe and Martin, Ralph R. and Xu, Kun and Lu, Jia-Ming and Hu, Shi-Min},

title = {Computational Design of Transforming Pop-up Books},

journal = {ACM Transactions on Graphics},

volume = {37},

number = {1},

year = {2018},

pages = {8:1--8:14},

}

| |

2017 |

|

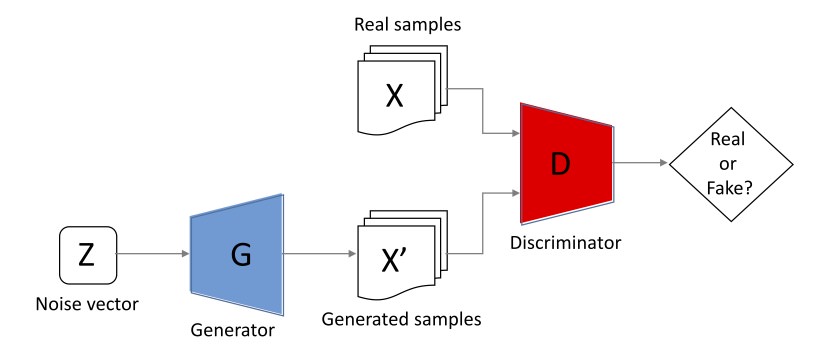

A survey of image synthesis and editing with generative adversarial networksTsinghua Science and Technology, 22(6), 660-674, 2017. @article{Wu17GANSurvey,

author = {Xian Wu and Kun Xu and Peter Hall},

title = {A survey of image synthesis and editing with generative adversarial networks},

journal = {Tsinghua Science and Technology},

volume = {22},

number = {6},

year = {2017},

pages = {660--674},

}

Static Scene Illumination Estimation from Video with Applications

Journal of Computer Science and Technology, 32(3), 430-442, 2017

@article{Liu17VideoInsert,

author = {Bin Liu and Kun Xu and Ralph Martin},

title = {Static Scene Illumination Estimation from Video with Applications},

journal = {Journal of Computer Science and Technology},

volume = {32},

number = {3},

year = {2017},

pages = {430--442},

}

2016

Efficient, Edge-Aware, Combined Color Quantization and Dithering

IEEE Transactions on Image Processing, 25(3), 1152-1162, 2016

@article{Huang16ColorQuantization,

author = {Hao-Zhi Huang and Kun Xu and Ralph R. Martin and Fei-Yue Huang and Shi-Min Hu},

title = {Efficient, Edge-Aware, Combined Color Quantization and Dithering},

journal = {IEEE Transactions on Image Processing},

volume = {25},

number = {3},

year = {2016},

pages = {1152--1162},

}

| |

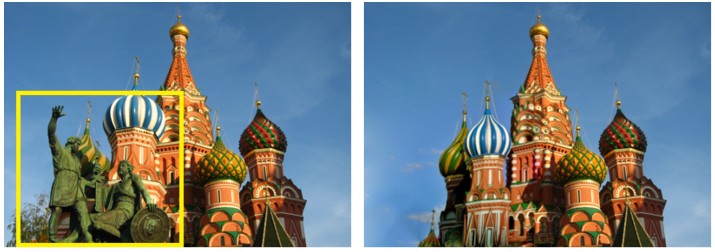

Faithful Completion of Images of Scenic Landmarks using Internet ImagesIEEE Transactions on Visualization and Computer Graphics, 22(8), 1945 - 1958, 2016. @article{Zhu16FaithfulCompletion,

author = {Zhu, Zhe and Huang, Hao-Zhi and Tan, Zhi-Peng and Xu, Kun and Hu, Shi-Min},

title = {Faithful Completion of Images of Scenic Landmarks using Internet Images},

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = {22},

number = {8},

year = {2016},

pages = {1945--1958},

}

| |

2015 |

|

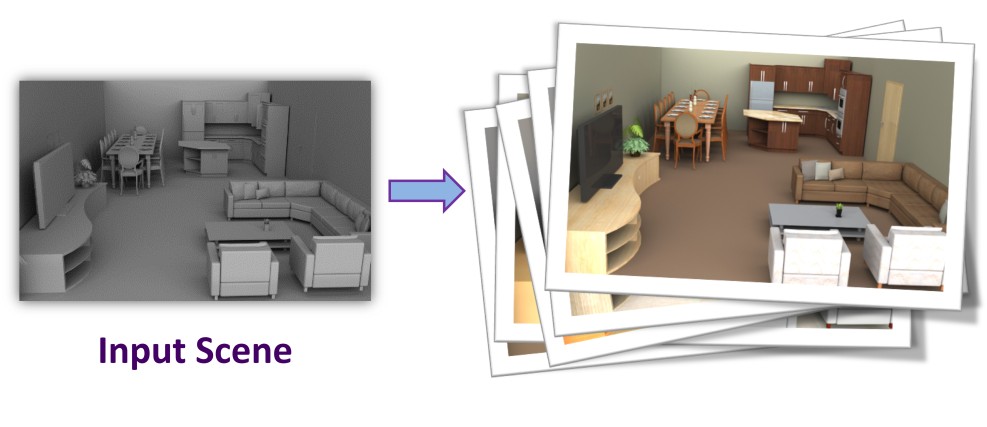

Magic Decorator: Automatic Material Suggestion for Indoor Digital ScenesACM Transactions on Graphics, 34(6), 232:1 - 232:11, 2015. (SIGGRAPH Asia 2015) project page paper 22M slides 85M supplemental 45M video 45M bibtex @article{Chen15MagicDecorator,

author = {Chen, Kang and Xu, Kun and Yu, Yizhou and Wang, Tian-Yi and Hu, Shi-Min},

title = {Magic Decorator: Automatic Material Suggestion for Indoor Digital Scenes},

journal = {ACM Transactions on Graphics},

volume = {34},

number = {6},

year = {2015},

pages = {232:1--232:11},

}

2014

A Practical Algorithm for Rendering Interreflections with All-frequency BRDFs

ACM Transactions on Graphics, 33(1), 10:1 – 10:16, 2014 (presented at SIGGRAPH 2014)

@article{Xu14Interreflection,

author = {Xu, Kun and Cao, Yan-Pei and Ma, Li-Qian and Dong, Zhao and Wang, Rui and Hu, Shi-Min},

title = {A Practical Algorithm for Rendering Interreflections with All-frequency BRDFs},

journal = {ACM Transactions on Graphics},

volume = {33},

number = {1},

year = {2014},

pages = {10:1--10:16},

}

| |

2013 |

|

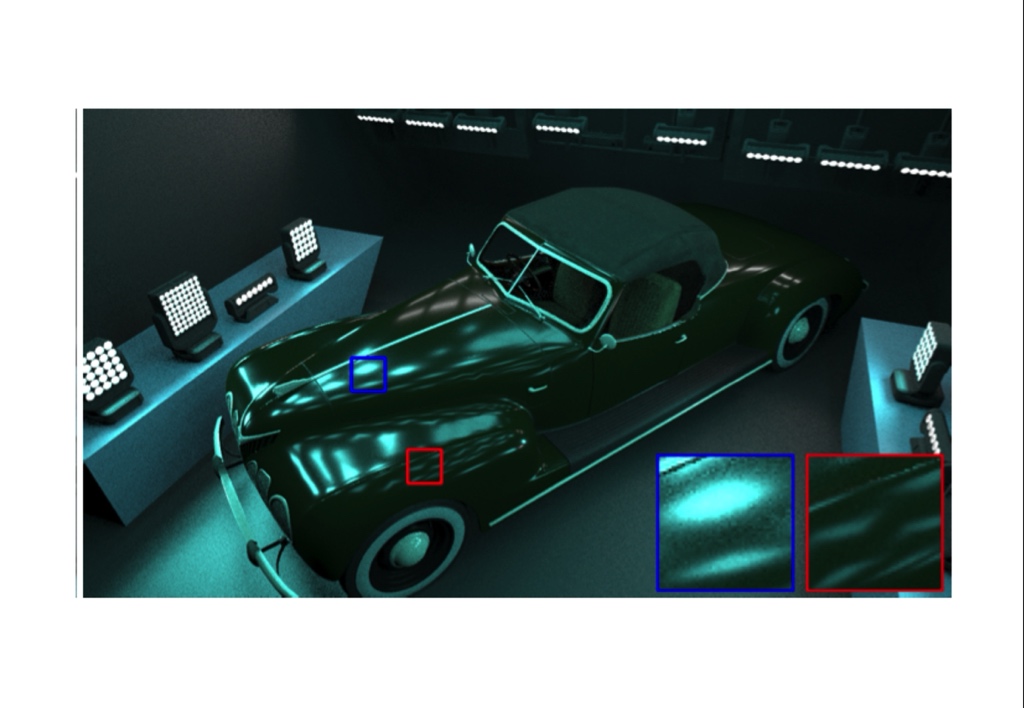

Anisotropic Spherical GaussiansACM Transactions on Graphics 32(6), 209:1 - 209:11, 2013. (SIGGRAPH Asia 2013). project page paper 2.3M slides 16.5M supplemental 1.7M video 45.8M bibtex @article{Xu13AnisotropicSG,

author = {Kun Xu and Wei-Lun Sun and Zhao Dong and Dan-Yong Zhao and Run-Dong Wu and Shi-Min Hu},

title = {Anisotropic Spherical Gaussians},

journal = {ACM Transactions on Graphics},

volume = {32},

number = {6},

year = {2013},

pages = {209:1--209:11},

}

| |

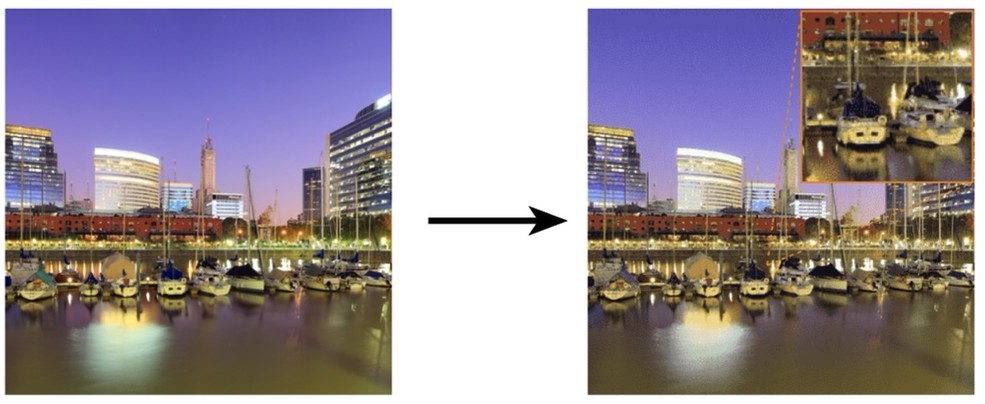

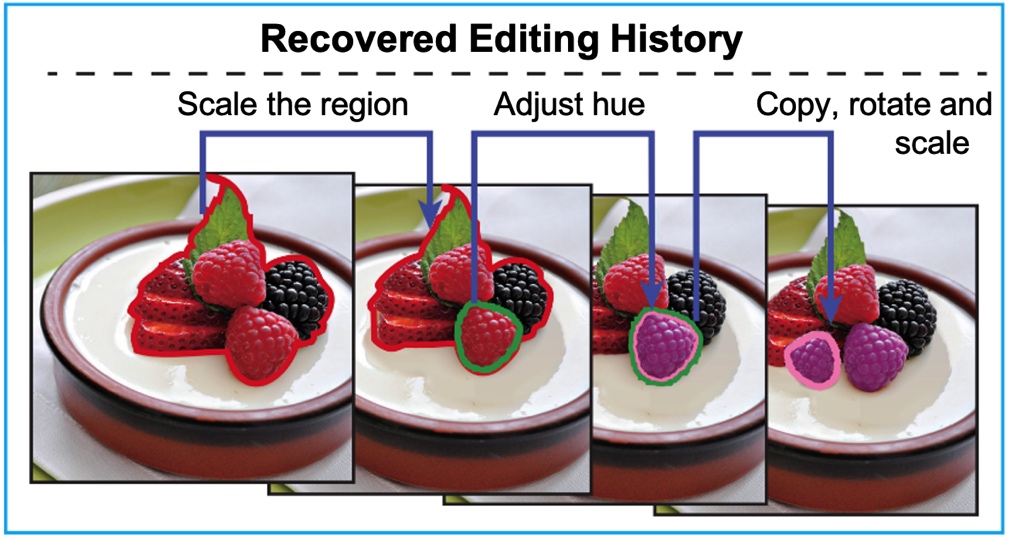

Inverse Image Editing: Recovering a Semantic Editing History from a Before-and-After Image PairACM Transactions on Graphics 32(6), 194:1 - 194:11, 2013. (SIGGRAPH Asia 2013). project page paper 8.2M slides 43.4M supplemental 13.6M bibtex @article{Hu13InverseImageEditing,

author = {Shi-Min Hu and Kun Xu and Li-Qian Ma and Bin Liu and Bi-Ye Jiang and Jue Wang},

title = {Inverse Image Editing: Recovering a Semantic Editing History from a Before-and-After Image Pair},

journal = {ACM Transactions on Graphics},

volume = {32},

number = {6},

year = {2013},

pages = {194:1--194:11},

}

Change Blindness Images

IEEE Transactions on Visualization and Computer Graphics, 19(11), 1808-1819, 2013

@article{Ma13ChangeBlindness,

author = {Li-Qian Ma and Kun Xu and Tien-Tsin Wong and Bi-Ye Jiang and Shi-Min Hu},

title = {Change Blindness Images},

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = {19},

number = {11},

year = {2013},

pages = {1808--1819},

}

| |

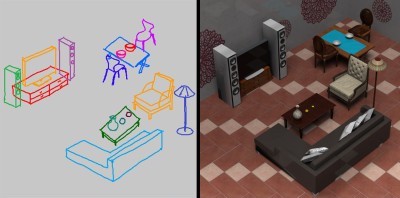

Sketch2Scene: Sketch-based Co-retrieval and Co-placement of 3D ModelsACM Transactions on Graphics 32(4), 123:1-123:12, 2013. (SIGGRAPH 2013). project page paper 6.1M pptx 33.0M supplemental 4.4M video 12.5M bibtex @article{Xu12Sketch2scene,

author = {Kun Xu and Kang Chen and Hongbo Fu and Wei-Lun Sun and Shi-Min Hu},

title = {Sketch2Scene: sketch-based co-retrieval and co-placement of 3D models},

journal = {ACM Transactions on Graphics},

volume = {32},

number = {4},

year = {2013},

pages = {123:1--123:12},

}

2012

Accurate Translucent Material Rendering under Spherical Gaussian Lights

Computer Graphics Forum, 31(7), 2267-2276, 2012 (Pacific Graphics 2012)

@article{Yan12GaussianTranslucent,

author = {Ling-Qi Yan and Yahan Zhou and Kun Xu and Rui Wang},

title = {Accurate Translucent Material Rendering under Spherical Gaussian Lights},

journal = {Computer Graphics Forum},

volume = {31},

number = {7},

year = {2012},

pages = {2267--2276},

}

Efficient Antialiased Edit Propagation for Images and Videos

Computers & Graphics, 36(8), 1005-1012, 2012

@article{Ma12AntialiasedEditPropagation,

author = {Li-Qian Ma and Kun Xu},

title = {Efficient Antialiased Edit Propagation for Images and Videos},

journal = {Computers \& Graphics},

volume = {36},

number = {8},

year = {2012},

pages = {1005--1012},

}

2011

Interactive Hair Rendering and Appearance Editing under Environment Lighting

ACM Transactions on Graphics, 30(6), 173:1 – 173:10, 2011 (SIGGRAPH Asia 2011)

@article{Xu11sigasia,

author = {Kun Xu and Li-Qian Ma and Bo Ren and Rui Wang and Shi-Min Hu},

title = {Interactive Hair Rendering and Appearance Editing under Environment Lighting},

journal = {ACM Transactions on Graphics},

volume = {30},

number = {6},

year = {2011},

pages = {173:1--173:10},

articleno = {173},

}

2009

Efficient Affinity-based Edit Propagation using K-D Tree

ACM Transactions on Graphics, 28(5), 118:1 – 118:6, 2009 (SIGGRAPH Asia 2009)

@article{Xu09sigasia,

author = {Kun Xu and Yong Li and Tao Ju and Shi-Min Hu and Tian-Qiang Liu},

title = {Efficient Affinity-based Edit Propagation using K-D Tree},

journal = {ACM Transactions on Graphics},

volume = {28},

number = {5},

year = {2009},

pages = {118:1--118:6},

articleno = {118},

}

Edit Propagation on Bidirectional Texture Functions

Computer Graphics Forum, 28(7), 1871-1877, 2009 (Pacific Graphics 2009)

@article{Xu09pg,

author = {Kun Xu and Jiaping Wang and Xin Tong and Shi-Min Hu and Baining Guo},

title = {Edit Propagation on Bidirectional Texture Functions},

journal = {Computer Graphics Forum},

volume = {28},

number = {7},

year = {2009},

pages = {1871--1877},

}

2008

Spherical Piecewise Constant Basis Functions for All-Frequency Precomputed Radiance Transfer

IEEE Transactions on Visualization and Computer Graphics, 14(2), 454-467, 2008

@article{Xu08tvcg,

author = {Kun Xu and Yun-Tao Jia and Hongbo Fu and Shi-Min Hu and Chiew-Lan Tai},

title = {Spherical Piecewise Constant Basis Functions for All-Frequency Precomputed Radiance Transfer},

journal = {IEEE Transactions on Visualization and Computer Graphics},

volume = {14},

number = {2},

year = {2008},

pages = {454--467},

}

2007

Real-time homogeneous translucent material editing

Computer Graphics Forum, 26(3), 545-552, 2007 (EuroGraphics 2007)

@article{Xu07eg,

author = {Kun Xu and Yue Gao and Yong Li and Tao Ju and Shi-Min Hu},

title = {Real-time Homogenous Translucent Material Editing},

journal = {Computer Graphics Forum},

volume = {26},

number = {3},

year = {2007},

pages = {545--552},

}

2006

Spherical Harmonics Scaling

Pacific Conference on Computer Graphics and Applications, Oct 2006

@article{Wang06pg,

author = {Jiaping Wang and Kun Xu and Kun Zhou and Stephen Lin and Shi-Min Hu and Baining Guo},

title = {Spherical Harmonics Scaling},

journal = {The Visual Computer},

volume = {22},

month = {Sept},

year = {2006},

pages = {713-720}

}
