Traffic-Sign Detection and Classification in the Wild

Zhe Zhu, Dun Liang, Songhai Zhang, Xiaolei Huang, Baoli Li, Shimin Hu

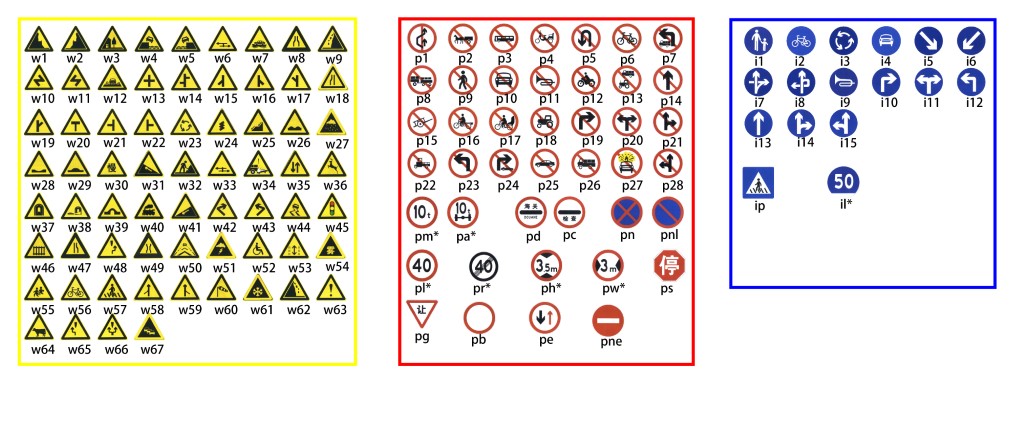

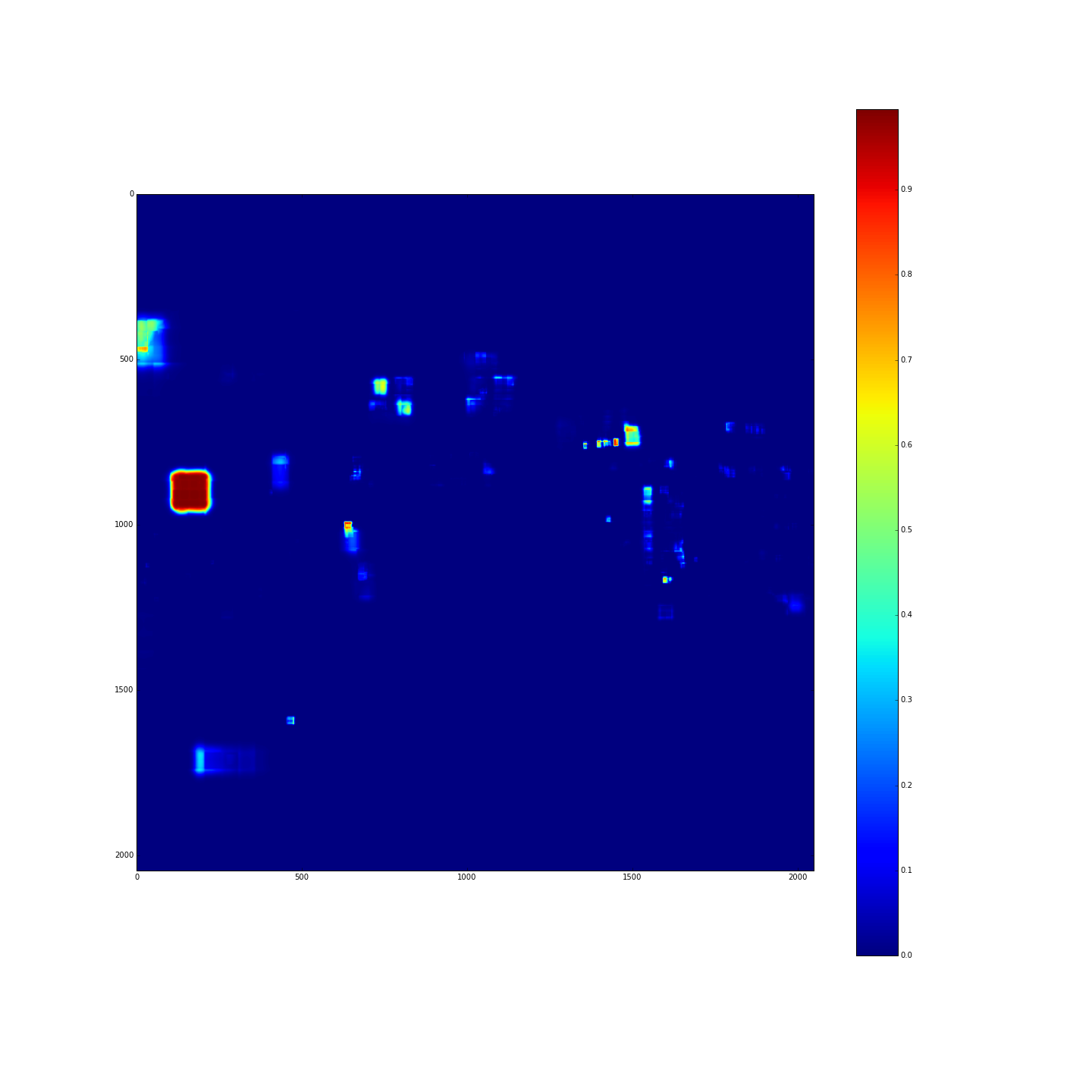

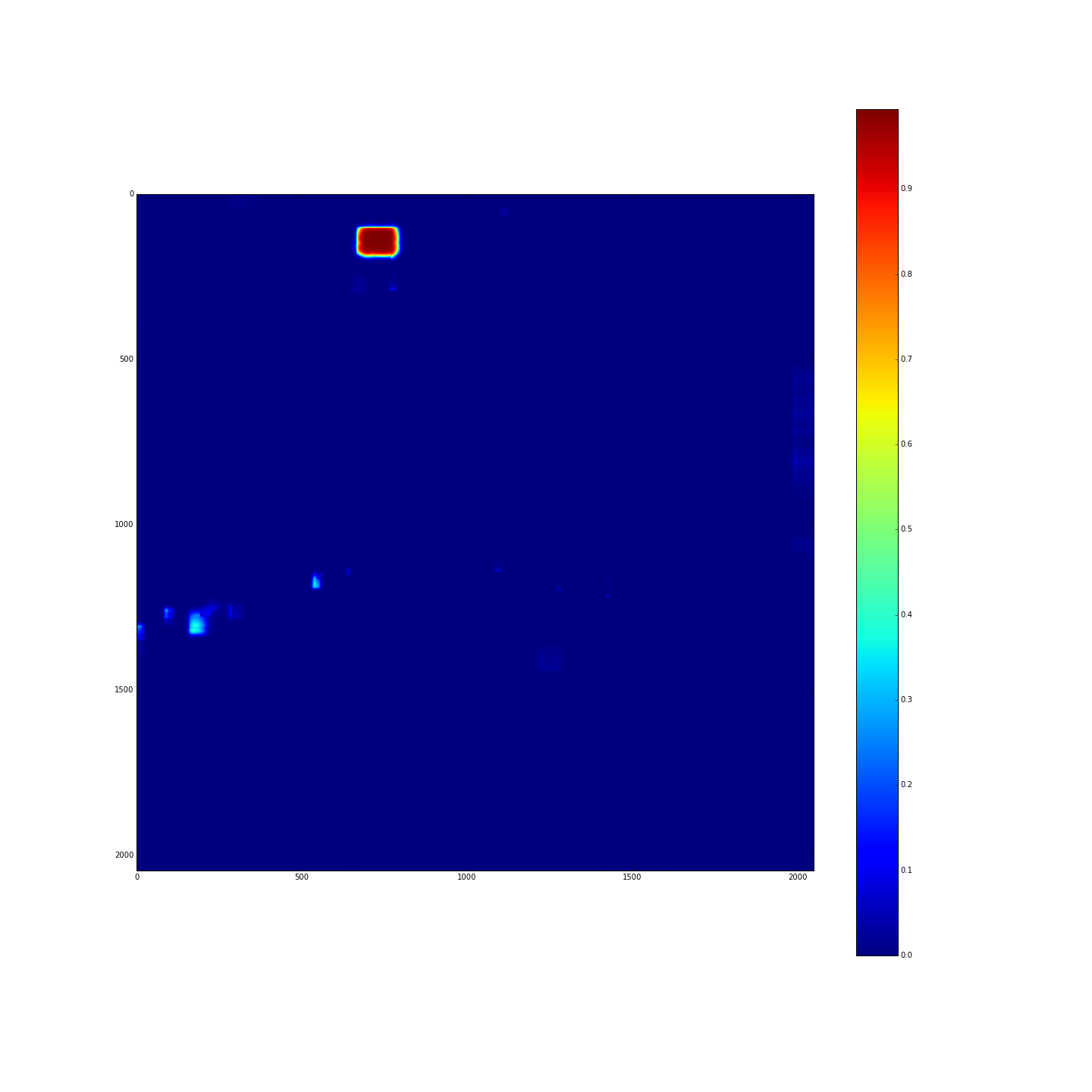

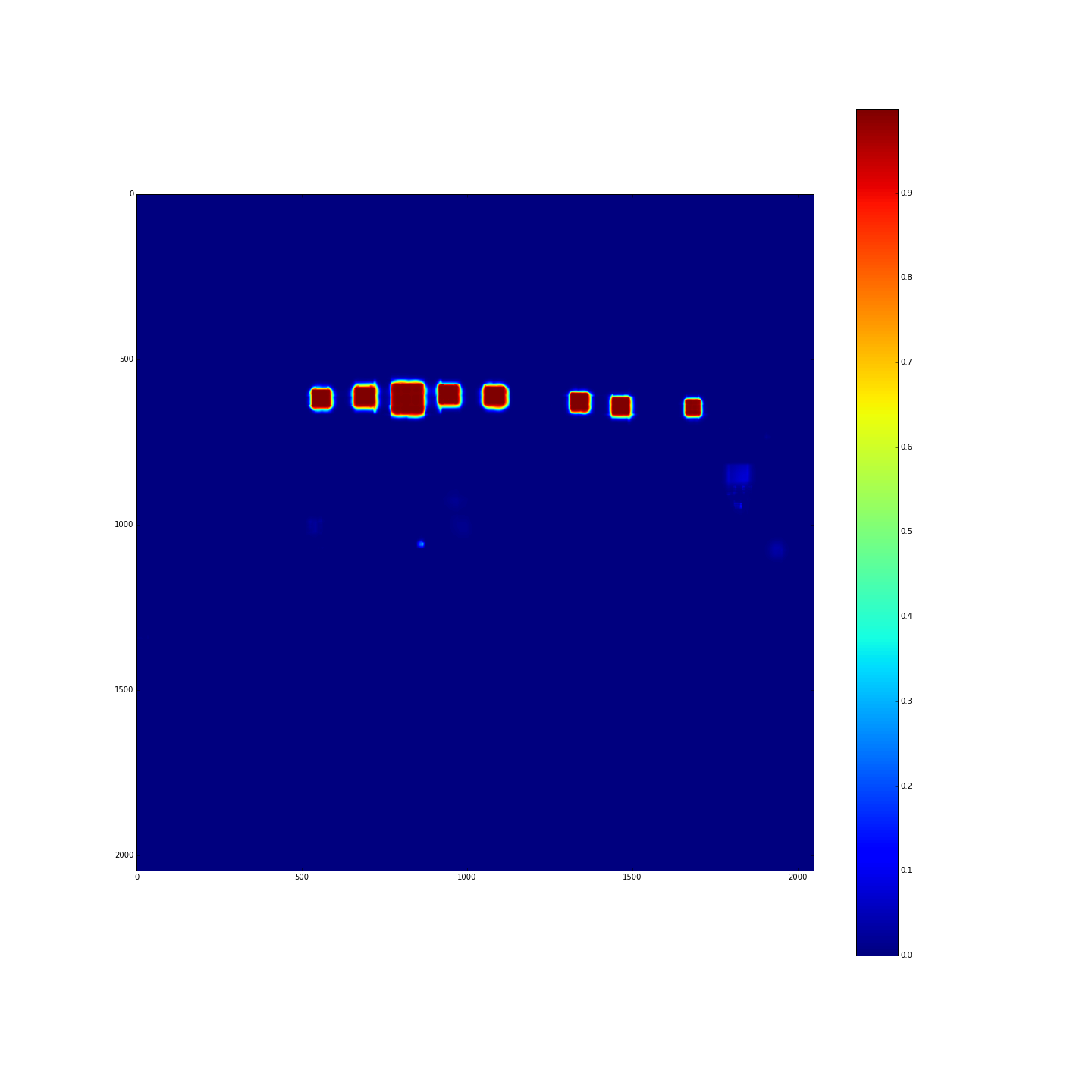

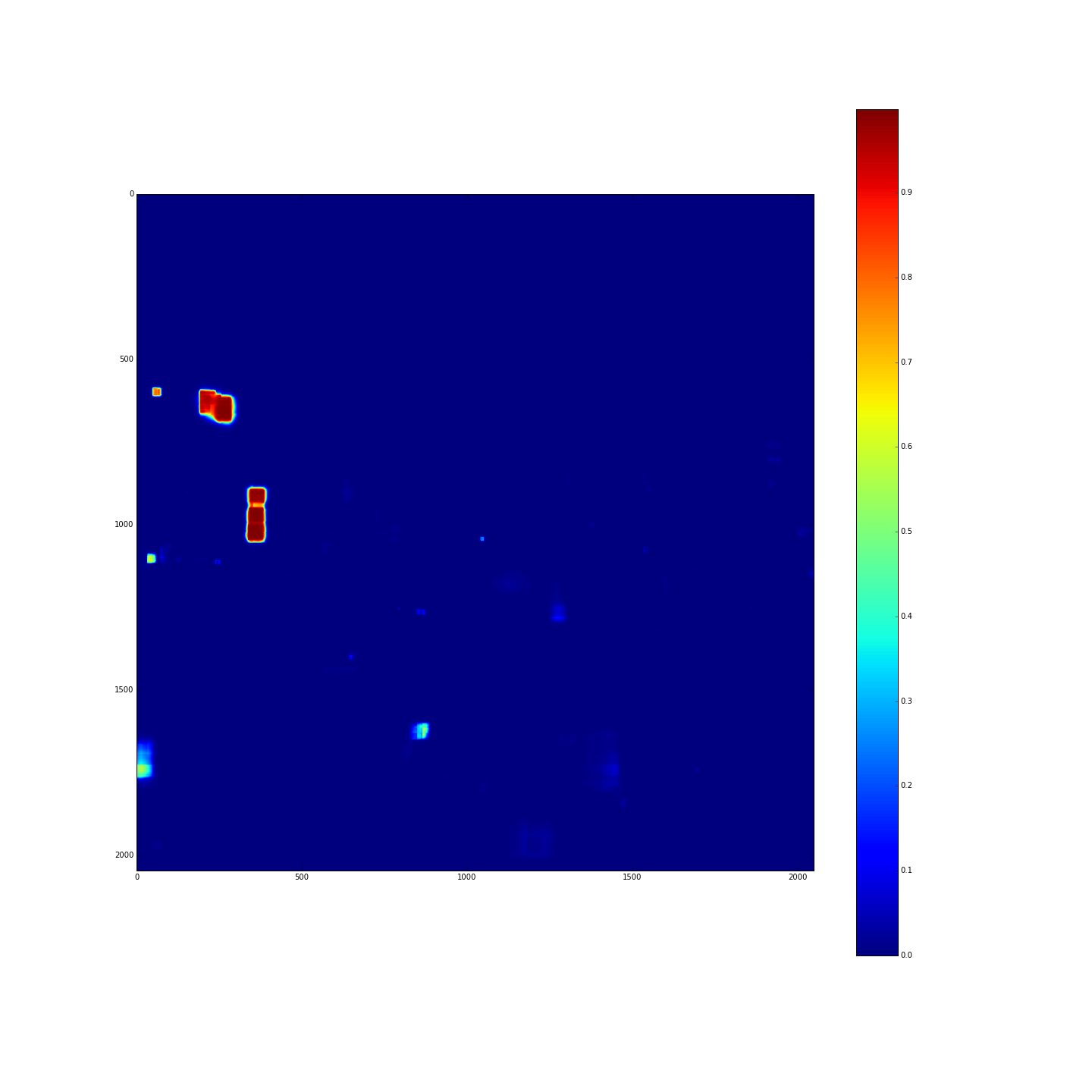

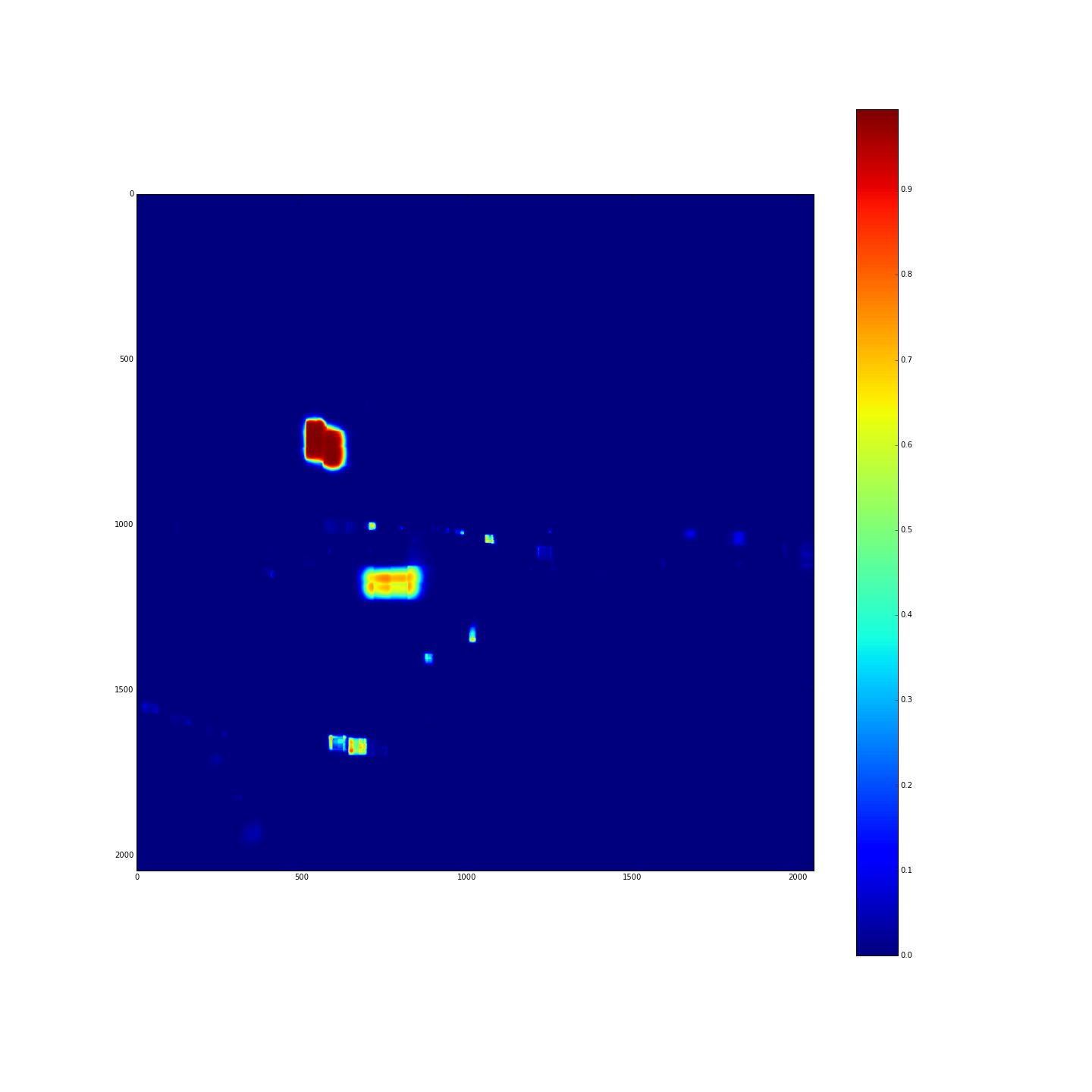

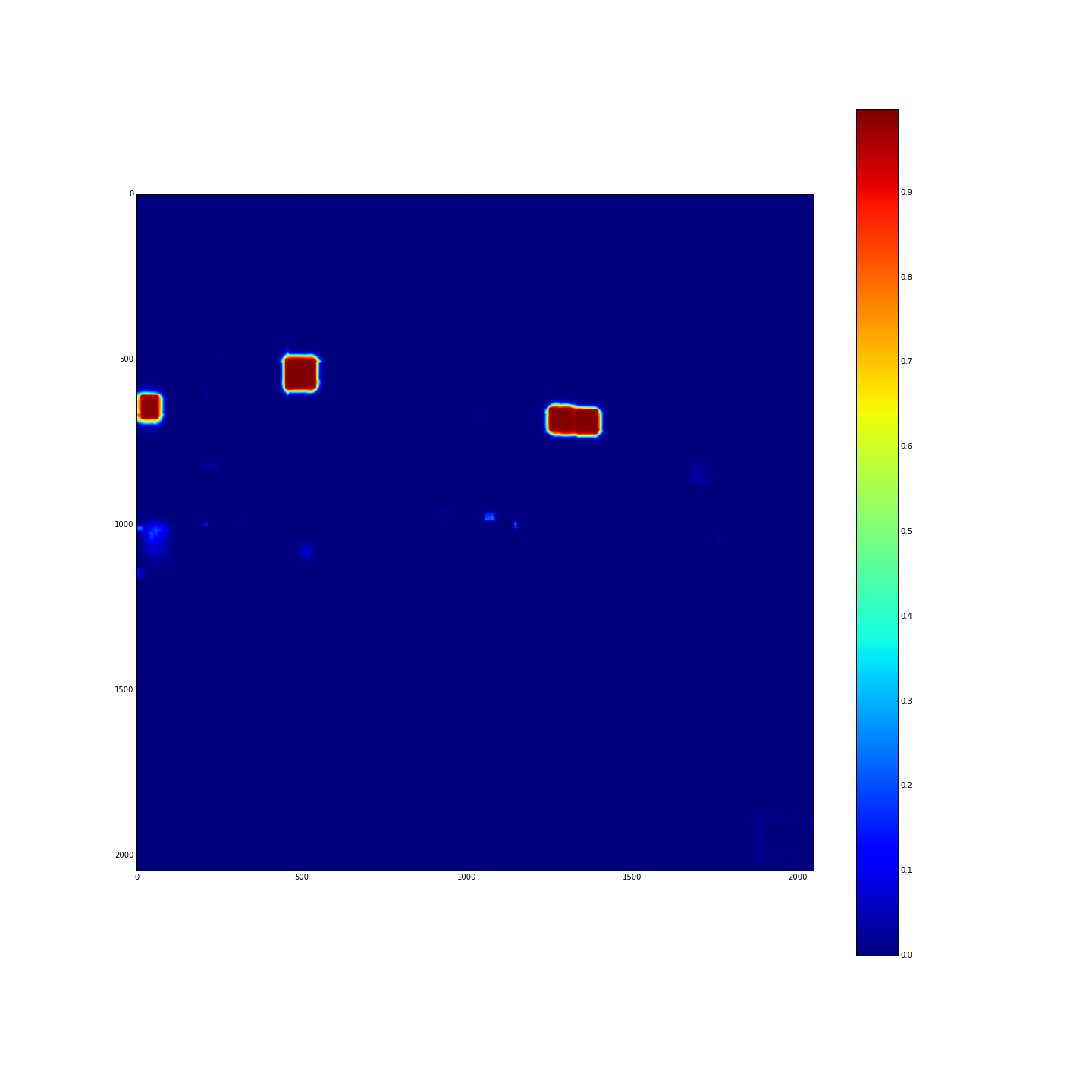

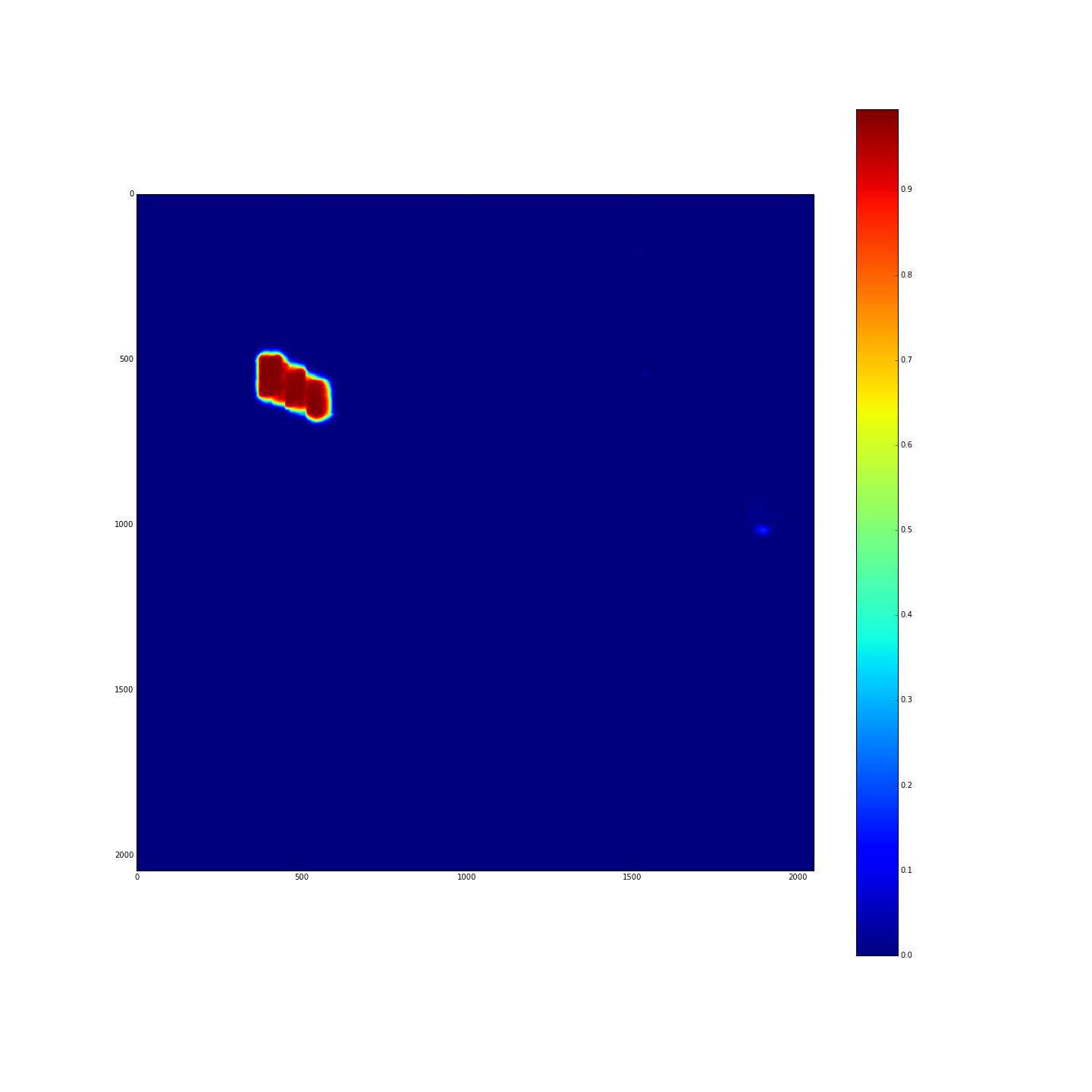

Although promising results have been achieved in traffic-sign detection and classification, few works have provided simultaneous solutions to these two tasks for realistic real-world images. We make two contributions to this problem. First, we have created a large traffic-sign benchmark from 100000 Tencent Street View panoramas, going beyond previous benchmarks. We call this benchmark Tsinghua-Tencent 100K. It provides 100000 images containing 30000 traffic-sign instances. These images cover large variations in illuminance and weather conditions. Each traffic-sign in the benchmark is annotated with a class label, its bounding box, and its pixel mask. Second, we demonstrate how a robust end-to-end convolutional neural network (CNN) can simultaneously detect and classify traffic-signs. Most previous CNN image processing solutions target objects that occupy a large proportion of an image, and such networks do not work well for target objects that occupy only a small fraction of an image, such as the traffic-signs here. Experimental results show the robustness of our network and its superiority to alternatives. The benchmark, source code, and the CNN model introduced in this paper are publicly available.
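To make the "small fraction of an image" point concrete, here is a minimal Python sketch of one annotated instance holding the three pieces of information listed above (class label, bounding box, pixel mask). The `SignAnnotation` class, its field names, the polygon encoding of the mask, the label string, and the 2048 x 2048 image size are all assumptions made for illustration; they are not the dataset's actual file format or API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class SignAnnotation:
    """Hypothetical in-memory record for one traffic-sign instance.

    This is an illustrative structure only, not the dataset's file format.
    """
    label: str                                # class label
    bbox: Tuple[float, float, float, float]   # (xmin, ymin, xmax, ymax) in pixels
    # Pixel mask stored as polygon vertices (an assumed encoding).
    mask: List[Tuple[float, float]] = field(default_factory=list)

    def area_fraction(self, image_width: int, image_height: int) -> float:
        """Return the share of the image area covered by the bounding box."""
        xmin, ymin, xmax, ymax = self.bbox
        box_area = max(0.0, xmax - xmin) * max(0.0, ymax - ymin)
        return box_area / (image_width * image_height)


# Example values are made up: a 40 x 40 pixel sign in an assumed 2048 x 2048 image.
sign = SignAnnotation(label="example_class", bbox=(1000.0, 900.0, 1040.0, 940.0))
fraction = sign.area_fraction(2048, 2048)
print(f"Sign covers {fraction:.4%} of the image")
```

Under these assumed numbers the sign covers well under 0.1% of the image, which illustrates why networks tuned for objects that fill most of the frame may struggle here.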

Paper:

Tutorial:

Tsinghua-Tencent 100K Tutorial

Dataset:

Images with annotations: Tsinghua-Tencent 100K Annotations 2016 (Code for Dataset)

Tsinghua-Tencent 100K Annotations 2021 (with more classes)

No-sign images: part1 (17.7G, 16555 images), part2 (17.8G, 16638 images), part3 (17.8G, 16582 images), part4 (17.9G, 16632 images), part5 (16.8G, 15690 images)

The Tsinghua-Tencent 100K dataset is released under the Creative Commons Attribution-NonCommercial (CC-BY-NC) license.

If you have any questions about the code or dataset, or wish to request commercial use, please contact Song-Hai Zhang (shz@tsinghua.edu.cn).

If you find our code and data useful, please cite our paper:

@InProceedings{Zhe_2016_CVPR,

author = {Zhu, Zhe and Liang, Dun and Zhang, Songhai and Huang, Xiaolei and Li, Baoli and Hu, Shimin},

title = {Traffic-Sign Detection and Classification in the Wild},

booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2016}

}