|

|

|

|

||

|

|

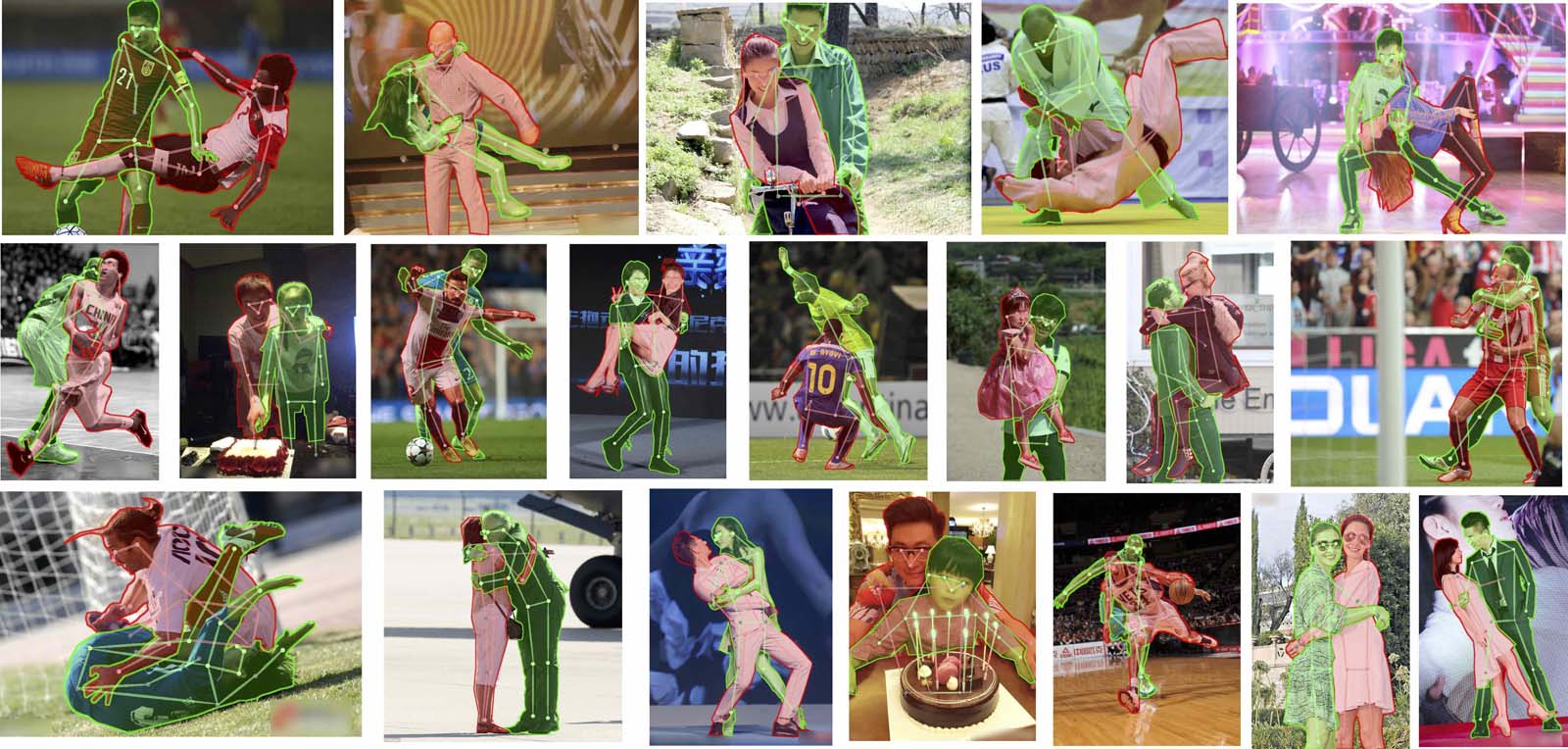

@InProceedings {zhang2018pose2seg,

title={Pose2Seg: Detection Free Human Instance Segmentation},

author={Zhang, Song-Hai and Li, Ruilong and Dong, Xin and Rosin, Paul and Cai, Zixi and Han, Xi and Yang, Dingcheng and Huang, Hao-Zhi and Hu, Shi-Min},

booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2019}

}

Tsinghua-Tencent Traffic Light

@Article{Lu2018,

author="Lu, Yifan and Lu, Jiaming and Zhang, Songhai and Hall, Peter",

title="Traffic signal detection and classification in street views using an attention model",

journal="Computational Visual Media",

year="2018",

month="Sep",

day="01",

volume="4",

number="3",

pages="253--266",

issn="2096-0662",

doi="10.1007/s41095-018-0116-x",

url="https://doi.org/10.1007/s41095-018-0116-x"

}

@InProceedings{Cao_2018_ECCV,

author = {Cao, Yan-Pei and Liu, Zheng-Ning and Kuang, Zheng-Fei and Kobbelt, Leif and Hu, Shi-Min},

title = {Learning to Reconstruct High-quality 3D Shapes with Cascaded Fully Convolutional Networks},

booktitle = {The European Conference on Computer Vision (ECCV)},

month = {September},

year = {2018}

}

@InProceedings{Zhe_2016_CVPR,

author = {Zhu, Zhe and Liang, Dun and Zhang, Songhai and Huang, Xiaolei and Li, Baoli and Hu, Shimin},

title = {Traffic-Sign Detection and Classification in the Wild},

booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2016}

}